Дескриптор процесса

В ядре дескриптором процесса является структура под названием task_struct,

которая хранит атрибуты процесса и информацию о нем.

Процесс взаимодействует со многими аспектами ядра , такими как управление памятью и шедулер.

Все структуры всех выполняемых процессов ядро хранит в связанном списке , называемом task_list.

Также имеется ссылка на текущий выполняемый процесс и соответственную task_struct ,

которая называется current.

Процесс может иметь один или несколько тредов.

Каждый тред имеет свою собственную task_struct , в которой имеется уникальный id-шник этого треда.

Треды живут в адресном пространстве процесса.

К важнейшим категориям дескриптора процесса относятся :

Посмотрим на саму структуру task_struct.

Она определена в файлк include/linux/sched.h:

-----------------------------------------------------------------------

include/linux/sched.h

384 struct task_struct {

385 volatile long state;

386 struct thread_info *thread_info;

387 atomic_t usage;

388 unsigned long flags;

389 unsigned long ptrace;

390

391 int lock_depth;

392

393 int prio, static_prio;

394 struct list_head run_list;

395 prio_array_t *array;

396

397 unsigned long sleep_avg;

398 long interactive_credit;

399 unsigned long long timestamp;

400 int activated;

401

302 unsigned long policy;

403 cpumask_t cpus_allowed;

404 unsigned int time_slice, first_time_slice;

405

406 struct list_head tasks;

407 struct list_head ptrace_children;

408 struct list_head ptrace_list;

409

410 struct mm_struct *mm, *active_mm;

...

413 struct linux_binfmt *binfmt;

414 int exit_code, exit_signal;

415 int pdeath_signal;

...

419 pid_t pid;

420 pid_t tgid;

...

426 struct task_struct *real_parent;

427 struct task_struct *parent;

428 struct list_head children;

429 struct list_head sibling;

430 struct task_struct *group_leader;

...

433 struct pid_link pids[PIDTYPE_MAX];

434

435 wait_queue_head_t wait_chldexit;

436 struct completion *vfork_done;

437 int __user *set_child_tid;

438 int __user *clear_child_tid;

439

440 unsigned long rt_priority;

441 unsigned long it_real_value, it_prof_value, it_virt_value;

442 unsigned long it_real_incr, it_prof_incr, it_virt_incr;

443 struct timer_list real_timer;

444 unsigned long utime, stime, cutime, cstime;

445 unsigned long nvcsw, nivcsw, cnvcsw, cnivcsw;

446 u64 start_time;

...

450 uid_t uid,euid,suid,fsuid;

451 gid_t gid,egid,sgid,fsgid;

452 struct group_info *group_info;

453 kernel_cap_t cap_effective, cap_inheritable, cap_permitted;

454 int keep_capabilities:1;

455 struct user_struct *user;

...

457 struct rlimit rlim[RLIM_NLIMITS];

458 unsigned short used_math;

459 char comm[16];

...

461 int link_count, total_link_count;

...

467 struct fs_struct *fs;

...

469 struct files_struct *files;

...

509 unsigned long ptrace_message;

510 siginfo_t *last_siginfo;

...

516 };

-----------------------------------------------------------------------

Атрибуты процесса

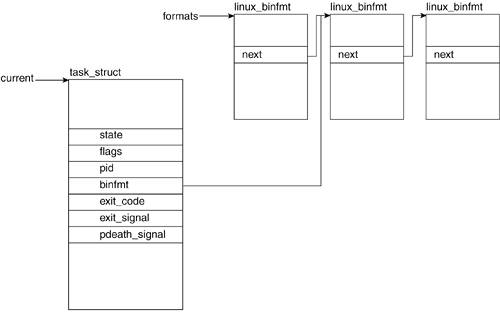

The process attribute category is a catch-all category we defined for task characteristics related to the state and identification of a task. Examining these fields' values at any time gives the kernel hacker an idea of the current status of a process. Figure 3.2 illustrates the process attributerelated fields of the task_struct.

3.2.1.1. state

The state field keeps track of the state a process finds itself in during its execution lifecycle. Possible values it can hold are TASK_RUNNING, TASK_INTERRUPTIBLE, TASK_UNINTERRUPTIBLE, TASK_ZOMBIE, TASK_STOPPED, and TASK_DEAD (see the "Process Lifespan" section in this chapter for more detail).

3.2.1.2. pid

In Linux, each process has a unique process identifier (pid). This pid is stored in the task_struct as a type pid_t. Although this type can be traced back to an integer type, the default maximum value of a pid is 32,768 (the value pertaining to a short int).

3.2.1.3. flags

Flags define special attributes that belong to the task. Per process flags are defined in include/linux/sched.h and include those flags listed in

Table 3.1. The flag's value provides the kernel hacker with more information regarding what the task is undergoing.

Table 3.1. Selected task_struct Flag's Field ValuesFlag Name | When Set |

|---|

PF_STARTING | Set when the process is being created. | PF_EXITING | Set during the call to do_exit(). | PF_DEAD | Set during the call to exit_notify() in the process of exiting. At this point, the state of the process is either TASK_ZOMBIE or TASK_DEAD. | PF_FORKNOEXEC | The parent upon forking sets this flag. |

3.2.1.4. binfmt

Linux supports a number of executable formats. An executable format is what defines the structure of how your program code is to be loaded into memory.

Figure 3.2 illustrates the association between the task_struct and the linux_binfmt struct, the structure that contains all the information related to a particular binary format (see

Chapter 9 for more detail).

3.2.1.5. exit_code and exit_signal

The exit_code and exit_signal fields hold a task's exit value and the terminating signal (if one was used). This is the way a child's exit value is passed to its parent.

3.2.1.6. pdeath_signal

pdeath_signal is a signal sent upon the parent's death.

3.2.1.7. comm

A process is often created by means of a command-line call to an executable. The comm field holds the name of the executable as it is called on the command line.

3.2.1.8. ptrace

ptrace is set when the ptrace() system call is called on the process for performance measurements. Possible ptrace() flags are defined in include/ linux/ptrace.h.

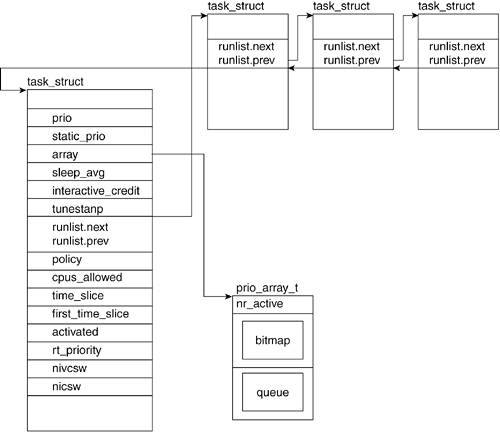

3.2.2. Scheduling Related Fields

A process operates as though it has its own virtual CPU. However, in reality, it shares the CPU with other processes. To sustain the switching between process executions, each process closely interrelates with the scheduler (see

Chapter 7 for more detail).

However, to understand some of these fields, you need to understand a few basic scheduling concepts. When more than one process is ready to run, the scheduler decides which one runs first and for how long. The scheduler achieves fairness and efficiency by allotting each process a timeslice and a priority. The timeslice defines the amount of time the process is allowed to execute before it is switched off for another process. The priority of a process is a value that defines the relative order in which it will be allowed to be executed with respect to other waiting processesthe higher the priority, the sooner it is scheduled to run. The fields shown in

Figure 3.3 keep track of the values necessary for scheduling purposes.

3.2.2.1. prio

In Chapter 7, we see that the dynamic priority of a process is a value that depends on the processes' scheduling history and the specified nice value. (See the following sidebar for more information about nice values.) It is updated at sleep time, which is when the process is not being executed and when timeslice is used up. This value, prio, is related to the value of the static_prio field described next. The prio field holds +/- 5 of the value of static_prio, depending on the process' history; it will get a +5 bonus if it has slept a lot and a -5 handicap if it has been a processing hog and used up its timeslice.

3.2.2.2. static_prio

static_prio is equivalent to the nice value. The default value of static_prio is MAX_PRIO-20. In our kernel, MAX_PRIO defaults to 140.

|

The nice() system call allows a user to modify the static scheduling priority of a process. The nice value can range from 20 to 19. The nice() function then calls set_user_nice() to set the static_prio field of the task_struct. The static_prio value is computed from the nice value by way of the PRIO_TO_NICE macro. Likewise, the nice value is computed from the static_prio value by means of a call to NICE_TO_PRIO.

---------------------------------------kernel/sched.c

#define NICE_TO_PRIO(nice) (MAX_RT_PRIO + nice + 20)

#define PRIO_TO_NICE(prio) ((prio MAX_RT_PRIO 20)

-----------------------------------------------------

|

3.2.2.3. run_list

The run_list field points to the runqueue. A runqueue holds a list of all the processes to run. See the "

Basic Structure" section for more information on the runqueue struct.

3.2.2.4. array

The array field points to the priority array of a runqueue. The "

Keeping Track of Processes: Basic Scheduler Construction" section in this chapter explains this array in detail.

3.2.2.5. sleep_avg

The sleep_avg field is used to calculate the effective priority of the task, which is the average amount of clock ticks the task has spent sleeping.

3.2.2.6. timestamp

The timestamp field is used to calculate the sleep_avg for when a task sleeps or yields.

3.2.2.7. interactive_credit

The interactive_credit field is used along with the sleep_avg and activated fields to calculate sleep_avg.

3.2.2.8. policy

The policy determines the type of process (for example, time sharing or real time). The type of a process heavily influences the priority scheduling. For more information on this field, see

Chapter 7.

3.2.2.9. cpus_allowed

The cpus_allowed field specifies which CPUs might handle a task. This is one way in which we can specify which CPU a particular task can run on when in a multiprocessor system.

3.2.2.10. time_slice

The time_slice field defines the maximum amount of time the task is allowed to run.

3.2.2.11. first_time_slice

The first_time_slice field is repeatedly set to 0 and keeps track of the scheduling time.

3.2.2.12. activated

The activated field keeps track of the incrementing and decrementing of sleep averages. If an uninterruptible task gets woken, this field gets set to -1.

3.2.2.13. rt_priority

rt_priority is a static value that can only be updated through schedule(). This value is necessary to support real-time tasks.

3.2.2.14. nivcsw and nvcsw

Different kinds of context switches exist. The kernel keeps track of these for profiling reasons. A global switch count gets set to one of the four different context switch counts, depending on the kind of transition involved in the context switch (see

Chapter 7 for more information on context switch). These are the counters for the basic context switch:

The nivcsw field (number of involuntary context switches) keeps count of kernel preemptions applied on the task. It gets incremented only upon a task's return from a kernel preemption where the switch count is set to nivcsw. The nvcsw field (number of voluntary context switches) keeps count of context switches that are not based on kernel preemption. The switch count gets set to nvcsw if the previous state was not an active preemption.

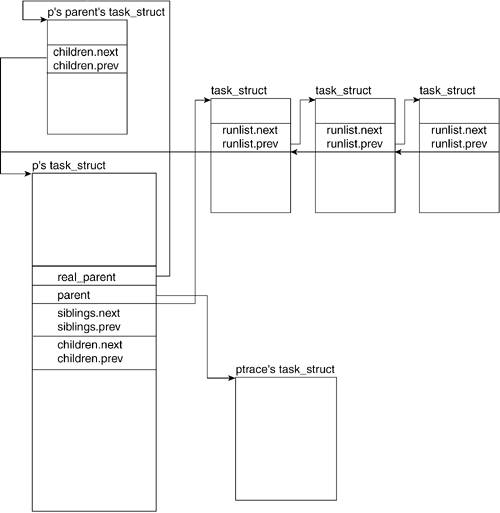

3.2.3. Process RelationsRelated Fields

The following fields of the task_struct are those related to process relationships. Each task or process p has a parent that created it. Process p can also create processes and, therefore, might have children. Because p's parent could have created more than one process, it is possible that process p might have siblings.

Figure 3.4 illustrates how the task_structs of all these processes relate.

3.2.3.1. real_parent

real_parent points to the current process' parent's description. It will point to the process descriptor of init() if the original parent of our current process has been destroyed. In previous kernels, this was known as p_opptr.

3.2.3.2. parent

parent is a pointer to the descriptor of the parent process. In

Figure 3.4, we see that this points to the ptrace task_struct. When ptrace is run on a process, the parent field of task_struct points to the ptrace process.

3.2.3.3. children

children is the struct that points to the list of our current process' children.

3.2.3.4. sibling

sibling is the struct that points to the list of the current process' siblings.

3.2.3.5. group_leader

A process can be a member of a group of processes, and each group has one process defined as the group leader. If our process is a member of a group, group_leader is a pointer to the descriptor of the leader of that group. A group leader generally owns the tty from which the process was created, called the controlling terminal.

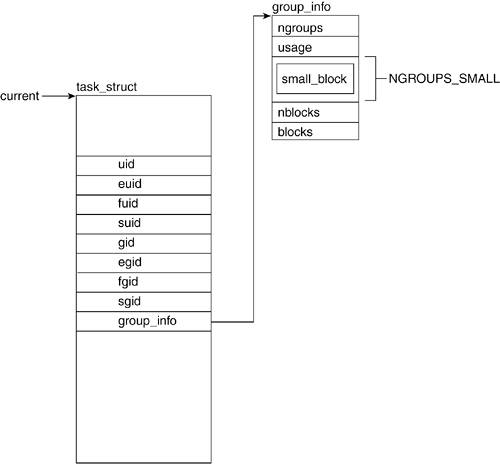

3.2.4. Process CredentialsRelated Fields

In multiuser systems, it is necessary to distinguish among processes that are created by different users. This is necessary for the security and protection of user data. To this end, each process has credentials that help the system determine what it can and cannot access.

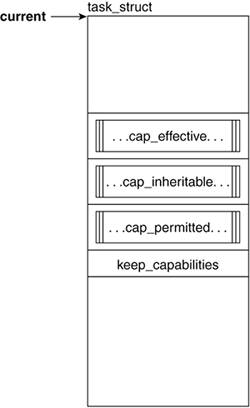

Figure 3.5 illustrates the fields in the task_struct related to process credentials.

3.2.4.1. uid and gid

The uid field holds the user ID number of the user who created the process. This field is used for protection and security purposes. Likewise, the gid field holds the group ID of the group who owns the process. A uid or gid of 0 corresponds to the root user and group.

3.2.4.2. euid and egid

The effective user ID usually holds the same value as the user ID field. This changes if the executed program has the set UID (SUID) bit on. In this case, the effective user ID is that of the owner of the program file. Generally, this is used to allow any user to run a particular program with the same permissions as another user (for example, root). The effective group ID works in much the same way, holding a value different from the gid field only if the set group ID (SGID) bit is on.

3.2.4.3. suid and sgid

suid (saved user ID) and sgid (saved group ID) are used in the setuid() system calls.

3.2.4.4. fsuid and fsgid

The fsuid and fsgid values are checked specifically for filesystem checks. They generally hold the same values as uid and gid except for when a setuid() system call is made.

3.2.4.5. group_info

In Linux, a user may be part of more than one group. These groups may have varying permissions with respect to system and data accesses. For this reason, the processes need to inherit this credential. The group_info field is a pointer to a structure of type group_info, which holds all the information regarding the various groups of which the process can be a member.

The group_info structure allows a process to associate with a number of groups that is bound by available memory. In

Figure 3.5, you can see that a field of group_info called small_block is an array of NGROUPS_SMALL (in our case, 32) gid_t units. If a task belongs to more than 32 groups, the kernel can allocate blocks or pages that hold the necessary number of gid_ts beyond NGROUPS_SMALL. The field nblocks holds the number of blocks allocated, while ngroups holds the value of units in the small_block array that hold a gid_t value.

3.2.5. Process CapabilitiesRelated Fields

Traditionally, UNIX systems offer process-related protection of certain accesses and actions by defining any given process as privileged (super user or UID = 0) or unprivileged (any other process). In Linux, capabilities were introduced to partition the activities previously available only to the superuser; that is, capabilities are individual "privileges" that may be conferred upon a process independently of each other and of its UID. In this manner, particular processes can be given permission to perform particular administrative tasks without necessarily getting all the privileges or having to be owned by the superuser. A capability is thus defined as a given administrative operation.

Figure 3.6 shows the fields that are related to process capabilities.

3.2.5.1. cap_effective, cap_inheritable, cap_permitted, and keep_capabilities

The structure used to support the capabilities model is defined in include/linux/security.h as an unsigned 32-bit value. Each 32-bit mask corresponds to a capability set; each capability is assigned a bit in each of:

cap_effective.

The capabilities that can be currently used by the process.

cap_inheritable.

The capabilities that are passed through a call to execve.

cap_permitted.

The capabilities that can be made either effective or inheritable. One way to understand the distinction between these three types is to consider the permitted capabilities to be similar to a trivialized gene pool made available by one's parents. Of the genetic qualities made available by one's parents, we can display a subset of them (effective qualities) and/or pass them on (inheritable). Permitted capabilities constitute more of a potentiality whereas effective capabilities are an actuality. Therefore, cap_effective and cap_inheritable are always subsets of cap_permitted.

keep_capabilities.

Keeps track of whether the process will drop or maintain its capabilities on a call to setuid().

Table 3.2 lists some of the supported capabilities that are defined in include/linux/capability.h.

Table 3.2. Selected CapabilitiesCapability | Description |

|---|

CAP_CHOWN | Ignores the restrictions imposed by chown() | CAP_FOWNER | Ignores file-permission restrictions | CAP_FSETID | Ignores setuid and setgid restrictions on files | CAP_KILL | Ignores ruid and euids when sending signals | CAP_SETGID | Ignores group-related permissions checks | CAP_SETUID | Ignores uid-related permissions checks | CAP_SETCAP | Allows a process to set its capabilities |

The kernel checks if a particular capability is set with a call to capable() passing as a parameter the capability variable. Generally, the function checks to see whether the capability bit is set in the cap_effective set; if so, it sets current->flags to PF_SUPERPRIV, which indicates that the capability is granted. The function returns a 1 if the capability is granted and 0 if capability is not granted.

Three system calls are associated with the manipulation of capabilities: capget(), capset(), and prctl(). The first two allow a process to get and set its capabilities, while the prctl() system call allows manipulation of current->keep_capabilities.

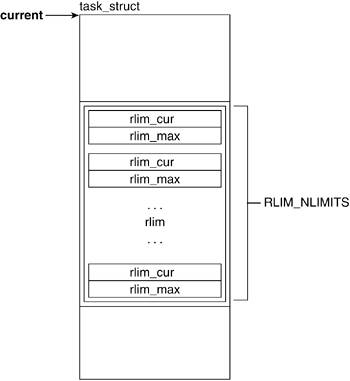

3.2.6. Process LimitationsRelated Fields

A task uses a number of the resources made available by hardware and the scheduler. To keep track of how they are used and any limitations that might be applied to a process, we have the following fields.

3.2.6.1. rlim

The rlim field holds an array that provides for resource control and accounting by maintaining resource limit values. Figure 3.7 illustrates the rlim field of the task_struct.

Linux recognizes the need to limit the amount of certain resources that a process is allowed to use. Because the kinds and amounts of resources processes might use varies from process to process, it is necessary to keep this information on a per process basis. What better place than to keep a reference to it in the process descriptor?

The rlimit descriptor (include/linux/resource.h) has the fields rlim_cur and rlim_max, which are the current and maximum limits that apply to that resource. The limit "units" vary by the kind of resource to which the structure refers.

-----------------------------------------------------------------------

include/linux/resource.h

struct rlimit {

unsigned long rlim_cur;

unsigned long rlim_max;

};

-----------------------------------------------------------------------

Table 3.3 lists the resources upon which their limits are defined in include/asm/resource.h. However, both x86 and PPC have the same resource limits list and default values.

Table 3.3. Resource Limits ValuesRL Name | Description | Default rlim_cur | Default rlim_max |

|---|

RLIMIT_CPU | The amount of CPU time in seconds this process may run. | RLIM_INFINITY | RLIM_INFINITY | RLIMIT_FSIZE | The size of a file in 1KB blocks. | RLIM_INFINITY | RLIM_INFINITY | RLIMIT_DATA | The size of the heap in bytes. | RLIM_INFINITY | RLIM_INFINITY | RLIMIT_STACK | The size of the stack in bytes. | _STK_LIM | RLIM_INFINITY | RLIMIT_CORE | The size of the core dump file. | 0 | RLIM_INFINITY | RLIMIT_RSS | The maximum resident set size (real memory). | RLIM_INFINITY | RLIM_INFINITY | RLIMIT_NPROC | The number of processes owned by this process. | 0 | 0 | RLIMIT_NOFILE | The number of open files this process may have at one time. | INR_OPEN | INR_OPEN | RLIMIT_MEMLOCK | Physical memory that can be locked (not swapped). | RLIM_INFINITY | RLIM_INFINITY | RLIMIT_AS | Size of process address space in bytes. | RLIM_INFINITY | RLIM_INFINITY | RLIMIT_LOCKS | Number of file locks. | RLIM_INFINITY | RLIM_INFINITY |

When a value is set to RLIM_INFINITY, the resource is unlimited for that process.

The current limit (rlim_cur) is a soft limit that can be changed via a call to setrlimit(). The maximum limit is defined by rlim_max and cannot be exceeded by an unprivileged process. The geTRlimit() system call returns the value of the resource limits. Both setrlimit() and getrlimit() take as parameters the resource name and a pointer to a structure of type rlimit.

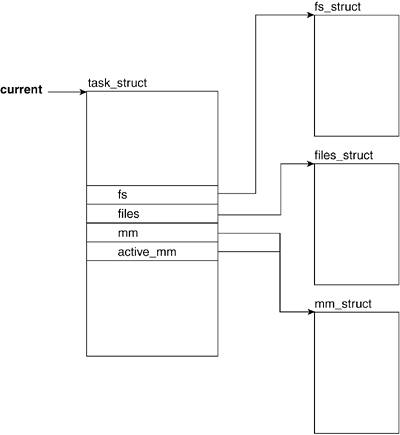

3.2.7. Filesystem- and Address SpaceRelated Fields

Processes can be heavily involved with files throughout their lifecycle, performing tasks such as opening, closing, reading, and writing. The task_struct has two fields that are associated with file- and filesystem-related data: fs and files (see

Chapter 6, "Filesystems," for more detail). The two fields related to address space are active_mm and mm (see

Chapter 4, "Memory Management," for more detail on mm_struct).

Figure 3.8 shows the filesystem- and address spacerelated fields of the task_struct.

3.2.7.1. fs

The fs field holds a pointer to filesystem information.

3.2.7.2. files

The files field holds a pointer to the file descriptor table for the task. This file descriptor holds pointers to files (more specifically, to their descriptors) that the task has open.

3.2.7.3. mm

mm points to address-space and memory-managementrelated information.

3.2.7.4. active_mm

active_mm is a pointer to the most recently accessed address space. Both the mm and active_mm fields start pointing at the same mm_struct.

Evaluating the process descriptor gives us an idea of the type of data that a process is involved with throughout its lifetime. Now, we can look at what happens throughout the lifespan of a process. The following sections explain the various stages and states of a process and go through the sample program line by line to explain what happens in the kernel.

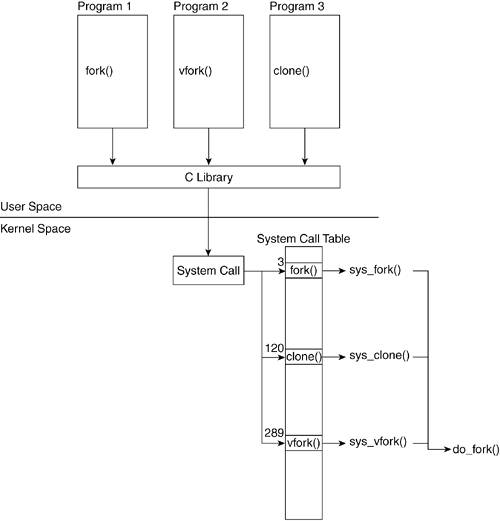

3.3. Process Creation: fork(), vfork(), and clone() System Calls

After the sample code is compiled into a file (in our case, an ELF executable), we call it from the command line. Look at what happens when we press the Return key. We already mentioned that any given process is created by another process. The operating system provides the functionality to do this by means of the fork(), vfork(), and clone() system calls.

The C library provides three functions that issue these three system calls. The prototypes of these functions are declared in <unistd.h>.

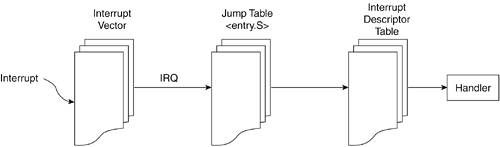

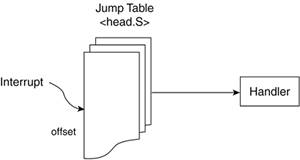

Figure 3.9 shows how a process that calls fork() executes the system call sys_fork(). This figure describes how kernel code performs the actual process creation. In a similar manner, vfork() calls sys_fork(), and clone() calls sys_clone().

All three of these system calls eventually call do_fork(), which is a kernel function that performs the bulk of the actions related to process creation. You might wonder why three different functions are available to create a process. Each function slightly differs in how it creates a process, and there are specific reasons why one would be chosen over the other.

When we press Return at the shell prompt, the shell creates the new process that executes our program by means of a call to fork(). In fact, if we type the command ls at the shell and press Return, the pseudocode of the shell at that moment looks something like this:

if( (pid = fork()) == 0 )

execve("foo");

else

waitpid(pid);

We can now look at the functions and trace them down to the system call. Although our program calls fork(), it could just as easily have called vfork() or clone(), which is why we introduced all three functions in this section. The first function we look at is fork(). We delve through the calls fork(), sys_fork(), and do_fork(). We follow that with vfork() and finally look at clone() and trace them down to the do_fork() call.

3.3.1. fork() Function

The fork() function returns twice: once in the parent and once in the child process. If it returns in the child process, fork() returns 0. If it returns in the parent, fork() returns the child's PID. When the fork() function is called, the function places the necessary information in the appropriate registers, including the index into the system call table where the pointer to the system call resides. The processor we are running on determines the registers into which this information is placed.

At this point, if you want to continue the sequential ordering of events, look at the "

Interrupts" section in this chapter to see how sys_fork() is called. However, it is not necessary to understand how a new process gets created.

Let's now look at the sys_fork() function. This function does little else than call the do_fork() function. Notice that the sys_fork() function is architecture dependent because it accesses function parameters passed in through the system registers.

-----------------------------------------------------------------------

arch/i386/kernel/process.c

asmlinkage int sys_fork(struct pt_regs regs)

{

return do_fork(SIGCHLD, regs.esp, ®s, 0, NULL, NULL);

}

-----------------------------------------------------------------------

-----------------------------------------------------------------------

arch/ppc/kernel/process.c

int sys_fork(int p1, int p2, int p3, int p4, int p5, int p6,

struct pt_regs *regs)

{

CHECK_FULL_REGS(regs);

return do_fork(SIGCHLD, regs->gpr[1], regs, 0, NULL, NULL);

}

-----------------------------------------------------------------------

The two architectures take in different parameters to the system call. The structure pt_regs holds information such as the stack pointer. The fact that gpr[1] holds the stack pointer in PPC, whereas %esp holds the stack pointer in x86, is known by convention.

3.3.2. vfork() Function

The vfork() function is similar to the fork() function with the exception that the parent process is blocked until the child calls exit() or exec().

sys_vfork()

arch/i386/kernel/process.c

asmlinkage int sys_vfork(struct pt_regs regs)

{

return do_fork(CLONE_VFORK | CLONE_VM | SIGCHLD, regs.ep, ®s, 0, NULL, NULL);

}

-----------------------------------------------------------------------

arch/ppc/kernel/process.c

int sys_vfork(int p1, int p2, int p3, int p4, int p5, int p6,

struct pt_regs *regs)

{

CHECK_FULL_REGS(regs);

return do_fork(CLONE_VFORK | CLONE_VM | SIGCHLD, regs->gpr[1],

regs, 0, NULL, NULL);

}

-----------------------------------------------------------------------

The only difference between the calls to sys_fork() in sys_vfork() and sys_fork() are the flags that do_fork() is passed. The presence of these flags are used later to determine if the added behavior just described (of blocking the parent) will be executed.

3.3.3. clone() Function

The clone() library function, unlike fork() and vfork(), takes in a pointer to a function along with its argument. The child process created by do_fork()calls this function as soon as it gets created.

-----------------------------------------------------------------------

sys_clone()

arch/i386/kernel/process.c

asmlinkage int sys_clone(struct pt_regs regs)

{

unsigned long clone_flags;

unsigned long newsp;

int __user *parent_tidptr, *child_tidptr;

clone_flags = regs.ebx;

newsp = regs.ecx;

parent_tidptr = (int __user *)regs.edx;

child_tidptr = (int __user *)regs.edi;

if (!newsp)

newsp = regs.esp;

return do_fork(clone_flags & ~CLONE_IDLETASK, newsp, ®s, 0, parent_tidptr,

child_tidptr);

}

-----------------------------------------------------------------------

-----------------------------------------------------------------------

arch/ppc/kernel/process.c

int sys_clone(unsigned long clone_flags, unsigned long usp,

int __user *parent_tidp, void __user *child_thread\

ptr,

int __user *child_tidp, int p6,

struct pt_regs *regs)

{

CHECK_FULL_REGS(regs);

if (usp == 0)

usp = regs->gpr[1]; /* stack pointer for chi\

ld */

return do_fork(clone_flags & ~CLONE_IDLETASK, usp, regs,\

0,

parent_tidp, child_tidp);

}

----------------------------------------------------------------------- child_tidptr);

}

-----------------------------------------------------------------------

-----------------------------------------------------------------------

arch/ppc/kernel/process.c

int sys_clone(unsigned long clone_flags, unsigned long usp,

int __user *parent_tidp, void __user *child_thread\

ptr,

int __user *child_tidp, int p6,

struct pt_regs *regs)

{

CHECK_FULL_REGS(regs);

if (usp == 0)

usp = regs->gpr[1]; /* stack pointer for chi\

ld */

return do_fork(clone_flags & ~CLONE_IDLETASK, usp, regs,\

0,

parent_tidp, child_tidp);

}

-----------------------------------------------------------------------

As Table 3.4 shows, the only difference between fork(), vfork(), and clone() is which flags are set in the subsequent calls to do_fork().

Table 3.4. Flags Passed to do_fork by fork(), vfork(), and clone()| | fork() | vfork() | clone() |

|---|

SIGCHLD | X | X | | CLONE_VFORK | | X | | CLONE_VM | | X | |

Finally, we get to do_fork(), which performs the real process creation. Recall that up to this point, we only have the parent executing the call to fork(), which then enables the system call sys_fork(); we still do not have a new process. Our program foo still exists as an executable file on disk. It is not running or in memory.

3.3.4. do_fork() Function

We follow the kernel side execution of do_fork() line by line as we describe the details behind the creation of a new process.

-----------------------------------------------------------------------

kernel/fork.c

1167 long do_fork(unsigned long clone_flags,

1168 unsigned long stack_start,

1169 struct pt_regs *regs,

1170 unsigned long stack_size,

1171 int __user *parent_tidptr,

1172 int __user *child_tidptr)

1173 {

1174 struct task_struct *p;

1175 int trace = 0;

1176 long pid;

1177

1178 if (unlikely(current->ptrace)) {

1179 trace = fork_traceflag (clone_flags);

1180 if (trace)

1181 clone_flags |= CLONE_PTRACE;

1182 }

1183

1184 p = copy_process(clone_flags, stack_start, regs, stack_size, parent_tidptr,

child_tidptr);

----------------------------------------------------------------------- child_tidptr);

-----------------------------------------------------------------------

Lines 11781183

The code begins by verifying if the parent wants the new process ptraced. ptracing references are prevalent within functions dealing with processes. This book explains only the ptrace references at a high level. To determine whether a child can be traced, fork_traceflag() must verify the value of clone_flags. If CLONE_VFORK is set in clone_flags, if SIGCHLD is not to be caught by the parent, or if the current process also has PT_TRACE_FORK set, the child is traced, unless the CLONE_UNTRACED or CLONE_IDLETASK flags have also been set.

Line 1184

This line is where a new process is created and where the values in the registers are copied out. The copy_process() function performs the bulk of the new process space creation and descriptor field definition. However, the start of the new process does not take place until later. The details of copy_process() make more sense when the explanation is scheduler-centric. See the "

Keeping Track of Processes: Basic Scheduler Construction" section in this chapter for more detail on what happens here.

-----------------------------------------------------------------------

kernel/fork.c

...

1189 pid = IS_ERR(p) ? PTR_ERR(p) : p->pid;

1190

1191 if (!IS_ERR(p)) {

1192 struct completion vfork;

1193

1194 if (clone_flags & CLONE_VFORK) {

1195 p->vfork_done = &vfork;

1196 init_completion(&vfork);

1197 }

1198

1199 if ((p->ptrace & PT_PTRACED) || (clone_flags & CLONE_STOPPED)) {

...

1203 sigaddset(&p->pending.signal, SIGSTOP);

1204 set_tsk_thread_flag(p, TIF_SIGPENDING);

1205 }

...

-----------------------------------------------------------------------

Line 1189

This is a check for pointer errors. If we find a pointer error, we return the pointer error without further ado.

Lines 11941197

At this point, check if do_fork() was called from vfork(). If it was, enable the wait queue involved with vfork().

Lines 11991205

If the parent is being traced or the clone is set to CLONE_STOPPED, the child is issued a SIGSTOP signal upon startup, thus starting in a stopped state.

-----------------------------------------------------------------------

kernel/fork.c

1207 if (!(clone_flags & CLONE_STOPPED)) {

...

1222 wake_up_forked_process(p);

1223 } else {

1224 int cpu = get_cpu();

1225

1226 p->state = TASK_STOPPED;

1227 if (!(clone_flags & CLONE_STOPPED))

1228 wake_up_forked_process(p); /* do this last */

1229 ++total_forks;

1230

1231 if (unlikely (trace)) {

1232 current->ptrace_message = pid;

1233 ptrace_notify ((trace << 8) | SIGTRAP);

1234 }

1235

1236 if (clone_flags & CLONE_VFORK) {

1237 wait_for_completion(&vfork);

1238 if (unlikely (current->ptrace & PT_TRACE_VFORK_DONE))

1239 ptrace_notify ((PTRACE_EVENT_VFORK_DONE << 8) | SIGTRAP);

1240 } else

...

1248 set_need_resched();

1249 }

1250 return pid;

1251 }

-----------------------------------------------------------------------

Lines 12261229

In this block, we set the state of the task to TASK_STOPPED. If the CLONE_STOPPED flag was not set in clone_flags, we wake up the child process; otherwise, we leave it waiting for its wakeup signal.

Lines 12311234

If ptracing has been enabled on the parent, we send a notification.

Lines 12361239

If this was originally a call to vfork(), this is where we set the parent to blocking and send a notification to the trace if enabled. This is implemented by the parent being placed in a wait queue and remaining there in a TASK_UNINTERRUPTIBLE state until the child calls exit() or execve().

Line 1248

We set need_resched in the current task (the parent). This allows the child process to run first.

|

3.4. Process Lifespan

Now that we have seen how a process is created, we need to look at what happens during the course of its lifespan. During this time, a process can find itself in various states. The transition between these states depends on the actions that the process performs and on the nature of the signals sent to it. Our example program has found itself in the TASK_INTERRUPTIBLE state and in TASK_RUNNING (its current state).

The first state a process state is set to is TASK_INTERRUPTIBLE. This occurs during process creation in the copy_process() routine called by do_fork(). The second state a process finds itself in is TASK_RUNNING, which is set prior to exiting do_fork(). These two states are guaranteed in the life of the process. Following those two states, many variables come into play that determine what states the process will find itself in. The last state a process is set to is TASK_ZOMBIE, during the call to do_exit(). Let's look at the various process states and the manner in which the transitions from one state to the next occur. We point out how our process proceeds from one state to another.

3.4.1. Process States

When a process is running, it means that its context has been loaded into the CPU registers and memory and that the program that defines this context is being executed. At any particular time, a process might be unable to run for a number of reasons. A process might be unable to continue running because it is waiting for input that is not present or the scheduler may have decided it has run the maximum amount of time units allotted and that it must yield to another process. A process is considered ready when it's not running but is able to run (as with the rescheduling) or blocked when waiting for input.

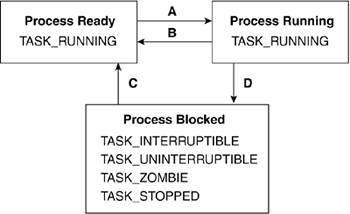

Figure 3.10 shows the abstract process states and underlies the possible Linux task states that correspond to each abstract state. Table 3.5 outlines the four transitions and how it is brought about. Table 3.6 associates the abstract states with the values used in the Linux kernel to identify those states.

Table 3.5. Summary of TransitionsTransition | Agent of Transition |

|---|

Ready to Running (A) | Selected by scheduler | Running to Ready (B) | Timeslice ends (inactive)

Process yields (active) | Blocked to Ready (C ) | Signal comes in

Resource becomes available | Running to Blocked (D) | Process sleeps or waits on something |

Table 3.6. Association of Linux Flags with Abstract Process StatesAbstract State | Linux Task States |

|---|

Ready | TASK_RUNNING | Running | TASK_RUNNING | Blocked | TASK_INTERRUPTIBLE

TASK_UNINTERRUPTIBLE

TASK_ZOMBIE

TASK_STOPPED |

NOTE

The set_current_state() process state can be set if access to the task struct is available by a direct assignment setting such as current->state= TASK_INTERRUPTIBLE. A call to set_current_state(TASK_INTERRUPTIBLE) will perform the same effect.

3.4.2. Process State Transitions

We now look at the kinds of events that would cause a process to go from one state to another. The abstract process transitions (refer to Table 3.5) include the transition from the ready state to the running state, the transition from the running state to the ready state, the transitions from the blocked state to the ready state, and the transition from the running state to the blocked state. Each transition can translate into more than one transition between different Linux task states. For example, going from blocked to running could translate to going from any one of TASK_ INTERRUPTIBLE, TASK_UNINTERRUPTIBLE, TASK_ZOMBIE, or TASK_STOPPED to TASK_RUNNING. Figure 3.11 and Table 3.7 describe these transitions.

Table 3.7. Summary of Task TransitionsStart Linux Task State | End Linux Task State | Agent of Transition |

|---|

TASK_RUNNING | TASK_UNINTERRUPTIBLE | Process enters wait queue. | TASK_RUNNING | TASK_INTERRUPTIBLE | Process enters wait queue. | TASK_RUNNING | TASK_STOPPED | Process receives SIGSTOP signal or process is being traced. | TASK_RUNNING | TASK_ZOMBIE | Process is killed but parent has not called sys_wait4(). | TASK_INTERRUPTIBLE | TASK_STOPPED | During signal receipt. | TASK_UNINTERRUPTIBLE | TASK_STOPPED | During waking up. | TASK_UNINTERRUPTIBLE | TASK_RUNNING | Process has received the resource it was waiting for. | TASK_INTERRUPTIBLE | TASK_RUNNING | Process has received the resource it was waiting for or has been set to running as a result of a signal it received. | TASK_RUNNING | TASK_RUNNING | Moved in and out by the scheduler. |

We now explain the various state transitions detailing the Linux task state transitions under the general process transition categories.

3.4.2.1. Ready to Running

The abstract process state transition of "ready to running" does not correspond to an actual Linux task state transition because the state does not actually change (it stays as TASK_RUNNING). However, the process goes from being in a queue of ready to run tasks (or run queue) to actually being run by the CPU.

TASK_RUNNING to TASK_RUNNING

Linux does not have a specific state for the task that is currently using the CPU, and the task retains the state of TASK_RUNNING even though the task moves out of a queue and its context is now executing. The scheduler selects the task from the run queue.

Chapter 7 discusses how the scheduler selects the next task to set to running.

3.4.2.2. Running to Ready

In this situation, the task state does not change even though the task itself undergoes a change. The abstract process state transition helps us understand what is happening. As previously stated, a process goes from running to being ready to run when it transitions from being run by the CPU to being placed in the run queue.

TASK_RUNNING to TASK_RUNNING

Because Linux does not have a separate state for the task whose context is being executed by the CPU, the task does not suffer an explicit Linux task state transition when this occurs and stays in the TASK_RUNNING state. The scheduler selects when to switch out a task from being run to being placed in the run queue according to the time it has spent executing and the task's priority (

Chapter 7 covers this in detail).

3.4.2.3. Running to Blocked

When a process gets blocked, it can be in one of the following states: TASK_INTERRUPTIBLE, TASK_UNINTERRUPTIBLE, TASK_ZOMBIE, or TASK_STOPPED. We now describe how a task gets to be in each of these states from TASK_RUNNING, as detailed in Table 3.7.

TASK_RUNNING to TASK_INTERRUPTIBLE

This state is usually called by blocking I/O functions that have to wait on an event or resource. What does it mean for a task to be in the TASK_INTERRUPTIBLE state? Simply that it is not on the run queue because it is not ready to run. A task in TASK_INTERRUPTIBLE wakes up if its resource becomes available (time or hardware) or if a signal comes in. The completion of the original system call depends on the implementation of the interrupt handler. In the code example, the child process accesses a file that is on disk. The disk driver is in charge of knowing when the device is ready for the data to be accessed. Hence, the driver will have code that looks something like this:

while(1)

{

if(resource_available)

break();

set_current_state(TASK_INTERRUPTIBLE);

schedule();

}

set_current_state(TASK_RUNNING);

The example process enters the TASK_INTERRUPTIBLE state at the time it performs the call to open(). At this point, it is removed from being the running process by the call to schedule(), and another process that the run queue selects becomes the running process. After the resource becomes available, the process breaks out of the loop and sets the process' state to TASK_RUNNING, which puts it back on the run queue. It then waits until the scheduler determines that it is the process' turn to run.

The following listing shows the function interruptible_sleep_on(), which can set a task in the TASK_INTERRUPTIBLE state:

-----------------------------------------------------------------------

kernel/sched.c

2504 void interruptible_sleep_on(wait_queue_head_t *q)

2505 {

2506 SLEEP_ON_VAR

2507

2508 current->state = TASK_INTERRUPTIBLE;

2509

2510 SLEEP_ON_HEAD

2511 schedule();

2512 SLEEP_ON_TAIL

2513 }

-----------------------------------------------------------------------

The SLEEP_ON_HEAD and the SLEEP_ON_TAIL macros take care of adding and removing the task from the wait queue (see the "

Wait Queues" section in this chapter). The SLEEP_ON_VAR macro initializes the task's wait queue entry for addition to the wait queue.

TASK_RUNNING to TASK_UNINTERRUPTIBLE

The TASK_UNINTERRUPTIBLE state is similar to TASK_INTERRUPTIBLE with the exception that processes do not heed signals that come in while it is in kernel mode. This state is also the default state into which a task is set when it is initialized during creation in do_fork(). The sleep_on() function is called to set a task in the TASK_UNINTERRUPTIBLE state.

-----------------------------------------------------------------------

kernel/sched.c

2545 long fastcall __sched sleep_on(wait_queue_head_t *q)

2546 {

2547 SLEEP_ON_VAR

2548

2549 current->state = TASK_UNINTERRUPTIBLE;

2550

2551 SLEEP_ON_HEAD

2552 schedule();

2553 SLEEP_ON_TAIL

2554

2555 return timeout;

2556 }

-----------------------------------------------------------------------

This function sets the task on the wait queue, sets its state, and calls the scheduler.

TASK_RUNNING to TASK_ZOMBIE

A process in the TASK_ZOMBIE state is called a zombie process. Each process goes through this state in its lifecycle. The length of time a process stays in this state depends on its parent. To understand this, realize that in UNIX systems, any process may retrieve the exit status of a child process by means of a call to wait() or waitpid() (see the "

Parent Notification and sys_wait4()" section). Hence, minimal information needs to be available to the parent, even once the child terminates. It is costly to keep the process alive just because the parent needs to know its state; hence, the zombie state is one in which the process' resources are freed and returned but the process descriptor is retained.

This temporary state is set during a process' call to sys_exit() (see the "

Process Termination" section for more information). Processes in this state will never run again. The only state they can go to is the TASK_STOPPED state.

If a task stays in this state for too long, the parent task is not reaping its children. A zombie task cannot be killed because it is not actually alive. This means that no task exists to kill, merely the task descriptor that is waiting to be released.

TASK_RUNNING to TASK_STOPPED

This transition will be seen in two cases. The first case is processes that a debugger or a trace utility is manipulating. The second is if a process receives SIGSTOP or one of the stop signals.

TASK_UNINTERRUPTIBLE or TASK_INTERRUPTIBLE to TASK_STOPPED

TASK_STOPPED manages processes in SMP systems or during signal handling. A process is set to the TASK_STOPPED state when the process receives a wake-up signal or if the kernel specifically needs the process to not respond to anything (as it would if it were set to TASK_INTERRUPTIBLE, for example).

Unlike a task in state TASK_ZOMBIE, a process in state TASK_STOPPED is still able to receive a SIGKILL signal.

3.4.2.4. Blocked to Ready

The transition of a process from blocked to ready occurs upon acquisition of the data or hardware on which the process was waiting. The two Linux-specific transitions that occur under this category are TASK_INTERRUPTIBLE to TASK_RUNNING and TASK_UNINTERRUPTIBLE to TASK_RUNNING.

3.5. Process Termination

A process can terminate voluntarily and explicitly, voluntarily and implicitly, or involuntarily. Voluntary termination can be attained in two ways:

Returning from the main() function (implicit) Calling exit() (explicit)

Executing a return from the main() function literally translates into a call to exit(). The linker introduces the call to exit() under these circumstances.

Involuntary termination can be attained in three ways:

The process might receive a signal that it cannot handle. An exception might be raised during its kernel mode execution. The process might have received the SIGABRT or other termination signal.

The termination of a process is handled differently depending on whether the parent is alive or dead. A process can

In the first case, the child is turned into a zombie process until the parent makes the call to wait/waitpid(). In the second case, the child's parent status will have been inherited by the init() process. We see that when any process terminates, the kernel reviews all the active processes and verifies whether the terminating process is parent to any process that is still alive and active. If so, it changes that child's parent PID to 1.

Let's look at the example again and follow it through its demise. The process explicitly calls exit(0). (Note that it could have just as well called _exit(), return(0), or fallen off the end of main with neither call.) The exit() C library function then calls the sys_exit() system call. We can review the following code to see what happens to the process from here onward.

We now look at the functions that terminate a process. As previously mentioned, our process foo calls exit(), which calls the first function we look at, sys_exit(). We delve through the call to sys_exit() and into the details of do_exit().

3.5.1. sys_exit() Function

-----------------------------------------------------------------------

kernel/exit.c

asmlinkage long sys_exit(int error_code)

{

do_exit((error_code&0xff)<<8);

}

-----------------------------------------------------------------------

sys_exit() does not vary between architectures, and its job is fairly straightforwardall it does is call do_exit() and convert the exit code into the format required by the kernel.

3.5.2. do_exit() Function

-----------------------------------------------------------------------

kernel/exit.c

707 NORET_TYPE void do_exit(long code)

708 {

709 struct task_struct *tsk = current;

710

711 if (unlikely(in_interrupt()))

712 panic("Aiee, killing interrupt handler!");

713 if (unlikely(!tsk->pid))

714 panic("Attempted to kill the idle task!");

715 if (unlikely(tsk->pid == 1))

716 panic("Attempted to kill init!");

717 if (tsk->io_context)

718 exit_io_context();

719 tsk->flags |= PF_EXITING;

720 del_timer_sync(&tsk->real_timer);

721

722 if (unlikely(in_atomic()))

723 printk(KERN_INFO "note: %s[%d] exited with preempt_count %d\n",

724 current->comm, current->pid,

725 preempt_count());

-----------------------------------------------------------------------

Line 707

The parameter code comprises the exit code that the process returns to its parent.

Lines 711716

Verify against unlikely, but possible, invalid circumstances. These include the following:

Making sure we are not inside an interrupt handler. Ensure we are not the idle task (PID0=0) or the init task (PID=1). Note that the only time the init process is killed is upon system shutdown.

Line 719

Here, we set PF_EXITING in the flags field of the processes' task struct. This indicates that the process is shutting down. For example, this is used when creating interval timers for a given process. The process flags are checked to see if this flag is set and thus helps prevent wasteful processing.

-----------------------------------------------------------------------

kernel/exit.c

...

727 profile_exit_task(tsk);

728

729 if (unlikely(current->ptrace & PT_TRACE_EXIT)) {

730 current->ptrace_message = code;

731 ptrace_notify((PTRACE_EVENT_EXIT << 8) | SIGTRAP);

732 }

733

734 acct_process(code);

735 __exit_mm(tsk);

736

737 exit_sem(tsk);

738 __exit_files(tsk);

739 __exit_fs(tsk);

740 exit_namespace(tsk);

741 exit_thread();

...

-----------------------------------------------------------------------

Lines 729732

If the process is being ptraced and the PT_TRACE_EXIT flag is set, we pass the exit code and notify the parent process.

Lines 735742

These lines comprise the cleaning up and reclaiming of resources that the task has been using and will no longer need. __exit_mm() frees the memory allocated to the process and releases the mm_struct associated with this process. exit_sem() disassociates the task from any IPC semaphores. __exit_files() releases any files the task allocated and decrements the file descriptor counts. __exit_fs() releases all file system data.

-----------------------------------------------------------------------

kernel/exit.c

...

744 if (tsk->leader)

745 disassociate_ctty(1);

746

747 module_put(tsk->thread_info->exec_domain->module);

748 if (tsk->binfmt)

749 module_put(tsk->binfmt->module);

...

-----------------------------------------------------------------------

Lines 744745

If the process is a session leader, it is expected to have a controlling terminal or tty. This function disassociates the task leader from its controlling tty.

Lines 747749

In these blocks, we decrement the reference counts for the module:

-----------------------------------------------------------------------

kernel/exit.c

...

751 tsk->exit_code = code;

752 exit_notify(tsk);

753

754 if (tsk->exit_signal == -1 && tsk->ptrace == 0)

755 release_task(tsk);

756

757 schedule();

758 BUG();

759 /* Avoid "noreturn function does return". */

760 for (;;) ;

761 }

...

-----------------------------------------------------------------------

Line 751

Set the task's exit code in the task_struct field exit_code.

Line 752

Send the SIGCHLD signal to parent and set the task state to TASK_ZOMBIE. exit_notify() notifies the relations of the impending task's death. The parent is informed of the exit code while the task's children have their parent set to the init process. The only exception to this is if another existing process exists within the same process group: In this case, the existing process is used as a surrogate parent.

Line 754

If exit_signal is -1 (indicating an error) and the process is not being ptraced, the kernel calls on the scheduler to release the process descriptor of this task and to reclaim its timeslice.

Line 757

Yield the processor to a new process. As we see in

Chapter 7, the call to schedule() will not return. All code past this point catches impossible circumstances or avoids compiler warnings.

3.5.3. Parent Notification and sys_wait4()

When a process is terminated, its parent is notified. Prior to this, the process is in a zombie state where all its resources have been returned to the kernel, but the process descriptor remains. The parent task (for example, the Bash shell) receives the signal SIGCHLD that the kernel sends to it when the child process terminates. In the example, the shell calls wait() when it wants to be notified. A parent process can ignore the signal by not implementing an interrupt handler and can instead choose to call wait() (or waitpid()) at any point.

The wait family of functions serves two general roles:

Our parent program can choose to call one of the four functions in the wait family:

pid_t wait(int *status) pid_t waitpid(pid_t pid, int *status, int options) pid_t wait3(int *status, int options, struct rusage *rusage) pid_t wait4(pid_t pid, int *status, int options, struct rusage *rusage)

Each function will in turn call sys_wait4(), which is where the bulk of the notification occurs.

A process that calls one of the wait functions is blocked until one of its children terminates or returns immediately if the child has terminated (or if the parent is childless). The sys_wait4() function shows us how the kernel manages this notification:

-----------------------------------------------------------------------

kernel/exit.c

1031 asmlinkage long sys_wait4(pid_t pid,unsigned int * stat_addr,

int options, struct rusage * ru)

1032 {

1033 DECLARE_WAITQUEUE(wait, current);

1034 struct task_struct *tsk;

1035 int flag, retval;

1036

1037 if (options & ~(WNOHANG|WUNTRACED|__WNOTHREAD|__WCLONE|__WALL))

1038 return -EINVAL;

1039

1040 add_wait_queue(¤t->wait_chldexit,&wait);

1041 repeat:

1042 flag = 0;

1043 current->state = TASK_INTERRUPTIBLE;

1044 read_lock(&tasklist_lock);

...

-----------------------------------------------------------------------

Line 1031

The parameters include the PID of the target process, the address in which the exit status of the child should be placed, flags for sys_wait4(), and the address in which the resource usage information of the child should be placed.

Lines 1033 and 1040

Declare a wait queue and add the process to it. (This is covered in more detail in the "

Wait Queues" section.)

Line 10371038

This code mostly checks for error conditions. The function returns a failure code if the system call is passed options that are invalid. In this case, the error EINVAL is returned.

Line 1042

The flag variable is set to 0 as an initial value. This variable is changed once the pid argument is found to match one of the calling task's children.

Line 1043

This code is where the calling process is set to blocking. The state of the task is moved from TASK_RUNNING to TASK_INTERRUPTIBLE.

-----------------------------------------------------------------------

kernel/exit.c

...

1045 tsk = current;

1046 do {

1047 struct task_struct *p;

1048 struct list_head *_p;

1049 int ret;

1050

1051 list_for_each(_p,&tsk->children) {

1052 p = list_entry(_p,struct task_struct,sibling);

1053

1054 ret = eligible_child(pid, options, p);

1055 if (!ret)

1056 continue;

1057 flag = 1;

1058 switch (p->state) {

1059 case TASK_STOPPED:

1060 if (!(options & WUNTRACED) &&

1061 !(p->ptrace & PT_PTRACED))

1062 continue;

1063 retval = wait_task_stopped(p, ret == 2,

1064 stat_addr, ru);

1065 if (retval != 0) /* He released the lock. */

1066 goto end_wait4;

1067 break;

1068 case TASK_ZOMBIE:

...

1072 if (ret == 2)

1073 continue;

1074 retval = wait_task_zombie(p, stat_addr, ru);

1075 if (retval != 0) /* He released the lock. */

1076 goto end_wait4;

1077 break;

1078 }

1079 }

...

1091 tsk = next_thread(tsk);

1092 if (tsk->signal != current->signal)

1093 BUG();

1094 } while (tsk != current);

...

-----------------------------------------------------------------------

Lines 1046 and 1094

The do while loop iterates once through the loop while looking at itself, then continues while looking at other tasks.

Line 1051

Repeat the action on every process in the task's children list. Remember that this is the parent process that is waiting on its children's exit. The process is currently in TASK_INTERRUPTIBLE and iterating over its children list.

Line 1054

Determine if the pid parameter passed is unreasonable.

Line 10581079

Check the state of each of the task's children. Actions are performed only if a child is stopped or if it is a zombie. If a task is sleeping, ready, or running (the remaining states), nothing is done. If a child is in TASK_STOPPED and the UNtrACED option has been used (which means that the task wasn't stopped because of a process trace), we verify if the status of that child has been reported and return the child's information. If a child is in TASK_ZOMBIE, it is reaped.

-----------------------------------------------------------------------

kernel/exit.c

...

1106 retval = -ECHILD;

1107 end_wait4:

1108 current->state = TASK_RUNNING;

1109 remove_wait_queue(¤t->wait_chldexit,&wait);

1110 return retval;

1111 }

-----------------------------------------------------------------------

Line 1106

If we have gotten to this point, the PID specified by the parameter is not a child of the calling process. ECHILD is the error used to notify us of this event.

Line 11071111

At this point, the children list has been processed, and any children that needed to be reaped have been reaped. The parent's block is removed and its state is set to TASK_RUNNING once again. Finally, the wait queue is removed.

At this point, you should be familiar with the various stages that a process goes through during its lifecycle, the kernel functions that make all this happen, and the structures the kernel uses to keep track of all this information. Now, we look at how the scheduler manipulates and manages processes to create the effect of a multithreaded system. We also see in more detail how processes go from one state to another.

3.6. Keeping Track of Processes: Basic Scheduler Construction

Until this point, we kept the concepts of the states and the transitions process-centric. We have not spoken about how the transition is managed, nor have we spoken about the kernel infrastructure, which performs the running and stopping of processes. The scheduler handles all these details. Having finished the exploration of the process lifecycle, we now introduce the basics of the scheduler and how it interacts with the do_fork() function during process creation.

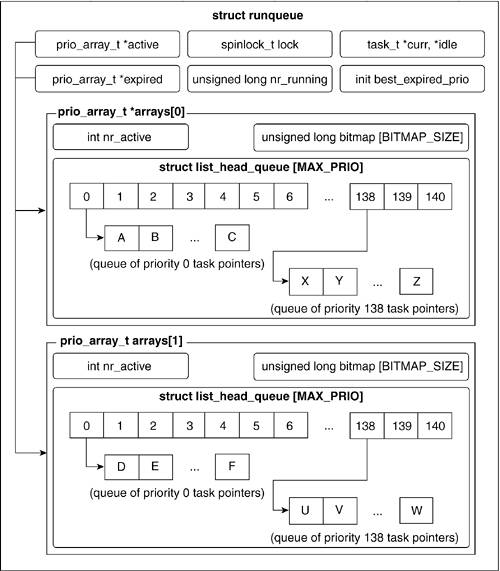

3.6.1. Basic Structure

The scheduler operates on a structure called a run queue. There is one run queue per CPU on the system. The core data structures within a run queue are two priority-ordered arrays. One of these contains active tasks and the other contains expired tasks. In general, an active task runs for a set amount of time, the length of its timeslice or quantum, and is then inserted into the expired array to wait for more CPU time. When the active array is empty, the scheduler swaps the two arrays by exchanging the active and expired pointers. The scheduler then begins executing tasks on the new active array.

Figure 3.12 illustrates the priority arrays within the run queue. The definition of the priority array structure is as follows:

-----------------------------------------------------------------------

kernel/sched.c

192 struct prio_array {

193 int nr_active;

194 unsigned long bitmap[BITMAP_SIZE];

195 struct list_head queue[MAX_PRIO];

196 };

-----------------------------------------------------------------------

The fields of the prio_array struct are as follows:

nr_active.

A counter that keeps track of the number of tasks held in the priority array.

bitmap.

This keeps track of the priorities within the array. The actual length of bitmap depends on the size of unsigned longs on the system. It will always be enough to store MAX_PRIO bits, but it could be longer.

queue.

An array that stores lists of tasks. Each list holds tasks of a certain priority. Thus, queue[0] holds a list of all tasks of priority 0, queue[1] holds a list of all tasks of priority 1, and so on.

With this basic understanding of how a run queue is organized, we can now embark on following a task through the scheduler on a single CPU system.

3.6.2. Waking Up from Waiting or Activation

Recall that when a process calls fork(), a new process is made. As previously mentioned, the process calling fork() is called the parent, and the new process is called the child. The newly created process needs to be scheduled for access to the CPU. This occurs via the do_fork() function.

Two important lines deal with the scheduler in do_fork() related to waking up processes. copy_process(), called on line 1184 of linux/kernel/fork.c, calls the function sched_fork(), which initializes the process for an impending insertion into the scheduler's run queue. wake_up_forked_process(), called on line 1222 of linux/kernel/fork.c, takes the initialized process and inserts it into the run queue. Initialization and insertion have been separated to allow for the new process to be killed, or otherwise terminated, before being scheduled. The process will only be scheduled if it is created, initialized successfully, and has no pending signals.

3.6.2.1. sched_fork(): Scheduler Initialization for Newly Forked Process

The sched_fork()function performs the infrastructure setup the scheduler requires for a newly forked process:

-----------------------------------------------------------------------

kernel/sched.c

719 void sched_fork(task_t *p)

720 {

721 /*

722 * We mark the process as running here, but have not actually

723 * inserted it onto the runqueue yet. This guarantees that

724 * nobody will actually run it, and a signal or other external

725 * event cannot wake it up and insert it on the runqueue either.

726 */

727 p->state = TASK_RUNNING;

728 INIT_LIST_HEAD(&p->run_list);

729 p->array = NULL;

730 spin_lock_init(&p->switch_lock);

-----------------------------------------------------------------------

Line 727

The process is marked as running by setting the state attribute in the task structure to TASK_RUNNING to ensure that no event can insert it on the run queue and run the process before do_fork() and copy_process() have verified that the process was created properly. When that verification passes, do_fork() adds it to the run queue via wake_up_forked_process().

Line 728730

The process' run_list field is initialized. When the process is activated, its run_list field is linked into the queue structure of a priority array in the run queue. The process' array field is set to NULL to represent that it is not part of either priority array on a run queue. The next block of sched_fork(), lines 731 to 739, deals with kernel preemption. (Refer to Chapter 7 for more information on preemption.)

-----------------------------------------------------------------------

kernel/sched.c

740 /*

741 * Share the timeslice between parent and child, thus the

742 * total amount of pending timeslices in the system doesn't change,

743 * resulting in more scheduling fairness.

744 */

745 local_irq_disable();

746 p->time_slice = (current->time_slice + 1) >> 1;

747 /*

748 * The remainder of the first timeslice might be recovered by

749 * the parent if the child exits early enough.

750 */

751 p->first_time_slice = 1;

752 current->time_slice >>= 1;

753 p->timestamp = sched_clock();

754 if (!current->time_slice) {

755 /*

756 * This case is rare, it happens when the parent has only

757 * a single jiffy left from its timeslice. Taking the

758 * runqueue lock is not a problem.

759 */

760 current->time_slice = 1;

761 preempt_disable();

762 scheduler_tick(0, 0);

763 local_irq_enable();

764 preempt_enable();

765 } else

766 local_irq_enable();

767 }

-----------------------------------------------------------------------

Lines 740753

After disabling local interrupts, we divide the parent's timeslice between the parent and the child using the shift operator. The new process' first timeslice is set to 1 because it hasn't been run yet and its timestamp is initialized to the current time in nanosec units.

Lines 754767

If the parent's timeslice is 1, the division results in the parent having 0 time left to run. Because the parent was the current process on the scheduler, we need the scheduler to choose a new process. This is done by calling scheduler_tick() (on line 762). Preemption is disabled to ensure that the scheduler chooses a new current process without being interrupted. Once all this is done, we enable preemption and restore local interrupts.

At this point, the newly created process has had its scheduler-specific variables initialized and has been given an initial timeslice of half the remaining timeslice of its parent. By forcing a process to sacrifice a portion of the CPU time it's been allocated and giving that time to its child, the kernel prevents processes from seizing large chunks of processor time. If processes were given a set amount of time, a malicious process could spawn many children and quickly become a CPU hog.

After a process has been successfully initialized, and that initialization verified, do_fork() calls wake_up_forked_process():

-----------------------------------------------------------------------

kernel/sched.c

922 /*

923 * wake_up_forked_process - wake up a freshly forked process.

924 *

925 * This function will do some initial scheduler statistics housekeeping

926 * that must be done for every newly created process.

927 */

928 void fastcall wake_up_forked_process(task_t * p)

929 {

930 unsigned long flags;

931 runqueue_t *rq = task_rq_lock(current, &flags);

932

933 BUG_ON(p->state != TASK_RUNNING);

934

935 /*

936 * We decrease the sleep average of forking parents

937 * and children as well, to keep max-interactive tasks

938 * from forking tasks that are max-interactive.

939 */

940 current->sleep_avg = JIFFIES_TO_NS(CURRENT_BONUS(current) *

941 PARENT_PENALTY / 100 * MAX_SLEEP_AVG / MAX_BONUS);

942

943 p->sleep_avg = JIFFIES_TO_NS(CURRENT_BONUS(p) *

944 CHILD_PENALTY / 100 * MAX_SLEEP_AVG / MAX_BONUS);

945

946 p->interactive_credit = 0;

947

948 p->prio = effective_prio(p);

949 set_task_cpu(p, smp_processor_id());

950

951 if (unlikely(!current->array))

952 __activate_task(p, rq);

953 else {

954 p->prio = current->prio;

955 list_add_tail(&p->run_list, ¤t->run_list);

956 p->array = current->array;

957 p->array->nr_active++;

958 rq->nr_running++;

959 }

960 task_rq_unlock(rq, &flags);

961 }

-----------------------------------------------------------------------

Lines 930934

The first thing that the scheduler does is lock the run queue structure. Any modifications to the run queue must be made with the lock held. We also throw a bug notice if the process isn't marked as TASK_RUNNING, which it should be thanks to the initialization in sched_fork() (see Line 727 in kernel/sched.c shown previously).

Lines 940947

The scheduler calculates the sleep average of the parent and child processes. The sleep average is the value of how much time a process spends sleeping compared to how much time it spends running. It is incremented by the amount of time the process slept, and it is decremented on each timer tick while it's running. An interactive, or I/O bound, process spends most of its time waiting for input and normally has a high sleep average. A non-interactive, or CPU-bound, process spends most of its time using the CPU instead of waiting for I/O and has a low sleep average. Because users want to see results of their input, like keyboard strokes or mouse movements, interactive processes are given more scheduling advantages than non-interactive processes. Specifically, the scheduler reinserts an interactive process into the active priority array after its timeslice expires. To prevent an interactive process from creating a non-interactive child process and thereby seizing a disproportionate share of the CPU, these formulas are used to lower the parent and child sleep averages. If the newly forked process is interactive, it soon sleeps enough to regain any scheduling advantages it might have lost.

Line 948

The function effective_prio() modifies the process' static priority. It returns a priority between 100 and 139 (MAX_RT_PRIO to MAX__PRIO-1). The process' static priority can be modified by up to 5 in either direction based on its previous CPU usage and time spent sleeping, but it always remains in this range. From the command line, we talk about the nice value of a process, which can range from +19 to -20 (lowest to highest priority). A nice priority of 0 corresponds to a static priority of 120.

Line 749

The process has its CPU attribute set to the current CPU.

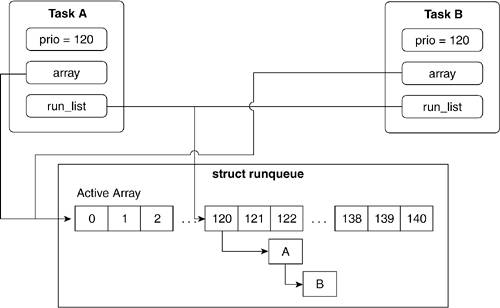

Lines 951960

The overview of this code block is that the new process, or child, copies the scheduling information from its parent, which is current, and then inserts itself into the run queue in the appropriate place. We have finished our modifications of the run queue, so we unlock it. The following paragraph and Figure 3.13 discuss this process in more detail.

The pointer array points to a priority array in the run queue. If the current process isn't pointing to a priority array, it means that the current process has finished or is asleep. In that case, the current process' runlist field is not in the queue of the run queue's priority array, which means that the list_add_tail() operation (on line 955) would fail. Instead, we insert the newly created process using __activate_task(), which adds the new process to the queue without referring to its parent.

In the normal case, when the current process is waiting for CPU time on a run queue, the process is added to the queue residing at slot p->prio in the priority array. The array that the process was added to has its process counter, nr_active, incremented and the run queue has its process counter, nr_running, incremented. Finally, we unlock the run queue lock.

The case where the current process doesn't point to a priority array on the run queue is useful in seeing how the scheduler manages the run queue and priority array attributes.

-----------------------------------------------------------------------

kernel/sched.c

366 static inline void __activate_task(task_t *p, runqueue_t *rq)

367 {

368 enqueue_task(p, rq->active);

369 rq->nr_running++;

370 }

-----------------------------------------------------------------------

__activate_task() places the given process p on to the active priority array on the run queue rq and increments the run queue's nr_running field, which is the counter for total number of processes that are on the run queue.

-----------------------------------------------------------------------

kernel/sched.c

311 static void enqueue_task(struct task_struct *p, prio_array_t *array)

312 {

313 list_add_tail(&p->run_list, array->queue + p->prio);

314 __set_bit(p->prio, array->bitmap);

315 array->nr_active++;

316 p->array = array;

317 }

-----------------------------------------------------------------------

Lines 311312

enqueue_task() takes a process p and places it on priority array array, while initializing aspects of the priority array.

Line 313

The process' run_list is added to the tail of the queue located at p->prio in the priority array.

Line 314

The priority array's bitmap at priority p->prio is set so when the scheduler runs, it can see that there is a process to run at priority p->prio.

Line 315

The priority array's process counter is incremented to reflect the addition of the new process.

Line 316

The process' array pointer is set to the priority array to which it was just added.

To recap, the act of adding a newly forked process is fairly straightforward, even though the code can be confusing because of similar names throughout the scheduler. A process is placed at the end of a list in a run queue's priority array at the slot specified by the process' priority. The process then records the location of the priority array and the list it's located in within its structure.

|

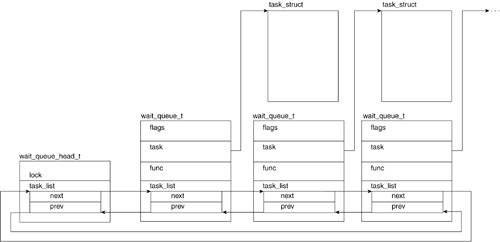

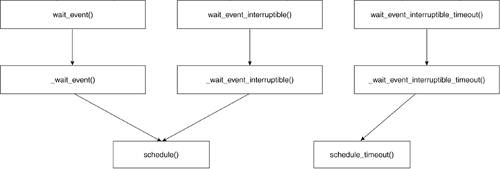

3.7. Wait Queues

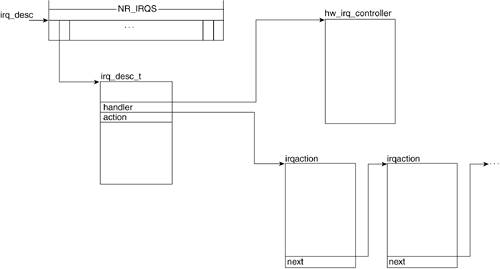

We discussed the process transition between the states of TASK_RUNNING and TASK_INTERRUPTIBLE or TASK_UNINTERRUPTIBLE. Now, we look at another structure that's involved in this transition. When a process is waiting on an external event to occur, it is removed from the run queue and placed on a wait queue. Wait queues are doubly linked lists of wait_queue_t structures. The wait_queue_t structure is set up to hold all the information required to keep track of a waiting task. All tasks waiting on a particular external event are placed in a wait queue. The tasks on a given wait queue are woken up, at which point the tasks verify the condition they are waiting for and either resume sleep or remove themselves from the wait queue and set themselves back to TASK_RUNNING. You might recall that sys_wait4() system calls use wait queues when a parent requests status of its forked child. Note that a task waiting for an external event (and therefore is no longer on the run queue) is either in the TASK_INTERRUPTIBLE or TASK_UNINTERRUPTIBLE states.

A wait queue is a doubly linked list of wait_queue_t structures that hold pointers to the process task structures of the processes that are blocking. Each list is headed up by a wait_queue_head_t structure, which marks the head of the list and holds the spinlock to the list to prevent wait_queue_t additional race conditions. Figure 3.14 illustrates wait queue implementation. We now look at the wait_queue_t and the wait_queue_head_t structures:

-----------------------------------------------------------------------

include/linux/wait.h

19 typedef struct __wait_queue wait_queue_t;

...

23 struct __wait_queue {

24 unsigned int flags;

25 #define WQ_FLAG_EXCLUSIVE 0x01

26 struct task_struct * task;

27 wait_queue_func_t func;

28 struct list_head task_list;

29 };

30

31 struct __wait_queue_head {

32 spinlock_t lock;

33 struct list_head task_list;

34 };

35 typedef struct __wait_queue_head wait_queue_head_t;

-----------------------------------------------------------------------

The wait_queue_t structure is comprised of the following fields:

flags.

Can hold the value WQ_FLAG_EXCLUSIVE, which is set to 1, or ~WQ_FLAG_EXCLUSIVE, which would be 0. The WQ_FLAG_EXCLUSIVE flag marks this process as an exclusive process. Exclusive and non-exclusive processes are discussed in the next section.

task.