Mounting Directories Inside UML

There are two ways to mount a host directory in UML: hostfs and humfs.

hostfs is the older and more limited method, but it is also the more convenient one.

In both cases, the result is a virtual filesystem that lives inside the kernel.

If you look at /proc/filesystems, you will see something like this:

host% cat /proc/filesystems

nodev sysfs

nodev rootfs

nodev bdev

nodev proc

nodev sockfs

nodev binfmt_misc

nodev debugfs

nodev usbfs

nodev pipefs

nodev futexfs

nodev tmpfs

nodev eventpollfs

nodev devpts

ext2

nodev ramfs

nodev hugetlbfs

iso9660

nodev mqueue

nodev selinuxfs

ext3

nodev rpc_pipefs

nodev autofs

Everything marked nodev is a virtual filesystem and belongs to the kernel.

Kernel variables and data structures become visible as files.

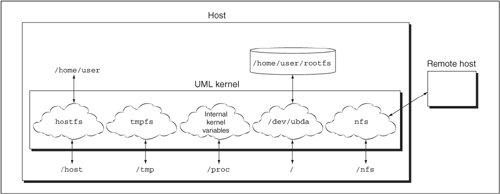

The figure shows the difference between the various types of filesystems.

hostfs and humfs are part of the kernel.

Conceptually, they are closer to a network filesystem such as NFS.

The data stored in them is transparent and accessible from outside.

With a network filesystem, file accesses are translated into network requests to the server, which sends data and status back. With hostfs and humfs, file accesses are translated into file accesses on the host. You can think of this as a one-to-one translation of requests: a read, write, or mkdir within UML translates directly into a read, write, or mkdir to the host.

This is actually not true in the most literal sense. An operation such as mkdir within one of these filesystems must create a directory on the host; therefore, it must translate into a mkdir there, but won't necessarily do so immediately. Because of caching within the filesystem, the operation may not happen until a long time later. Operations such as a read or write may not translate into a host read or write at all. They may, in fact, translate into an mmap followed by directly reading or writing memory. And in any case, the lengths of the read and write operations will certainly change when they reach the host. Linux filesystem operations typically have page granularity: the minimum I/O size is a machine page, 4K on most extant systems. For example, a sequence of 1-byte reads will be converted into a single page-length read to the host followed by simply passing out bytes one at a time from the buffer into which that page was read.

So, while it is conceptually true that hostfs and humfs operations correspond one-to-one to host operations, the reality is somewhat different. This difference will become relevant later in this chapter when we look at simultaneous access to data from a UML and the host, or from two UMLs.
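The page-granularity effect can be illustrated with a small Python model. This is only a sketch of the idea, not the UML page cache: the class name, the single-page buffer, and the 4096-byte page size are assumptions made for the example.

```python
import io

PAGE_SIZE = 4096  # assumed page size ("4K on most extant systems")


class PageGranularReader:
    """Toy model of a reader that fetches whole pages from a backing file."""

    def __init__(self, backing):
        self.backing = backing      # any seekable binary file object
        self.page_index = None      # index of the page currently buffered
        self.page = b""             # contents of that page
        self.backing_reads = 0      # how many reads reached the backing file
        self.offset = 0             # current position as seen by the caller

    def read(self, length):
        """Return up to `length` bytes, fetching a page only on a buffer miss."""
        out = bytearray()
        while length > 0:
            index, within = divmod(self.offset, PAGE_SIZE)
            if index != self.page_index:
                # Buffer miss: one page-length read goes to the backing file.
                self.backing.seek(index * PAGE_SIZE)
                self.page = self.backing.read(PAGE_SIZE)
                self.page_index = index
                self.backing_reads += 1
            chunk = self.page[within:within + length]
            if not chunk:
                break  # end of file
            out += chunk
            self.offset += len(chunk)
            length -= len(chunk)
        return bytes(out)
```

Reading a hundred bytes one at a time through this object increments backing_reads only once, because every byte after the first is served from the buffered page.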

hostfs

hostfs is the older and simpler of the two ways to mount a host directory as a UML directory. It uses the most obvious mapping of UML file operations to host operations in order to provide access to the host files. This is complicated only by some technical aspects, such as making use of the UML page cache. This simplicity results in a number of limitations, which we will see shortly and which I will use to motivate humfs.

So, let's get a UML instance and make a hostfs mount inside it:

UML# mount none /mnt -t hostfs

Now we have a new filesystem mounted on /mnt:

UML# mount

/dev/ubd0 on / type ext3 (rw)

proc on /proc type proc (rw)

sysfs on /sys type sysfs (rw)

devpts on /dev/pts type devpts (rw,gid=4,mode=620)

shm on /dev/shm type tmpfs (rw)

none on /mnt type hostfs (rw)

Its contents show that it looks a lot like the host's root filesystem:

UML# ls /mnt

bin etc lib media opt sbin sys usr

boot home lib64 misc proc selinux tmp var

dev initrd lost+found mnt root srv tools

You can do the same ls on the host's / to verify this. Basically, we have mounted the host's root on the UML instance's /mnt, creating a completely normal Linux filesystem within the UML. For getting access to files on the host within UML, this is very convenient. You can do anything within this filesystem that you can do with a disk-based filesystem, with some restrictions that we will talk about later.

By default, when you make a hostfs mount, you get the host's root filesystem. This isn't always desirable, so there is an option to mount a different host directory:

UML# mkdir /mnt-home

UML# mount none /mnt-home/ -t hostfs -o /home

UML# ls /mnt-home/

jdike lost+found

The -o option specifies the host directory to mount. From that mount point, it is impossible to access any files outside that directory. In our case, the /mnt-home mount point gives us access to the host's /home, but, from there, we can't access anything outside of that. The obvious trick of using .. to try to access files outside of /home won't work because it's the UML that will interpret the .., not the host. Trying to "dotdot" your way out of this will get you to the UML instance's /, not the host's /.

Using -o is at the option of the user within the instance. Many times, the host administrator wants all hostfs mounts confined to a host subdirectory and makes it impossible to access the host's /. There is a command-line option to UML to allow this, hostfs=/path/to/UML/jail. With this enabled, hostfs mounts within the UML will be restricted to the specified host subdirectory. If the UML user does a mount specifying a mount path with -o, that path will be appended to the directory on the command line. So, -o can be used to mount subdirectories of whatever directory the UML's hostfs has been confined to, but can't be used to escape it.
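The way the hostfs= directory and the -o directory combine can be modeled in a few lines of Python. This is an illustrative sketch rather than the hostfs code: the function names are hypothetical, and rejecting a path that climbs above its root is a choice made for this model, not a statement about how the real implementation treats such input.

```python
import posixpath


def _below(path):
    """Normalize a relative path and refuse any that climbs above its root."""
    relative = posixpath.normpath(path.lstrip("/") or ".")
    if relative == ".." or relative.startswith("../"):
        raise ValueError(f"{path!r} escapes its root")
    return relative


def host_path(jail, mount_option, uml_path):
    """Map a path inside a hostfs mount to the host path it refers to.

    jail         -- directory given with hostfs=... ("/" when not confined)
    mount_option -- directory given with -o at mount time ("" if none)
    uml_path     -- path relative to the mount point inside UML
    """
    # The -o directory is appended to the jail, never substituted for it.
    root = posixpath.join(jail, _below(mount_option))
    # UML resolves ".." itself, so only plain downward paths reach the host.
    return posixpath.normpath(posixpath.join(root, _below(uml_path)))
```

With jail set to /path/to/UML/jail and -o /home, the UML path jdike maps to /path/to/UML/jail/home/jdike, which is the "appended, not replaced" behavior described above.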

Now, let's create a file within the host mount:

UML# touch /mnt/tmp/uml-file

UML# ls -l /mnt/tmp/uml-file

-rw-r--r-- 1 500 500 0 Jun 10 13:02 /mnt/tmp/uml-file

The ownerships on this new file are somewhat unexpected. We are root inside the UML, and thus expect that any new files we create will be owned by root. However, we are creating files on the host, and the host is responsible for the file, including its ownerships. The UML instance is owned by user ID (UID) 500, so from its point of view, a process owned by UID 500 created a file in /tmp. It's perfectly natural that it would end up being owned by that UID. The host doesn't know or care that the process contains another Linux kernel that would like that file to be owned by root.

This seems perfectly reasonable and innocent, but it has a number of consequences that make hostfs unusable for a number of purposes. To demonstrate this, let's become a different, unprivileged user inside UML and see how hostfs behaves:

UML# su user

UML% cd /mnt/tmp

UML% echo foo > x

UML% ls -l x

-rw-r--r-- 1 500 500 4 Jun 10 14:31 x

UML% echo bar >> x

sh: x: Permission denied

UML% rm x

rm: remove write-protected regular file `x'? y

rm: cannot remove `x': Operation not permitted

UML% chmod 777 x

chmod: changing permissions of `x': Operation not permitted

Here we see a number of unexpected permission problems arising from the ownership of the new file. We created a file in the host's /tmp and found that we couldn't subsequently append to it, remove it, or change its permissions.

It is created with the owner UID 500 on the host and is writable by that UID. However, I became user, with UID 1001, inside the UML instance, so my attempts to modify the file don't even make it past the UML's permission checking. When the file was created on the host, it was given its ownership and permissions by the host. hostfs shows those permissions, rather than the ones the UML instance provided, because they are more "real."

The ownership and permissions are interpreted locally by the UML when seeing whether a file operation should succeed. The fact that the file ownerships are set by the host to something different from what the UML expects can cause files to be unmodifiable by their owner within UML.

This isn't a problem for the root user within UML because the superuser doesn't undergo the same permission checks as a normal user, so the permission checks occur on the host.

However, this issue does make it impossible for multiple users within the UML to use hostfs. In fact, only root within the UML can realistically use it. The only way for a normal UML user to use hostfs is for its UID to match the host UID that the UML is running as. So, if user within UML had UID 500 (matching the UML instance's UID on the host), the previous example would have been more successful.
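The UML-side half of this double check is the classic UNIX permission test, applied to the ownership and mode that the host reports. The following Python sketch models only the write check; the function name is hypothetical, and details such as directory permissions (which govern rm) are left out.

```python
import stat


def uml_allows_write(uid, gids, file_uid, file_gid, mode):
    """Classic UNIX write check, as applied inside UML.

    file_uid, file_gid, and mode are the values reported by the host,
    since hostfs shows the host's metadata.
    """
    if uid == 0:
        return True  # the superuser skips the normal checks inside UML
    if uid == file_uid:
        return bool(mode & stat.S_IWUSR)
    if file_gid in gids:
        return bool(mode & stat.S_IWGRP)
    return bool(mode & stat.S_IWOTH)
```

For the file x above (owner 500, group 500, mode 0644), the check fails for UID 1001 and succeeds for UID 500, which matches the behavior in the transcript.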

Let's look at another problem, in which root within the UML doesn't have permission to do some things that it should be able to do:

UML# mknod ubda b 98 0

mknod: `ubda': Operation not permitted

Here, creating a device node for ubda doesn't work, even for root. Again, the reason is that the operation is forwarded to the host, where it is attempted as the nonroot UML user, and fails because this operation requires root privileges. You will see similar problems with creating a couple of other types of files.

If you experiment long enough with hostfs, you will discover other problems, such as accessing UNIX sockets. If the hostfs mount contains sockets, they were created by processes on the host. When one is opened on the host, it can be used to communicate with the process that created it. These sockets are also visible within a hostfs mount, but a UML process opening one will fail to communicate with anything. The UML kernel, not the host kernel, will interpret the open request and attempt to find the process that created it. Within the UML kernel, this will fail because there is no such process.

Creating a directory on the host with a UML root filesystem in it, and booting from it, is also problematic. The filesystem, by and large, should be owned by root, and it won't be. All of the files are owned by whoever created them on the host. At this writing, there is a kludge in the hostfs code that changes (internally to the UML kernel) the ownerships of these files to root when the hostfs filesystem is the UML root filesystem. This makes booting from hostfs work, more or less, but all the problems described above are still there. Other kernel developers have objected to this ownership changing, and this kludge likely won't be available much longer. When this "feature" does disappear, booting from a hostfs root filesystem likely won't work anymore.

I've spent a good amount of time describing the deficiencies of hostfs, but I'd like to point out that, for a common use case, hostfs is exactly what you want. If you have a private UML instance, are logged in to it as root, and want access to your own files on the host, hostfs is perfect. The filesystem semantics will be exactly what you expect, and no prior host setup is needed. Just run the hostfs mount command, and you have all of your files available.

Most of the problems with hostfs that I've described stem from the fact that all hostfs file operations go through both the UML's and the host's permission checking. This is because both systems look at the same data, the file metadata on the host, in order to decide what's allowed and what's not.

UNIX domain sockets and named pipes are sort of a reflection of a process within the filesystem: there is supposed to be a process at the other end. When the filesystem (including the sockets) is exported to another system, whether a UML instance with a hostfs mount or another system with an NFS mount, the process isn't present on the other system. In this case, the file doesn't have the meaning it does on its home system.

humfs

We can fix these problems by making sure we see, inside UML, distinct file ownerships, permissions, and types from the host. To achieve this, UML can store these in a separate place, freeing itself from the host's permission checks. This is what humfs does. The actual file data is stored in exactly the same way that hostfs does, in a directory hierarchy on the host. However, permissions information is stored separately, by default, in a parallel directory hierarchy.

For example, here are the data and metadata for a file stored in this way:

host% ls -l data/usr/bin/ls

-rwxr-x--x 1 jdike jdike 201642 May 1 10:01 data/usr/bin/ls

host% ls -l file_metadata/usr/bin/ls

-rw-r--r-- 1 jdike jdike 8 Jun 10 18:04 file_metadata/usr/bin/ls

host% cat file_metadata/usr/bin/ls

493 0 0

The actual ls binary is stored in data/usr/bin/ls, while its ownership and permissions are stored in file_metadata/usr/bin/ls. Notice that the permissions on the binary are wide open for the file's owner. This, in effect, disables permission checking on the host, allowing UML's ideas about what's allowed and what's not to prevail.

Next, notice the contents of the metadata file. For a normal file, such as /usr/bin/ls, the permissions and ownerships are stored here. In the last line of the output, 493 is the decimal equivalent of 0755, and the zeros are UID root and group ID (GID) root.

We can see this by looking at this file inside UML:

UML# ls -l usr/bin/ls

-rwxr-xr-x 1 root root 201642 May 1 10:01 usr/bin/ls

The humfs filesystem has taken the file size and date from data/usr/bin/ls and merged the ownership and permission information from file_metadata/usr/bin/ls.
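Decoding the metadata line is simple enough to sketch in Python. The helper names here are hypothetical, and the format is assumed to be exactly the three decimal fields shown above.

```python
import stat
from typing import NamedTuple


class FileMetadata(NamedTuple):
    mode: int
    uid: int
    gid: int


def parse_file_metadata(text):
    """Parse a 'mode uid gid' line such as '493 0 0' (all decimal)."""
    mode, uid, gid = (int(field) for field in text.split()[:3])
    return FileMetadata(mode, uid, gid)


def ls_style(meta):
    """Render the permission bits the way ls -l shows a regular file."""
    return stat.filemode(stat.S_IFREG | meta.mode)
```

Parsing "493 0 0" gives mode 0755 with UID 0 and GID 0, and ls_style renders it as -rwxr-xr-x, the same string that ls -l shows inside UML.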

By storing this metadata as the contents of a file on the host, UML may modify it in any way it sees fit. We can go through the list of hostfs problems I described earlier and see why this approach fixes them all.

In the case of a new file having unexpected ownerships, we can see that this just doesn't happen in humfs. The data file's ownership will, in fact, be determined by the UID and GID of the UML process, but this doesn't matter since the ownerships you will see inside UML will be determined by the contents of the file_metadata file.

So, you will be able to create a file on a humfs mount and do anything with it, such as append to it, remove it, or change permissions.

Now, let's try to make a block device:

UML# mknod ubda b 98 0

UML# ls -l ubda

brw-r--r-- 2 root root 98, 0 Jun 10 18:46 ubda

This works, and it looks as we would expect. To see why, let's look at what occurred on the host:

host% ls -l data/tmp/ubda

-rwxrw-rw- 1 jdike jdike 0 Jun 10 18:46 data/tmp/ubda

host% ls -l file_metadata/tmp/ubda

-rw-r--r-- 1 jdike jdike 15 Jun 10 18:46 file_metadata/tmp/ubda

host% cat file_metadata/tmp/ubda

420 0 0 b 98 0

The file is empty, just a token to let the UML filesystem know a file is there. Almost all of the device's data is in the metadata file. The first three elements are the same permissions and ownership information that we saw earlier. The rest, which don't appear for normal files, describe the type of file, namely, a block device with major number 98 and minor number 0.

The host definitely won't recognize this as a block device, which is why this works. Creating a device requires root privileges, so hostfs can't create one unless the UML is run by root. Under humfs, creating a device is simply a matter of creating this new file with contents that describe the device.
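The longer metadata line for a device can be decoded the same way as the short one. The Python sketch below assumes the six-field layout shown above; only the type letter b appears in the example, so the c entry in the table is an assumption added for illustration, and the function names are hypothetical.

```python
import stat

# Type letters mapped to file-type bits. Only "b" appears in the example
# above; "c" is an assumption added for illustration.
TYPE_BITS = {"b": stat.S_IFBLK, "c": stat.S_IFCHR}


def parse_metadata(text):
    """Parse 'mode uid gid [type major minor]' into a dictionary."""
    fields = text.split()
    entry = {
        "mode": int(fields[0]),
        "uid": int(fields[1]),
        "gid": int(fields[2]),
        "type": stat.S_IFREG,   # regular file unless extra fields say otherwise
        "device": None,
    }
    if len(fields) >= 6:
        entry["type"] = TYPE_BITS[fields[3]]
        entry["device"] = (int(fields[4]), int(fields[5]))
    return entry


def describe(entry):
    """Return the ls -l style mode string for a parsed entry."""
    return stat.filemode(entry["type"] | entry["mode"])
```

For the line 420 0 0 b 98 0, this yields device numbers (98, 0) and the mode string brw-r--r--, matching the ls output inside UML. Nothing here touches a real device node, which is the point: the device exists only as text.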

It is apparent that the host socket and named pipe problem can't happen on this filesystem. Everything in this directory on the host is a normal file or directory. Host sockets and named pipes just don't exist. If a UML process makes a UNIX domain socket or a named pipe, that will cause the file's type to appear in the metadata file.

Along with these advantages, humfs has one disadvantage: It needs to be set up beforehand. You can't just take an arbitrary host subdirectory and mount it as a humfs filesystem. So, humfs is not really useful for quick access to your files on the host.

In order to set up humfs, you need to decide what's going to be in your humfs mount, create an empty directory, copy the files to the data subdirectory, and run a script that will create the metadata. As a quick example, here's how to create a humfs version of your host's /bin.

host% mkdir humfs-test

host% cd humfs-test

host# cp -a /bin data

host# perl ..humfsify.pl jdike jdike 100M

host% ls -al

total 24

drwxrw-rw- 5 jdike jdike 4096 Jun 10 19:40 .

drwxrw-r-- 16 jdike jdike 4096 Jun 10 19:40 ..

drwxrwxrwx 2 jdike jdike 4096 May 23 12:12 data

drwxr-xr-x 2 jdike jdike 4096 Jun 10 19:40 dir_metadata

drwxr-xr-x 2 jdike jdike 4096 Jun 10 19:40 file_metadata

-rw-r--r-- 1 jdike jdike 58 Jun 10 19:40 superblock

Two of the commands, the creation of the data subdirectory and the running of humfsify, have to be run as root. The copying of the directory needs to preserve file ownerships so that humfsify can record them in the metadata, and humfsify needs to change those ownerships so that you own all the files.

We now have two metadata directories, one for files and one for directories, and a superblock file. This file contains information about the filesystem as a whole, rather like the superblock on a disk-based filesystem:

host% cat superblock

version 2

metadata shadow_fs

used 6877184

total 104857600

This tells the UML filesystem what version of humfs it is dealing with, what metadata format is being used, how much disk space is used, and how much total disk space is available.

The shadow_fs metadata format describes the parallel metadata directories. There are some other possibilities, which will be described later in this section. The total disk space amount is simply the number given to humfsify. This number is used by the filesystem within UML to enforce the limit on disk consumption. Quotas on the host can be used, but they are not necessary.
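As a rough illustration of how the used and total fields could drive a space limit, here is a Python sketch that parses the superblock text shown above. The function names and the exact comparison are assumptions; the real filesystem may account for space differently.

```python
def parse_superblock(text):
    """Parse 'key value' lines from a humfs superblock into a dictionary."""
    fields = {}
    for line in text.splitlines():
        if not line.strip():
            continue
        key, value = line.split(None, 1)
        fields[key] = value.strip()
    # used and total are byte counts; version is a small integer.
    for key in ("version", "used", "total"):
        fields[key] = int(fields[key])
    return fields


def would_fit(superblock, extra_bytes):
    """True if writing extra_bytes more would stay within the total limit."""
    return superblock["used"] + extra_bytes <= superblock["total"]
```

With the values above, total minus used leaves 97980416 bytes, and 104857600 is exactly the 100M passed to humfsify (100 * 1024 * 1024).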

You may have noticed that it would be particularly easy to change the amount of disk space in this filesystem. Simply changing the total field by editing this file would seem to do the trick. At this writing, this ability is not implemented, but it is simple enough that it will be implemented at some point.

Now, having created the humfs directory, we can mount it within the UML:

UML# mkdir /mnt-test

UML# mount none /mnt-test -t humfs -o \

path=/home/jdike/linux/humfs-test

UML# cd /mnt-test

If you do an ls at this point, you'll see your copy of the host's /bin. Note that the mount command is very similar to the hostfs mount command. It's a virtual filesystem, so we're telling it to mount none since there is no block device associated with it, and we specify the filesystem type and the host mount point. In the case of humfs, specifying the host mount point is mandatory because it must be prepared ahead of time. humfs is passed the root of the humfs tree, which is the directory in which the data and metadata directories were created.

You can now do all the things that didn't work under hostfs and see that they do work here. humfs works as expected in all cases, with no interference from the host's permission checking. So, humfs is usable as a UML root filesystem, whereas hostfs can be used only with some trickery.

Now I'll cover some aspects of humfs that I didn't explain earlier. First, version 2 of humfs was created because version 1 had a bug, and fixing that bug led to the separate file_metadata and dir_metadata directories. As we've seen, the metadata files for files are straightforward. Directories have ownerships and permissions and need metadata files, but they introduce problems in some corner cases.

The initial shadow_fs design required a file called metadata in each directory in the metadata tree that would hold the ownerships and permissions for the parent directory. Of course, each file in the original directory would have a file in the metadata tree with the same name. But I missed this case: What metadata file should be used for a file called metadata? Both the file and the parent directory would want to use the same metadata file, metadata.

Another problem occurs with a subdirectory called metadata. In this case, the metadata file will want to be both a directory (because the metadata directory structure is identical to the data directory structure) and a file (because the parent directory will want to put its metadata there).

The solution I chose was to separate the file and directory metadata information from each other. With them in separate directory trees, the first collision I described doesn't exist. However, the second does. The solution to that is to allow the metadata directory to be created, and rename the parent directory's metadata file. It turns out that it can be renamed to anything that doesn't collide with a subdirectory. The reason is that in the dir_metadata tree, there will be only one normal file in each directory. If metadata is a directory, the humfs filesystem will need to scan the directory for a normal file, and that will be the metadata file for the parent directory.
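The scan for the one normal file can be sketched in Python as follows. This is a model of the lookup just described, not the humfs source; the function name is hypothetical, and raising an error when the count is not exactly one is a choice made for the example.

```python
import os


def find_dir_metadata(metadata_dir):
    """Return the path of the single regular file in metadata_dir.

    In the layout described above, each directory in the dir_metadata tree
    holds exactly one normal file; everything else is a subdirectory.
    """
    regular = [
        entry.path
        for entry in os.scandir(metadata_dir)
        if entry.is_file(follow_symlinks=False)
    ]
    if len(regular) != 1:
        raise ValueError(f"expected one metadata file, found {len(regular)}")
    return regular[0]
```

Because the lookup goes by file type rather than by name, a subdirectory called metadata no longer collides with anything.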

The next question is this: Why do we specify the metadata format in the superblock file? When I first introduced humfs, with the version 1 shadow_fs format, there were a bunch of suggestions for alternate formats. They generally have advantages and disadvantages compared to the shadow_fs format, and I thought it would be interesting to support some of them and let system administrators choose among them.

These proposals came in two classes: those that preserved some sort of shadow metadata directory hierarchy, and those that put the metadata in some sort of database. An interesting example of the first class was to make all of the metadata files symbolic links, rather than normal files, and store the metadata in the link target. This would make them dangling links, as the targets would not exist, but it would allow somewhat more efficient reading of the metadata.

Reading a file requires three system calls: an open, a read, and a close. Reading the target of a symbolic link requires one: a readlink. Against this slight performance gain, there would be some loss of manageability, as system administrators and their tools expect to read contents of files, not targets of symbolic links.
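The two access patterns can be compared directly in Python, whose os module exposes thin wrappers over these system calls. This sketch only illustrates the proposal; the helper names and the reuse of the "mode uid gid" text format as a link target are assumptions.

```python
import os


def write_metadata_link(path, mode, uid, gid):
    """Store 'mode uid gid' as the target of a (dangling) symbolic link."""
    os.symlink(f"{mode} {uid} {gid}", path)


def read_metadata_link(path):
    """Read the metadata back with a single readlink call."""
    mode, uid, gid = os.readlink(path).split()
    return int(mode), int(uid), int(gid)


def read_metadata_file(path):
    """The regular-file equivalent: open, read, and close."""
    fd = os.open(path, os.O_RDONLY)
    try:
        mode, uid, gid = os.read(fd, 64).decode().split()
    finally:
        os.close(fd)
    return int(mode), int(uid), int(gid)
```

The manageability cost is visible here too: cat on such a link fails because the target does not exist, so an administrator would need readlink or ls -l to inspect it.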

The second class of proposals, storing metadata in databases of various sorts, is also interesting. Depending on the database, it could allow for more efficient retrieval of metadata, which is nice. However, what makes it more interesting to me is that the database could be used on the host to do queries much more quickly than with a normal filesystem. The host administrator could ask questions about what files had been modified recently or what files are setuid root and could get answers very quickly, without having to search the entire filesystem.

Even more interesting would be the ability to import this capability into the UML, where the UML administrator, who probably cares about the answers more than the host administrator does, could ask these questions. I'm planning to allow this through yet another filesystem, which would make a database look like a filesystem. The UML admin would mount this filesystem inside the UML and query the database underneath it like this:

UML# cat /sqlfs/"select name from root_fs where setuid = 1"

/usr/bin/newgrp

/usr/bin/traceroute6

/usr/bin/chfn

/usr/bin/chsh

/usr/bin/gpasswd

/usr/bin/passwd

The "file" associated with a query would contain the results of that query. In the example above, we searched the database for all setuid files, and the results came back as the contents of a file.

With humfs, only the file metadata would be indexed in the database. It is possible to do the same thing with the contents of files. This would take a different framework than that which enables humfs but is still not difficult. It would be possible to load a UML filesystem into a database, be it SQL, Glimpse, or Google, and have that database imported into UML as a bootable filesystem. Queries to the database would be provided by a separate filesystem, as described earlier. In this way, UML users would have access to their files through any database the host administrator is willing to provide.

An alternate use of this is to load some portion of your data, such as your mail, into such a database-backed filesystem. These directories and files will remain accessible in the normal way, but the database interface to them will allow you to search the file contents more quickly than is possible with utilities such as find and grep. For example, loading your mail directory into a filesystem indexed by something like Glimpse would give you a very fast way to search your mail. It would still be a normal Linux filesystem, so mail clients and the like would still work on it, and the index would be kept up to date constantly since the filesystem sees all changes and feeds them into the index. This means that you could search for something soon after it is created (and find it) rather than waiting for the next indexing run, which would probably be in the wee hours, making the change visible in the index the following day.

Host Access to UML Filesystems

To round out this discussion of UML filesystem options, we need to take another look at the standard ubd block device. Both humfs and hostfs allow easy access on the host to the UML's files since both mount host directory hierarchies into UML. With hostfs, these files can be manipulated directly.

With humfs, some knowledge of the directory layout is necessary. Changing the contents of a file is done in the expected way, while changing metadata (permissions, ownerships, and file type in the case of devices, sockets, and named pipes) requires that the contents of the metadata file be changed, rather than simply using the usual tools such as chmod and chown. In the case of a database representation of the metadata, this would require a database update.

A ubd device allows even less convenient access to the UML's files, as a filesystem image is a rather opaque storage medium. However, loop-mounting the image on the host provides hostfs-like access to the files. This works as follows:

host# mount uml-root-fs host-mount-point -o loop

After this, the UML filesystem is available as a normal directory hierarchy under host-mount-point. There are two caveats. First, the UML should not be running at this point, since there is no guarantee that the filesystem is consistent. There may be data cached inside the UML that hasn't been flushed out to the filesystem image and that is needed in order for the filesystem to be consistent. Second, any sort of mount requires root privileges. So, while a loopback-mount makes a ubd device look like a hostfs directory, it is necessary to be root on the host and, normally, for the UML to not be running. In the next section, we'll look at a way around this last restriction and describe a method for getting a consistent backup from a running UML instance.

This consistency problem is also present with hostfs and humfs. By default, they cache changes to their files inside the UML page cache, writing them out later. If you change a hostfs or humfs file, you probably won't see the change on the host immediately. When hostfs is used as a file transfer mechanism between the UML instance and the host, this can be a problem. It can be solved by mounting the filesystem synchronously, so that all changes are written immediately to the host. This is most easily done by adding sync to the options field in the UML /etc/fstab file:

none /host hostfs sync 0 0

If the filesystem is already mounted, it can be remounted to be synchronous without disturbing anything that might already be using it:

mount -o remount,sync /host

Doing this will decrease the performance of the filesystem, as the amount of I/O that it does will be greatly increased.

hostfs is more likely to be used as a file transfer mechanism between the UML instance and the host since the humfs directory structure doesn't lend itself as well to being used in this way. A host directory can also be shared with hostfs between multiple UML instances without problems because the filesystem consistency is maintained by the host. Delays in seeing file updates will happen with a hostfs mount shared by multiple UML instances just as they happen when the mount is shared by the host and UML instance. To avoid this, the hostfs directories have to be mounted synchronously by all of the UML instances.

The hostfs directory does not have to be mounted synchronously on the host: changes made by the host are immediately visible.

Making Backups

The final point of comparison between ubd devices, hostfs, and humfs is how to back them up on the host. hostfs should normally be used only for access to host files that don't form a UML filesystem, so the question of specifically backing them up shouldn't arise. However, if a directory on the host is expected to be mounted as a hostfs mount, backing it up on the host can be done normally, using any backup utility desired. The consistency of the hierarchy is guaranteed by the host since it's a normal host filesystem. Any changes that are still cached inside the UML will obviously not be captured by a backup, but this won't affect the consistency of a backup.

humfs is a bit more difficult. Since file metadata is stored separately from the file, a straightforward backup on the host could possibly be inconsistent if the filesystem is active within the UML. For example, when a humfs file is deleted, both the data file and the metadata file (in the case of the shadow_fs metadata format) must be deleted. If the backup is taken between these two deletions, it will be inconsistent, as it will show a partially deleted file. The obvious way around this problem is to ensure that the humfs filesystem isn't mounted at the time of the backup, either by shutting down the UML or by having it unmount the filesystem. This last option might be difficult if the humfs filesystem is the UML's root.

However, there is a neat trick to get around this problem: a facility within Linux called Magic SysRq. On a physical system, this involves using the SysRq key in combination with some other key in order to get the kernel to do one of a set of operations. This is normally used in emergencies, to exercise some degree of control over the machine when nothing else works. One of the functions provided by the facility is to flush all filesystem changes to stable storage. On a physical machine, this would normally be done prior to crashing it by turning off the power or hitting the reset button. Flushing out dirty data ensures that the filesystems will be in good shape when the system is rebooted.

In addition to this, UML's mconsole facility provides the ability to stop the virtual machine, so that it only listens to mconsole requests, and later continue it.

The trick involves these three operations:

host% uml_mconsole umid stop

OK

host% uml_mconsole umid sysrq s

OK

host% uml_mconsole umid go

OK

Here, we stop the UML, force it to sync all data to disk (sysrq s), and restart it.

When this is being done as part of a backup procedure, the actual backup would take place between the sysrq s command and continuing the UML.
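A backup script built around this sequence might look like the following Python sketch. It assumes uml_mconsole is on the PATH and, as the text does, that the flush has finished once the sysrq s request returns. The do_backup callable and the function names are hypothetical; the mconsole parameter exists only so the sequence can be exercised without a running UML.

```python
import subprocess


def run_mconsole(umid, *request):
    """Send one request to a UML instance with the uml_mconsole client."""
    subprocess.run(["uml_mconsole", umid, *request], check=True)


def backup_running_uml(umid, do_backup, mconsole=run_mconsole):
    """Stop the UML, flush dirty data, back up, then let it continue.

    do_backup is a caller-supplied function (hypothetical here) that copies
    the humfs tree or ubd image to its destination.
    """
    mconsole(umid, "stop")
    try:
        mconsole(umid, "sysrq", "s")   # flush filesystem changes
        do_backup()
    finally:
        # Always resume the instance, even if the sync or the backup failed.
        mconsole(umid, "go")
```

Putting go in a finally clause is a design choice for the sketch: a failed backup should not leave the virtual machine stopped.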

Finally, backing up ubd filesystem images involves the same considerations as humfs filesystems. Without taking care, you may back up an inconsistent image, and booting a UML on it may not work. However, in lieu of shutting down the UML, the mconsole trick I just described for a humfs filesystem will work just as well for a ubd image. If the ubd filesystem uses a COW layer, this can be extremely fast. In this case, only the COW file needs to be copied, and if it is largely empty, and the backup tool is aware of sparse files, a multigigabyte COW file can be copied to a safe place in a few seconds.
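Whether a COW file is actually sparse can be checked on the host by comparing its length with the space allocated to it. The Python sketch below assumes a Linux host, where st_blocks is counted in 512-byte units; the function names are hypothetical.

```python
import os


def sizes(path):
    """Return (apparent_size, allocated_size) in bytes for a file."""
    info = os.stat(path)
    # On Linux, st_blocks counts 512-byte units.
    return info.st_size, info.st_blocks * 512


def is_sparse(path):
    """True if the file occupies less space on disk than its length."""
    apparent, allocated = sizes(path)
    return allocated < apparent
```

A copy only stays fast and small if the tool preserves the holes; GNU cp with --sparse=always and GNU tar with -S are two that can.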

Extending Filesystems

Sometimes you might set up a filesystem for a UML instance that subsequently turns out to be too small. For the different types of filesystems we have covered in this chapter, there are different options.

By default, the space available in a hostfs mount is the same as in the host filesystem in which the data resides. Increasing this requires either deleting files to increase the amount of free space or increasing the size of the filesystem somehow. If the filesystem resides on a logical volume, a free disk partition can be added to the corresponding volume group. Otherwise, you will need to move the hostfs data to a different partition or repartition the disk to increase the size of the existing partition.

Another option is to control the space consumption on hostfs mounts by using quotas on the host. By running different UML instances as different UIDs and assigning disk quotas to those UIDs, you can control the disk consumption independently of the space that's actually available on the host filesystem. In this case, increasing the space available to a UML instance on a hostfs mount is a matter of adjusting its disk quota on the host.

As we saw earlier, you can change the size of a humfs mount by changing the value on the total line in the superblock file.

The situation with a ubd block device is more complicated. Increasing the size of the host file is simple:

host% dd if=/dev/zero of=root_fs bs=1024 \

seek=$[ 2 * 1024 * 1024 ] count=1

This increases the size of the root_fs file to 2GB. A more complicated problem is making that extra space available within the UML filesystem. Some but not all filesystems support being resized without making a backup and recreating the filesystem from scratch. Fewer support being resized without unmounting the filesystem. One that does is ext2 (and ext3 since it has a nearly identical on-disk format). By default, ext2online resizes the filesystem to fill the disk that it resides on, which is what you almost always want:

UML# ext2online /dev/ubda

You can also specify the mount point rather than the block device, which may be more intuitive and less error prone:

With other filesystems, you may have to unmount the filesystem before resizing it to fill the device. If the filesystem in question is the UML instance's root filesystem, you will likely need to halt the instance and resize the filesystem on the host.

For filesystems that don't support resizing at all, you have to copy the data to someplace else and recreate the filesystem from scratch using mkfs. Then you can copy your data back into it. Again, if this is the root filesystem of the UML instance, you will need to shut it down and then recreate the filesystem on the host.

When to Use What

Now that you have learned about these three mechanisms for providing filesystem data to a UML, the question remains: Under what circumstances should you use each of them? The answer is fairly easy for hostfs: normally, it should be used only for access to host files that belong to the user owning the UML or to files that are available read-only. In the first case, the user should be logged in to the UML as root, and there should be no other UML users accessing the hostfs mount. In the second, the read-only restriction avoids all of the permission and ownership issues with hostfs.

humfs hierarchies and ubd images can be used to provide general-purpose filesystems, including root filesystems. humfs provides easier access to the UML files, although some care is needed when changing those files in order to ensure that the file metadata is updated properly.

There are also some potential efficiency advantages with both humfs and ubd devices. An issue with host memory consumption is that both the host and UML will generally cache file data separately. As a result, the host's memory will contain multiple copies of UML file data, one in the host's page cache and one for each UML that has read the data.

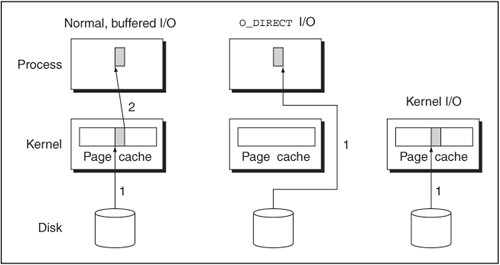

ubd devices can avoid this double caching by using O_DIRECT I/O on 2.6 hosts. O_DIRECT avoids the use of the host page cache, so the only copies of the data will be in the UMLs that have read it. In order to truly minimize host memory consumption, this should be used only for data that's private to the UML, such as a private filesystem image or a COW file. For a COW file, the memory savings obtained by avoiding the double caching are probably outweighed by the duplicate caching of the backing file data in the UMLs that are sharing it.

For shared data, humfs avoids the double caching by mapping the data from the host. The data is cached on the host, but mapping it provides the UML with the same page of memory that's in the host page cache. Taking advantage of this would require a form of COW for humfs, which currently doesn't exist. A file-level form of COW is possible and may exist by the time you read this. With this, a humfs equivalent of a backing file, in the form of a read-only host directory hierarchy, would be mapped into the UMLs that share it. They would all share the same memory, so there would be only one copy of it in the host's memory.

In short, both ubd devices and humfs directories have a place in a well-run UML installation. The use of one or the other should be driven by the importance of convenient host access to the UML filesystem, the ease and speed of making backups of the data, and avoidance of excessive host memory consumption.

Chapter 7. UML Networking in Depth

Manually Setting Up Networking

TUN/TAP with Routing

In earlier chapters we briefly looked at how to put a UML on the network. Now we will go into this area in some depth. The most involved part is setting up the host when the UML will be given access to the physical network. The host is responsible for transmitting packets between the network and the UML, so correct setup is essential for a working UML network.

There are two different methods for configuring the host to allow a UML access to the outside world: routing packets to and from the UML and bridging the host side of the virtual interface to the physical Ethernet device. First we will use the former method, which is more complicated. We will start with a completely unconfigured host, on which a UML will fail to get access to the network, and, step by step, we'll debug it until the UML is a fully functional network node. This will provide a good understanding of exactly what needs to be done to the host and will allow you to adapt it to your own needs. The step-by-step debugging will also show you how to debug connectivity problems that you encounter on your own.

Later in this chapter, we will cover the second method, bridging. It is simpler but has disadvantages and pitfalls of its own.

Configuring a TUN/TAP Device

We are going to use the same host mechanism, TUN/TAP, as before. Since we are doing this entirely by hand, we need to provide a TUN/TAP device for the UML to attach to. This is done with the tunctl utility:

host% tunctl

Failed to open '/dev/net/tun' : Permission denied

This is the first of many roadblocks we will encounter and overcome. In this case, we can't manipulate TUN/TAP devices as a normal user because the permissions on the control device are too restrictive:

host% ls -l /dev/net/tun

crw------- 1 root root 10, 200 Jul 30 07:36 /dev/net/tun

I am going to do something that's a bit risky from a security standpoint: change the permissions to allow any user to create TUN/TAP devices:

host# chmod 666 /dev/net/tun

When the TUN/TAP control device is open like this, any user can create an interface and use it to inject arbitrary packets into the host networking system. This sounds nasty, but the actual practicality of an attack is doubtful. When you can construct packets and get the host to route them, you can do things like fake name server, DHCP, or Web responses to client requests. You could also take over an existing connection by faking packets from one of the parties. However, faking a server response requires knowing there was a request from a client and what its contents were. This is difficult because you have set yourself up to create packets, not receive them. Receiving packets still requires help from root.

Faking a server response without knowing whether there was an appropriate request requires guessing and spraying responses out to the network, hoping that some host has just sent a matching request and will be faked out by the response. If successful, such an attack could persuade a DHCP client to use a name server of your choice. With a maliciously configured name server, this would allow the attacker to see essentially all of the client's subsequent network traffic since nearly all transactions start with a name lookup.

Another possibility is to fake a name server response. If successful, this would allow the attacker to intercept the resulting connection, with the possibility of seeing sensitive data if the intercepted connection is to a bank Web site or something similar.

However, opening up /dev/net/tun as I have just done would require that such an attack be done blind, without being able to see any incoming packets. So, attacks on clients must be done randomly, which would require very high amounts of traffic for even a remote chance of success. Attacks on existing connections must similarly be done blind, with the added complication that the attack must correctly guess a random TCP sequence number.

So, normally, the chance of a successful attack would be remote. However, you should take this possibility seriously. The permissions on /dev/net/tun are a layer of protection against this sort of attack, and removing it increases the possibility of being attacked using an unrelated vulnerability. For example, if there was an exploit that allowed an attacker to sniff the network, the arguments I just made about how unlikely a successful attack would be go right out the window. Attacks would no longer be blind, and the attacker could see DHCP and name requests and try to respond to them through a TUN/TAP device, with good chances of success. In this case, the /dev/net/tun permissions would have likely stopped the attacker.

So, before opening up /dev/net/tun, consider whether you have untrusted, and possibly malicious, users on the host and whether you think there is any possibility of holes that would allow outsiders to gain shell access to the host. If that is remotely possible, you may consider a better option, which is used by Debian: create a uml-users group and make /dev/net/tun accessible only to members of that group. This reduces the number of accounts that could possibly be used to attack your network. It doesn't eliminate the risk, as one of those users could be malicious, or an outsider could gain access to one of those accounts.

However you have decided to set up /dev/net/tun, you should have read and write access to it, either as a normal user or as a member of a uml-users group. Once this is done, you can try the tunctl command again and it will succeed:

host% tunctl

Set 'tap0' persistent and owned by uid 500

This created a new TUN/TAP device and made it usable by the user who ran tunctl.

For scripting purposes, a -b option makes tunctl output only the new device name:

This eliminates the need to parse the relatively verbose output from the first form of the command.
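In a script, the -b form might be used roughly as follows. This is only a sketch: the create_tap function name is hypothetical, and it assumes the script runs with enough privilege to open /dev/net/tun.

```shell
# Sketch: create a TUN/TAP device owned by the given user and print its name.
# Usage: create_tap OWNER   (hypothetical helper)
create_tap() {
    owner=$1

    # -b makes tunctl print only the device name; -u sets the owner.
    dev=$(tunctl -b -u "$owner") || return 1

    # Treat an empty name as a failure rather than passing it on.
    [ -n "$dev" ] || return 1
    printf '%s\n' "$dev"
}
```

A caller can then capture the name directly, as in dev=$(create_tap jdike), and pass it to later commands.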

There are also -u and -t options, which allow you to specify, respectively, which user the new TUN/TAP device will belong to and which TUN/TAP device that will be:

host# tunctl -u jdike -t jeffs-uml

Set 'jeffs-uml' persistent and owned by uid 500

This demonstrates a highly useful feature: the ability to give arbitrary names to the devices. Suitably chosen, these can serve as partial documentation of your UML network setup. We will use this jeffs-uml device from now on.

For cleanliness, we should shut down all of the TUN/TAP devices created by our playing with tunctl with commands such as the following:

host% tunctl -d tap0

Set 'tap0' nonpersistent

ifconfig -a will show you all the network interfaces on the system, so you should probably shut down all of the TUN/TAP devices except for the last one you made and any others created for some other specific reason.

The first thing to do is to enable the device:

host# ifconfig jeffs-uml 192.168.0.254 up

host# ifconfig jeffs-uml

jeffs-uml Link encap:Ethernet HWaddr 2A:B1:37:41:72:D5

inet addr:192.168.0.254 Bcast:192.168.0.255 \

Mask:255.255.255.0

inet6 addr: fe80::28b1:37ff:fe41:72d5/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:5 overruns:0 carrier:0

collisions:0 txqueuelen:500

RX bytes:0 (0.0 b) TX bytes:0 (0.0 b)

As usual, choose an IP address that's suitable for your network. If IP addresses are scarce, you can reuse one that's already in use by the host, such as the one assigned to its eth0.

Basic Connectivity

Let's add a new interface to a UML instance. If you have an instance already running, you can plug a new network interface into it by using uml_mconsole:

host% uml_mconsole debian config eth0=tuntap,jeffs-uml

OK

If you are booting a new UML instance, you can do the same thing on the command line by adding eth0=tuntap,jeffs-uml.

This differs from the syntax we saw earlier. Before, we specified an IP address and no device name. Here, we specify the device name but not an IP address. When no device name is given, that signals the driver to invoke the uml_net helper to configure the host. When a name is given, the driver uses it and assumes that it has already been configured appropriately.

Now that the instance has an Ethernet device, we can configure it and bring it up:

UML# ifconfig eth0 192.168.0.253 up

Let's try pinging the host:

UML# ping 192.168.0.254

PING 192.168.0.254 (192.168.0.254): 56 data bytes

--- 192.168.0.254 ping statistics ---

28 packets transmitted, 0 packets received, 100% packet loss

Nothing but silence. The usual way to start debugging problems like this is to sniff the interface using tcpdump or a similar tool. With the ping running again, we see this:

host# tcpdump -i jeffs-uml -l -n

tcpdump: verbose output suppressed, use -v or -vv for full \

protocol decode

listening on jeffs-uml, link-type EN10MB (Ethernet), capture \

size 96 bytes

18:12:34.115634 IP 192.168.0.253 > 192.168.0.254: icmp 64: echo \

request seq 0

18:12:35.132054 IP 192.168.0.253 > 192.168.0.254: icmp 64: echo \

request seq 256

Ping requests are coming out, but no replies are getting back to it. This is a routing problem: we have not yet set any routes to the TUN/TAP device, so the host doesn't know where to send the ping replies. This is easily fixed:

host# route add -host 192.168.0.253 dev jeffs-uml

Now, pinging from the UML instance works:

UML# ping 192.168.0.254

PING 192.168.0.254 (192.168.0.254): 56 data bytes

64 bytes from 192.168.0.254: icmp_seq=0 ttl=64 time=0.7 ms

64 bytes from 192.168.0.254: icmp_seq=1 ttl=64 time=0.1 ms

64 bytes from 192.168.0.254: icmp_seq=2 ttl=64 time=0.1 ms

--- 192.168.0.254 ping statistics ---

3 packets transmitted, 3 packets received, 0% packet loss

round-trip min/avg/max = 0.1/0.3/0.7 ms

It's always a good idea to check connectivity in both directions, in case there is a problem in one direction but not the other. So, check whether the host can ping the instance:

host% ping 192.168.0.253

PING 192.168.0.253 (192.168.0.253) 56(84) bytes of data.

64 bytes from 192.168.0.253: icmp_seq=0 ttl=64 time=0.169 ms

64 bytes from 192.168.0.253: icmp_seq=1 ttl=64 time=0.077 ms

--- 192.168.0.253 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1000ms

rtt min/avg/max/mdev = 0.077/0.123/0.169/0.046 ms, pipe 2

So far, so good. The next step is to ping a host on the local network by its IP address:

UML# ping 192.168.0.3

PING 192.168.0.3 (192.168.0.3): 56 data bytes

--- 192.168.0.3 ping statistics ---

7 packets transmitted, 0 packets received, 100% packet loss

No joy. Using tcpdump to check what's happening shows this:

host# tcpdump -i jeffs-uml -l -n

tcpdump: verbose output suppressed, use -v or -vv for full \

protocol decode

listening on jeffs-uml, link-type EN10MB (Ethernet), capture \

size 96 bytes

18:20:29.522769 arp who-has 192.168.0.3 tell 192.168.0.253

18:20:30.524576 arp who-has 192.168.0.3 tell 192.168.0.253

18:20:31.522430 arp who-has 192.168.0.3 tell 192.168.0.253

The UML instance is trying to figure out the Ethernet MAC address of the target. To this end, it's broadcasting an arp request on its eth0 interface and hoping for a response. It's not getting one because the target machine can't hear the request. arp requests, like other Ethernet broadcast protocols, are limited to the Ethernet segment on which they originate, and the UML eth0 to host TUN/TAP connection is effectively an isolated Ethernet strand with only two hosts on it. So, the arp requests never reach the physical Ethernet where the other machine could hear them and respond.

This can be fixed by using a mechanism known as proxy arp and enabling packet forwarding. First, turn on forwarding:

host# echo 1 > /proc/sys/net/ipv4/ip_forward

Then enable proxy arp on the host for the TUN/TAP device:

host# echo 1 > /proc/sys/net/ipv4/conf/jeffs-uml/proxy_arp

This will cause the host to arp to the UML instance on behalf of the rest of the network, making the host's arp database available to the instance. Retrying the ping and watching tcpdump shows this:

host# tcpdump -i jeffs-uml -l -n

tcpdump: verbose output suppressed, use -v or -vv for full \

protocol decode

listening on jeffs-uml, link-type EN10MB (Ethernet), capture \

size 96 bytes

19:25:16.465574 arp who-has 192.168.0.3 tell 192.168.0.253

19:25:16.510440 arp reply 192.168.0.3 is-at ae:42:d1:20:37:e5

19:25:16.510648 IP 192.168.0.253 > 192.168.0.3: icmp 64: echo \

request seq 0

19:25:17.448664 IP 192.168.0.253 > 192.168.0.3: icmp 64: echo \

request seq 256

There is still no pinging, but the arp request did get a response. We can verify this by seeing what's in the UML arp cache.

UML# arp

Address HWtype HWaddress Flags Mask \

Iface

192.168.0.3 ether AE:42:D1:20:37:E5 C \

eth0

If you see nothing here, it's likely because too much time elapsed between running the ping and the arp, and the arp entry got flushed from the cache. In this case, rerun the ping, and run arp immediately afterward.

Since the instance is now getting arp service for the rest of the network, and ping requests are making it out through the TUN/TAP device, we need to follow those packets to see what's going wrong. On my host, the outside network device is eth1, so I'll watch that. On other machines, the outside network will likely be eth0. It's also a good idea to select only packets involving the UML, to eliminate the noise from other network activity:

host# tcpdump -i eth1 -l -n host 192.168.0.253

tcpdump: verbose output suppressed, use -v or -vv for full \

protocol decode

listening on eth1, link-type EN10MB (Ethernet), capture size \

96 bytes

19:36:14.459076 IP 192.168.0.253 > 192.168.0.3: icmp 64: echo \

request seq 0

19:36:14.461960 arp who-has 192.168.0.253 tell 192.168.0.3

19:36:15.460608 arp who-has 192.168.0.253 tell 192.168.0.3

Here we see a ping request going out, which is fine. We also see an arp request from the other host for the MAC address of the UML instance. This is going unanswered, so this is the next problem.

We set up proxy arp in one direction, for the UML instance on behalf of the rest of the network. Now we need to set it up in the other direction, for the rest of the network on behalf of the instance, so that the host will respond to arp requests for the instance:

host# arp -Ds 192.168.0.253 eth1 pub

Retrying the ping gets some good results:

UML# ping 192.168.0.3

PING 192.168.0.3 (192.168.0.3): 56 data bytes

64 bytes from 192.168.0.3: icmp_seq=0 ttl=63 time=133.1 ms

64 bytes from 192.168.0.3: icmp_seq=1 ttl=63 time=4.0 ms

64 bytes from 192.168.0.3: icmp_seq=2 ttl=63 time=4.9 ms

--- 192.168.0.3 ping statistics ---

3 packets transmitted, 3 packets received, 0% packet loss

round-trip min/avg/max = 4.0/47.3/133.1 ms

To be thorough, let's make sure we have connectivity in the other direction and ping the UML instance from the other host:

192.168.0.3% ping 192.168.0.254

PING 192.168.0.254 (192.168.0.254) from 192.168.0.3 : 56(84) \

bytes of data.

64 bytes from 192.168.0.254: icmp_seq=1 ttl=64 time=6.48 ms

64 bytes from 192.168.0.254: icmp_seq=2 ttl=64 time=2.76 ms

64 bytes from 192.168.0.254: icmp_seq=3 ttl=64 time=2.75 ms

--- 192.168.0.254 ping statistics ---

3 packets transmitted, 3 received, 0% loss, time 2003ms

rtt min/avg/max/mdev = 2.758/4.000/6.483/1.756 ms

We now have basic network connectivity between the UML instance and the rest of the local network. Here's a summary of the steps we took.

Create the TUN/TAP device for the UML instance to use to communicate with the host.

Enable packet forwarding on the host.

Enable proxy arp in both directions between the UML instance and the rest of the network.
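The host-side commands used in this section can be collected into one place. The sketch below only prints them for a given set of names, so they can be reviewed before being run as root; the function name and argument order are hypothetical, and the commands themselves are the ones shown earlier, including enabling the device and adding the host route.

```shell
# Sketch: print the host-side setup commands for one routed UML instance.
# Usage: uml_routed_setup OWNER TAP HOST_IP UML_IP EXT_IF   (hypothetical helper)
uml_routed_setup() {
    owner=$1 tap=$2 host_ip=$3 uml_ip=$4 ext_if=$5
    cat <<EOF
tunctl -u $owner -t $tap
ifconfig $tap $host_ip up
route add -host $uml_ip dev $tap
echo 1 > /proc/sys/net/ipv4/ip_forward
echo 1 > /proc/sys/net/ipv4/conf/$tap/proxy_arp
arp -Ds $uml_ip $ext_if pub
EOF
}
```

With the values used in this chapter, uml_routed_setup jdike jeffs-uml 192.168.0.254 192.168.0.253 eth1 prints the six commands; piping that output to sh as root would apply them.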

Thoughts on Security

At this point, the machinations of the uml_net helper should make sense. To recap, let's add another interface to the instance and let uml_net set it up for us:

host% uml_mconsole debian config eth1=tuntap,,,192.168.0.252

OK

Configuring the new device in the instance shows us this:

UML# ifconfig eth1 192.168.0.251 up

* modprobe tun

* ifconfig tap0 192.168.0.252 netmask 255.255.255.255 up

* bash -c echo 1 > /proc/sys/net/ipv4/ip_forward

* route add -host 192.168.0.251 dev tap0

* bash -c echo 1 > /proc/sys/net/ipv4/conf/tap0/proxy_arp

* arp -Ds 192.168.0.251 jeffs-uml pub

* arp -Ds 192.168.0.251 eth1 pub

Here we can see the helper doing just about everything we just finished doing by hand. The one thing that's missing is actually creating the TUN/TAP device. uml_net does that itself, without invoking an outside utility, so that doesn't show up in the list of commands it runs on our behalf.

Aside from knowing how to configure the host in order to support a networked UML instance, this is also important for understanding the security implications of what we have done and for customizing this setup for a particular environment.

What uml_net does is not secure against a nasty root user inside the instance. Consider what would happen if the UML user decided to configure the UML eth0 with the same IP address as your local name server. uml_net would set up proxy arp to direct name requests to the UML instance. The real name server would still be there getting requests, but some requests would be redirected to the UML instance. With a name server in the UML instance providing bogus responses, this could easily be a real security problem. For this reason, uml_net should not be used in a serious UML establishment. Its purpose is to make UML networking easy to set up for the casual UML user. For any more serious uses of UML, the host should be configured according to the local needs, security and otherwise.

What we just did by hand isn't that bad because we set the route to the instance and proxy arp according to the IP address we expected it to use. If root inside our UML instance decides to use a different IP address, such as that of our local name server, it will see no traffic. The host will only arp on behalf of the IP we expect it to use, and the route is only good for that IP. All other traffic will go elsewhere.

A nasty root user can still send out packets purporting to be from other hosts, but since it can't receive any responses to them, it would have to make blind attacks. As I discussed earlier, this is unlikely to enable any successful attacks on its own, but it does remove a layer of protection that might prove useful if another exploit on the host allows the attacker to see the local network traffic.

So, it is probably advisable to filter out any unexpected network traffic at the iptables level. First, let's see that the UML instance can send out packets that pretend to be from some other host. As usual for this discussion, these will be pings, but they could just as easily be any other protocol.

UML# ifconfig eth0 192.168.0.100 up

UML# ping 192.168.0.3

PING 192.168.0.3 (192.168.0.3): 56 data bytes

--- 192.168.0.3 ping statistics ---

4 packets transmitted, 0 packets received, 100% packet loss

Here I am pretending to be 192.168.0.100, which we will consider to be an important host on the local network. Watching the jeffs-uml device on the host shows this:

host# tcpdump -i jeffs-uml -l -n

tcpdump: verbose output suppressed, use -v or -vv for full \

protocol decode

listening on jeffs-uml, link-type EN10MB (Ethernet), capture \

size 96 bytes

20:20:34.978090 arp who-has 192.168.0.3 tell 192.168.0.100

20:20:35.506878 arp reply 192.168.0.3 is-at ae:42:d1:20:37:e5

20:20:35.508062 IP 192.168.0.100 > 192.168.0.3: icmp 64: echo \

request seq 0

We can see those faked packets reaching the host. Looking at the host's interface to the rest of the network, we can see they are reaching the local network:

host# tcpdump -i eth1 -l -n

tcpdump: verbose output suppressed, use -v or -vv for full \

protocol decode

listening on eth1, link-type EN10MB (Ethernet), capture size \

96 bytes

20:23:30.741482 IP 192.168.0.100 > 192.168.0.3: icmp 64: echo \

request seq 0

20:23:30.744305 arp who-has 192.168.0.100 tell 192.168.0.3

Notice that arp request. It will be answered correctly, so the ping responses will go to the actual host that legitimately owns 192.168.0.100, which is not expecting them. That host will discard them, so they will cause no harm except for some wasted network bandwidth and CPU cycles. However, it would be preferable for those packets not to reach the network or the host in the first place. This can be done as follows:

host# iptables -A FORWARD -i jeffs-uml -s \! 192.168.0.253 -j \

DROP

Warning: wierd character in interface `jeffs-uml' (No aliases, \

:, ! or *).

iptables is apparently complaining about the dash in the interface name, but it does create the rule, as we can see here:

host# iptables -L

Chain FORWARD (policy ACCEPT)

target prot opt source destination

DROP all -- !192.168.0.253 anywhere

Chain INPUT (policy ACCEPT)

target prot opt source destination

Chain OUTPUT (policy ACCEPT)

target prot opt source destination

So, we have just told iptables to discard any packet it sees that:

Is supposed to be forwarded

Enters the host through the jeffs-uml interface

Has a source address other than 192.168.0.253

After creating this firewall rule, you should be able to rerun the previous ping and tcpdump will show that those packets are not reaching the outside network.

At this point, we have a reasonably secure setup. As originally configured, the UML instance couldn't see any traffic not intended for it. With the new firewall rule, the rest of the network will see only traffic from the instance that originates from the IP address assigned to it. A possible enhancement to this is to log any attempts to use an unauthorized IP address so that the host administrator is aware of any such attempts and can take any necessary action.
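One way to add such logging is to place a LOG rule ahead of the DROP rule, since iptables evaluates a chain in order and a LOG rule placed after the DROP would never match. The sketch below prints such a pair of rules; it is meant to be used in place of the single DROP rule shown earlier rather than in addition to it. The function name and the log prefix are hypothetical, and the rules use the "! -s" form of negation that newer iptables versions expect.

```shell
# Sketch: print rules that log, then drop, forwarded packets that enter
# through a UML's TUN/TAP device with an unexpected source address.
# Usage: uml_antispoof_rules TAP UML_IP   (hypothetical helper)
uml_antispoof_rules() {
    tap=$1 uml_ip=$2

    # The LOG rule comes first; LOG does not end rule traversal,
    # so the packet continues on to the DROP rule that follows.
    echo "iptables -A FORWARD -i $tap ! -s $uml_ip -j LOG --log-prefix 'uml-spoof: '"
    echo "iptables -A FORWARD -i $tap ! -s $uml_ip -j DROP"
}
```

Running uml_antispoof_rules jeffs-uml 192.168.0.253 prints the two commands for review; the logged packets then show up in the host's kernel log with the chosen prefix.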

You could also block any packets from coming in to the UML instance with an incorrect IP address. This shouldn't happen because the proxy arp we have set up shouldn't attract any packets for IP addresses that don't belong somehow to the host, and any such packets that do reach the host won't be routed to the UML instance. However, an explicit rule to prevent this might be a good addition to a layered security model. In the event of a malfunction or compromise of this configuration, such a rule could end up being the one thing standing in the way of a UML instance seeing traffic that it shouldn't. This rule would look like this:

host# iptables -A FORWARD -o jeffs-uml -d \! 192.168.0.253 -j \

DROP

Warning: wierd character in interface `jeffs-uml' (No aliases, \

:, ! or *).

Access to the Outside Network

We still have a bit of work to do, as we have demonstrated access only to the local network, using IP addresses rather than more convenient host names. So, we need to provide the UML instance with a name service. For a single instance, the easiest thing to do is copy it from the host:

UML# cat > /etc/resolv.conf

; generated by /sbin/dhclient-script

search user-mode-linux.org

nameserver 192.168.0.3

I cut the contents of the host's /etc/resolv.conf and pasted them into the UML. You should do the same on your own machine, as my resolv.conf will almost certainly not work for you.

We also need a default route, which hasn't been necessary for the limited testing we've done so far but is needed for almost anything else:

UML# route add default gw 192.168.0.254

I normally use the IP address of the host end of the TUN/TAP device as the default gateway.

If you still have the unauthorized IP address assigned to your instance's eth0, reassign the original address:

UML# ifconfig eth0 192.168.0.253

Now we should have name service:

UML# host 192.168.0.3

Name: laptop.user-mode-linux.org

Address: 192.168.0.3

That's a local name; let's check for a remote one:

UML# host www.user-mode-linux.org

www.user-mode-linux.org A 66.59.111.166

Now let's try pinging it, to see if we have network access to the outside world:

UML# ping www.user-mode-linux.org

PING www.user-mode-linux.org (66.59.111.166): 56 data bytes

64 bytes from 66.59.111.166: icmp_seq=0 ttl=52 time=487.2 ms

64 bytes from 66.59.111.166: icmp_seq=1 ttl=52 time=37.8 ms

64 bytes from 66.59.111.166: icmp_seq=2 ttl=52 time=36.0 ms

64 bytes from 66.59.111.166: icmp_seq=3 ttl=52 time=73.0 ms

--- www.user-mode-linux.org ping statistics ---

4 packets transmitted, 4 packets received, 0% packet loss

round-trip min/avg/max = 36.0/158.5/487.2 ms

Copying /etc/resolv.conf from the host and setting the default route by hand works but is not the right thing to do. The real way to do these is with DHCP. The reason this won't work here is the same reason that ARP didn't work: the UML is on a different Ethernet strand than the rest of the network, and DHCP, being an Ethernet broadcast protocol, doesn't cross Ethernet broadcast domain boundaries.

DHCP through a TUN/TAP Device

Some tools work around the DHCP problem by forwarding DHCP requests from one Ethernet domain to another and relaying whatever replies come back. One such tool is dhcp-fwd. It needs to be installed on the host and configured. It has a fairly scary-looking default config file. You need to specify the interface from which client requests will come and the interface from which server responses will come.

In the default config file, these are eth2 and eth1, respectively.

On my machine, the client interface is jeffs-uml and the server interface is eth1. So, a global replace of eth2 with jeffs-uml, and leaving eth1 alone, is sufficient to get a working dhcp-fwd.
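The global replace can be done with sed. The following is a minimal sketch: the function name is hypothetical, it works on whatever configuration text is fed to it on standard input, and it assumes that eth2 appears in the file only as the client interface name.

```shell
# Sketch: rewrite a dhcp-fwd configuration read on stdin so that the
# client-side interface eth2 becomes the given device; eth1 is left alone.
# Usage: dhcp_fwd_set_client_if DEVICE < old-config > new-config
dhcp_fwd_set_client_if() {
    sed "s/eth2/$1/g"
}
```

It is worth comparing the result against the original with diff before installing it, to confirm that only the interface names changed.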

Let's get a clean start by unplugging the UML eth0 and plugging it back in. First we need to bring the interface down:

UML# ifconfig eth0 down

Then, on the host, remove the device:

host% uml_mconsole debian remove eth0

OK

Now, let's plug it back in:

host% uml_mconsole debian config eth0=tuntap,,fe:fd:c0:a8:00:fd,\

192.168.0.254

OK

Notice that we have a new parameter to this command. We are specifying a hardware MAC address for the interface. We never did this before because the UML network driver automatically generates one when it is assigned an IP address for the first time. It is important that these be unique. Physical Ethernet cards have a unique MAC burned into their hardware or firmware. It's tougher for a virtual interface to get a unique identity. It's also important for its IP address to be unique, and I have taken advantage of this in order to generate a unique MAC address for a UML's Ethernet device.

When the administrator provides an IP address, which is very likely to be unique on the local network, to a UML Ethernet device, the driver uses that as part of the MAC address it assigns to the device. The first two bytes of the MAC will be 0xFE and 0xFD, which is a private Ethernet range. The next four bytes are the IP address. If the IP address is unique on the network, the MAC will be, too.
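The mapping from IP address to MAC described above is simple enough to reproduce by hand when you need to supply the MAC yourself. The following sketch computes it in the shell; the function name is hypothetical, and it follows only the rule stated in the text (0xFE, 0xFD, then the four bytes of the IPv4 address).

```shell
# Sketch: derive the MAC that the rule above yields for an IPv4 address.
# Usage: uml_mac_for_ip A.B.C.D   (hypothetical helper)
uml_mac_for_ip() {
    # Split the dotted quad into its four octets.
    old_ifs=$IFS
    IFS=.
    set -- $1
    IFS=$old_ifs

    # Prefix 0xFE 0xFD, then each octet as two hex digits.
    printf 'fe:fd:%02x:%02x:%02x:%02x\n' "$1" "$2" "$3" "$4"
}
```

For 192.168.0.253 this prints fe:fd:c0:a8:00:fd, which is the MAC given on the uml_mconsole command line above.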

When configuring the interface with DHCP, the MAC is needed before the DHCP server can assign the IP. Thus, we need to assign the MAC on the command line or when plugging the device into a running UML instance.

There is another case where you may need to supply a MAC on the UML command line, which I will discuss in greater detail later in this chapter. That is when the distribution you are using brings the interface up before giving it an IP address. In this case, the driver can't supply the MAC after the fact, when the interface is already up, so it must be provided ahead of time, on the command line.

Now, assuming the dhcp-fwd service has been started on the host, dhclient will work inside UML:

UML# dhclient eth0

Internet Software Consortium DHCP Client 2.0pl5

Copyright 1995, 1996, 1997, 1998, 1999 The Internet Software \

Consortium.

All rights reserved.

Please contribute if you find this software useful.

For info, please visit http://www.isc.org/dhcp-contrib.html

Listening on LPF/eth0/fe:fd:c0:a8:00:fd

Sending on LPF/eth0/fe:fd:c0:a8:00:fd

Sending on Socket/fallback/fallback-net

DHCPREQUEST on eth0 to 255.255.255.255 port 67

DHCPACK from 192.168.0.254

bound to 192.168.0.9 -- renewal in 21600 seconds.

Final Testing

At this point, we have full access to the outside network. There is still one thing that could go wrong. Ping packets are relatively small; in some situations small packets will be unmolested but large packets, contained in full-size Ethernet frames, will be lost. To check this, we can copy in a large file:

UML# wget http://www.kernel.org/pub/linux/kernel/v2.6/\

linux-2.6.12.3.tar.bz2

--01:35:56-- http://www.kernel.org/pub/linux/kernel/v2.6/\

linux-2.6.12.3.tar.bz2 => `linux-2.6.12.3.tar.bz2'

Resolving www.kernel.org... 204.152.191.37, 204.152.191.5

Connecting to www.kernel.org[204.152.191.37]:80... connected.

HTTP request sent, awaiting response... 200 OK

Length: 37,500,159 [application/x-bzip2]

100%[====================================>] 37,500,159 \

87.25K/s ETA 00:00

01:43:04 (85.92 KB/s) - `linux-2.6.12.3.tar.bz2' saved \

[37500159/37500159]

Copying in a full Linux kernel tarball is a pretty good test, and in this case, it's fine. If the transfer hangs or makes no progress for you, it's likely that there's a problem with large packets. If so, you need to lower the Maximum Transmission Unit (MTU) of the UML's eth0:

UML# ifconfig eth0 mtu 1400

You can determine the exact value by experiment. Lower it until large transfers start working.

The cases where I've seen this involved a PPPoE connection to the outside world. PPPoE usually means a DSL connection, and I've seen UML connectivity problems when the host was my DSL gateway. Lowering the MTU to 1400 made the network fully functional. In fact, the MTU for a PPPoE connection is 1492, so lowering it to 1400 was overkill.
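The 1492 figure follows from simple arithmetic: PPPoE adds 8 bytes of encapsulation (a 6-byte PPPoE header plus a 2-byte PPP protocol field) inside the standard 1500-byte Ethernet payload. The sketch below works that out and also computes the ICMP payload size that exactly fills a given MTU, which can help when searching for a working value by experiment. The helper names are made up for this example.

```shell
# Hypothetical helpers for reasoning about MTU values.

# PPPoE carries 8 bytes of overhead inside each Ethernet payload:
# a 6-byte PPPoE header plus a 2-byte PPP protocol field.
pppoe_mtu() {
    echo $(( $1 - 8 ))
}

# Largest ICMP echo payload that fits in one packet of the given MTU:
# subtract the 20-byte IPv4 header (no options) and the 8-byte ICMP header.
ping_payload_for_mtu() {
    echo $(( $1 - 20 - 8 ))
}

pppoe_mtu 1500               # prints 1492
ping_payload_for_mtu 1492    # prints 1464
ping_payload_for_mtu 1400    # prints 1372
```

Assuming the iputils version of ping, a probe such as ping -M do -s 1464 <host> sends packets with that payload size and fragmentation prohibited, so a failure at that size suggests the usable MTU on the path is below 1492.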

Bridging

As mentioned at the start of this chapter, there are two ways to configure a host to give a UML access to the outside world. We just explored one of them. The alternative, bridging, doesn't require the host to route packets to and from the UML, and so doesn't require new routes to be created or proxy arp to be configured. With bridging, the TUN/TAP device used by the UML instance is combined with the host's physical Ethernet device into a sort of virtual switch. The bridge interface forwards Ethernet frames from one interface to another based on their destination MAC addresses. This effectively merges the broadcast domains associated with the bridged interfaces. Since it was the separation of those broadcast domains that kept DHCP and arp from working when we were doing IP forwarding, bridging provides a neat solution to these problems.

If you currently have an active UML network, you should shut it down before continuing. First, bring the interface down inside the UML instance:

UML# ifconfig eth0 down

Then, on the host, remove the device:

host% uml_mconsole debian remove eth0

OK

Bring down and remove the TUN/TAP interface, which will delete the route and one side of the proxy arp, and delete the other side of the proxy arp:

host# ifconfig jeffs-uml down

host% tunctl -d jeffs-uml

Set 'jeffs-uml' nonpersistent

host# arp -i jeffs-uml -d 192.168.0.253 pub

Now, with everything cleaned up, we can start from scratch:

host% tunctl -u jdike -t jeffs-uml

Let's start setting up bridging. The idea is that a new interface will provide the host with network access to the outside world. The two interfaces we are currently using, eth0 and jeffs-uml, will be added to this new interface. The bridge device will forward frames from one interface to the other as needed, so that both eth0 and jeffs-uml will see traffic that's intended for them (or that needs to be sent to the local network, in the case of eth0).

The first step is to create the device using the brctl utility, which is in the bridge-utils package on most distributions:

host# brctl addbr uml-bridge

In the spirit of giving interfaces meaningful names, I've called this one uml-bridge.

Now we want to add the two existing interfaces to it. For the physical interface, choose a wired Ethernet interface; for some reason, wireless interfaces don't seem to work in bridges. The virtual interface will be the jeffs-uml TUN/TAP interface.

We need to do some configuration to make it usable:

host# ifconfig jeffs-uml 0.0.0.0 up

These interfaces can't have their own IP addresses, so we have to clear the one on eth0. This is a step you want to think about carefully. If you are logged in to the host remotely, this will likely kill your session and any network access you have to it. If the host has two network interfaces, and you know that your session and all other network activity you care about is traveling over the other, then it should be safe to remove the IP address from this one:

host# ifconfig eth0 0.0.0.0

We can now add the two interfaces to the bridge:

host# brctl addif uml-bridge jeffs-uml

host# brctl addif uml-bridge eth0

And then we can look at our work:

host# brctl show

bridge name     bridge id               STP enabled     interfaces

uml-bridge      8000.0012f04be1fa       no              eth0

                                                        jeffs-uml

At this point, the bridge configuration is done and we need to bring it up as a new network interface:

host# dhclient uml-bridge

Internet Systems Consortium DHCP Client V3.0.2

Copyright 2004 Internet Systems Consortium.

All rights reserved.

For info, please visit http://www.isc.org/products/DHCP

/sbin/dhclient-script: configuration for uml-bridge not found. \

Continuing with defaults.

Listening on LPF/uml-bridge/00:12:f0:4b:e1:fa

Sending on LPF/uml-bridge/00:12:f0:4b:e1:fa

Sending on Socket/fallback

DHCPDISCOVER on uml-bridge to 255.255.255.255 port 67 interval 4

DHCPOFFER from 192.168.0.10

DHCPREQUEST on uml-bridge to 255.255.255.255 port 67

DHCPACK from 192.168.0.10

/sbin/dhclient-script: configuration for uml-bridge not found. \

Continuing with defaults.

bound to 192.168.0.2 -- renewal in 20237 seconds.

The bridge is functioning, but for any local connectivity to the UML instance, we'll need to set a route to it:

host# route add -host 192.168.0.253 dev uml-bridge

Now we can plug the interface into the UML instance and configure it there:

host% uml_mconsole debian config eth0=tuntap,jeffs-uml,\

fe:fd:c0:a8:00:fd

OK

UML# ifconfig eth0 192.168.0.253 up

Note that we plugged the jeffs-uml TUN/TAP interface into the UML instance. The bridge is merely a container for the other two interfaces, which can actually send and receive frames.

Also note that we assigned the MAC address ourselves rather than letting the UML driver do it. A MAC is necessary in order to make a DHCP request for an IP address, while the driver requires the IP address before it can construct the MAC. In order to break this circular requirement, we need to assign the MAC that the interface will get.

Now we can see some benefit from the extra setup that the bridge requires. DHCP within the UML instance now works:

UML# dhclient eth0

Internet Systems Consortium DHCP Client V3.0.2-RedHat

Copyright 2004 Internet Systems Consortium.

All rights reserved.

For info, please visit http://www.isc.org/products/DHCP

Listening on LPF/eth0/fe:fd:c0:a8:00:fd

Sending on LPF/eth0/fe:fd:c0:a8:00:fd

Sending on Socket/fallback

DHCPDISCOVER on eth0 to 255.255.255.255 port 67 interval 5

DHCPOFFER from 192.168.0.10

DHCPREQUEST on eth0 to 255.255.255.255 port 67

DHCPACK from 192.168.0.10

bound to 192.168.0.253 -- renewal in 16392 seconds.

This requires no messing around with arp or dhcp-fwd. Bridging the TUN/TAP interface and the host's Ethernet interface makes each see broadcast frames from the other. So, DHCP and arp requests sent from the TUN/TAP device are also sent through the eth0 device. Similarly, arp requests from the local network are forwarded to the TUN/TAP interface (and thus the UML instance's eth0 interface), which can respond on behalf of the UML instance.

The bridge also forwards nonbroadcast frames, based on their MAC addresses. So, DHCP and arp replies will be forwarded as necessary between the two interfaces and thus between the UML instance and the local network. This makes the DHCP forwarding and the proxy arp that we did earlier completely unnecessary.
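The forwarding behavior described in the last two paragraphs can be modeled with a toy decision function. This is a simplified sketch, not how the Linux bridge code is written: the forwarding table is a fixed string rather than something learned from traffic, and 00:11:22:33:44:55 is a made-up MAC standing in for a machine on the local network.

```shell
# Toy model of a two-port bridge. All names here are hypothetical.
PORTS="eth0 jeffs-uml"
BROADCAST="ff:ff:ff:ff:ff:ff"

# Forwarding table, one "mac port" pair per line. A real bridge learns
# these entries from the source MACs of the frames it sees.
FDB="fe:fd:c0:a8:00:fd jeffs-uml
00:11:22:33:44:55 eth0"

# bridge_out_ports IN_PORT DST_MAC
# Prints the ports the frame would be sent out of.
bridge_out_ports() {
    in_port=$1
    dst=$2
    learned=""
    if [ "$dst" != "$BROADCAST" ]; then
        learned=$(printf '%s\n' "$FDB" |
                  awk -v mac="$dst" '$1 == mac { print $2 }')
    fi
    if [ -n "$learned" ]; then
        # Known unicast: deliver only to the learned port,
        # and never back out of the port the frame arrived on.
        if [ "$learned" != "$in_port" ]; then
            echo "$learned"
        fi
    else
        # Broadcast or unknown destination: flood to every other port.
        for port in $PORTS; do
            if [ "$port" != "$in_port" ]; then
                echo "$port"
            fi
        done
    fi
}

bridge_out_ports jeffs-uml ff:ff:ff:ff:ff:ff    # DHCP or arp request: prints eth0
bridge_out_ports eth0 fe:fd:c0:a8:00:fd         # reply to the UML: prints jeffs-uml
```

The first call corresponds to a broadcast from the UML instance reaching the local network, and the second to a unicast reply finding its way back through the TUN/TAP interface.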

The main downside to bridging is the need to remove the IP address from the physical Ethernet interface before adding it to the bridge. This is a rather pucker-inducing step when the host is accessible only remotely over that one interface. Many people will use IP forwarding and proxy arp instead of bridging rather than risk taking their remote server off the net. Others have written scripts that set up the bridge, taking the server's Ethernet interface offline and bringing the bridge interface online.

Bridging and Security

Bridging provides access to the outside network in a different way than we got with routing and proxy arp. However, the security concerns are the same: we need to prevent a malicious root user from making the UML instance pretend to be an important server. Before, we filtered traffic going through the TUN/TAP device with iptables. This was appropriate for a situation that involved IP-level routing and forwarding, but it won't work here because the forwarding is done at the Ethernet level.

There is an analogous framework for doing Ethernet filtering and an analogous tool for configuring it: ebtables, with the "eb" standing for "Ethernet Bridging."

First, in order to demonstrate that we can do nasty things to our network, let's change our Ethernet MAC to one we will presume belongs to our name server or DHCP server. Then let's verify that we still have network access:

UML# ifconfig eth0 hw ether fe:fd:ba:ad:ba:ad

UML# ping -c 2 192.168.0.10

PING 192.168.0.10 (192.168.0.10) 56(84) bytes of data.

64 bytes from 192.168.0.10: icmp_seq=0 ttl=64 time=3.75 ms

64 bytes from 192.168.0.10: icmp_seq=1 ttl=64 time=1.85 ms

--- 192.168.0.10 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1018ms

rtt min/avg/max/mdev = 1.850/2.803/3.756/0.953 ms, pipe 2

We do, so we need to fix things so that the UML instance has network access only with the MAC we assigned to it.

Precisely, we want any Ethernet frame leaving the jeffs-uml interface on its way to the bridge that doesn't have a source MAC of fe:fd:c0:a8:00:fd to be dropped. Similarly, we want any frame being forwarded from the bridge to the jeffs-uml interface without that destination MAC to be dropped.

The ebtables syntax is very similar to iptables, and the following commands do what we want:

host# ebtables -A INPUT --in-interface jeffs-uml \

--source \! FE:FD:C0:A8:00:FD -j DROP

host# ebtables -A OUTPUT --out-interface jeffs-uml \

--destination \! FE:FD:C0:A8:00:FD -j DROP

host# ebtables -A FORWARD --out-interface jeffs-uml \

--destination \! FE:FD:C0:A8:00:FD -j DROP

host# ebtables -A FORWARD --in-interface jeffs-uml \

--source \! FE:FD:C0:A8:00:FD -j DROP

There is a slight subtlety here. My first reading of the ebtables man page suggested that using the FORWARD chain would be sufficient since that covers frames being forwarded by the bridge from one interface to another. This works for external traffic but not for traffic to the host itself. These frames aren't forwarded, so we could spoof our identity to the host if the ebtables configuration used only the FORWARD chain. To close this hole, I also use the INPUT and OUTPUT chains to drop frames intended for the host as well as those that are forwarded.
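The combined effect of the four rules can be summarized as a single decision, sketched below as a shell function. This is a model of the policy as described in the text, not of how ebtables evaluates its chains; the function names and the placeholder interface name "local" (standing for the host itself) are made up, and 00:11:22:33:44:55 is a hypothetical MAC on the local network.

```shell
# Model of the filtering policy: frames entering from jeffs-uml must carry
# the assigned source MAC, and frames leaving through jeffs-uml must carry
# the assigned destination MAC. Everything else is accepted.
ALLOWED_MAC="fe:fd:c0:a8:00:fd"
UML_IF="jeffs-uml"

# Lowercase the hex digits so the comparison ignores case.
lower() {
    printf '%s\n' "$1" | tr 'A-F' 'a-f'
}

# frame_verdict IN_IF OUT_IF SRC_MAC DST_MAC
frame_verdict() {
    src=$(lower "$3")
    dst=$(lower "$4")
    if [ "$1" = "$UML_IF" ] && [ "$src" != "$ALLOWED_MAC" ]; then
        echo DROP       # INPUT or FORWARD rule on the source MAC
    elif [ "$2" = "$UML_IF" ] && [ "$dst" != "$ALLOWED_MAC" ]; then
        echo DROP       # OUTPUT or FORWARD rule on the destination MAC
    else
        echo ACCEPT
    fi
}

# Spoofed source MAC from the UML instance, headed for the network:
frame_verdict jeffs-uml eth0 fe:fd:ba:ad:ba:ad 00:11:22:33:44:55    # prints DROP
# Spoofed source MAC aimed at the host itself (the INPUT case):
frame_verdict jeffs-uml local fe:fd:ba:ad:ba:ad 00:12:f0:4b:e1:fa   # prints DROP
# Legitimate frame from the UML instance:
frame_verdict jeffs-uml eth0 FE:FD:C0:A8:00:FD 00:11:22:33:44:55    # prints ACCEPT
```

The second call is the case that a FORWARD-only configuration would miss, since a frame delivered to the host is never forwarded to another interface.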

At this point the ebtables configuration should look like this:

host# ebtables -L

Bridge table: filter

Bridge chain: INPUT, entries: 1, policy: ACCEPT

-s ! fe:fd:c0:a8:0:fd -i jeffs-uml -j DROP

Bridge chain: FORWARD, entries: 2, policy: ACCEPT

-d ! fe:fd:c0:a8:0:fd -o jeffs-uml -j DROP

-s ! fe:fd:c0:a8:0:fd -i jeffs-uml -j DROP

Bridge chain: OUTPUT, entries: 1, policy: ACCEPT

-d ! fe:fd:c0:a8:0:fd -o jeffs-uml -j DROP
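In the listing above, the MAC appears as fe:fd:c0:a8:0:fd, in lowercase and without the leading zero in the fifth byte, although it was entered as FE:FD:C0:A8:00:FD. Both spellings denote the same address. When comparing such listings in a script, it may help to normalize both forms first; the following is a minimal sketch, with normalize_mac being a made-up helper name.

```shell
# Rewrite a MAC address as six two-digit lowercase hex bytes.
# normalize_mac is a hypothetical helper for comparing MAC spellings.
normalize_mac() (
    set -f          # no filename expansion while splitting
    IFS=:           # split the address on the colons
    set -- $1
    # Prefix each field with 0x so printf parses it as hexadecimal.
    printf '%02x:%02x:%02x:%02x:%02x:%02x\n' \
        "0x$1" "0x$2" "0x$3" "0x$4" "0x$5" "0x$6"
)

normalize_mac fe:fd:c0:a8:0:fd     # prints fe:fd:c0:a8:00:fd
normalize_mac FE:FD:C0:A8:00:FD    # prints fe:fd:c0:a8:00:fd
```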

We can check our work by trying to ping an outside host again from the UML instance, which still has the spoofed MAC:

UML# ping -c 2 192.168.0.10

PING 192.168.0.10 (192.168.0.10) 56(84) bytes of data.

From 192.168.0.253 icmp_seq=0 Destination Host Unreachable

From 192.168.0.253 icmp_seq=1 Destination Host Unreachable

--- 192.168.0.10 ping statistics ---