A Bit of History

I started working on UML in earnest in February 1999 after having the idea that porting Linux to itself might be practical. I tossed the idea around in the back of my head for a few months in late 1998 and early 1999. I was thinking about what facilities it would need from the host and whether the system call interface provided by Linux was rich enough to provide those facilities. Ultimately, I decided it probably was, and in the cases where I wasn't sure, I could think of workarounds.

So, around February, I pulled a copy of the 2.0.32 kernel tree off of a Linux CD (probably a Red Hat source CD) because it was too painful to try to download it through my dialup. Within the resulting kernel tree, I created the directories my new port was going to need without putting any files in them. This is the absolute minimum amount of infrastructure you need for a new port. With the directories present, the kernel build process can descend into them and try to build what's there.

Needless to say, with nothing in those directories, the build didn't even start to work. I needed to add the necessary build infrastructure, such as Makefiles. So, I added the minimal set of things needed to get the kernel build to continue and looked at what failed next. Missing were a number of header files used by the generic (hardware-independent) portions of the kernel that the port needs to provide. I created them as empty files, so that the #include preprocessor directives would at least succeed, and proceeded onward.

At this point, the kernel build started complaining about missing macros, variables, and functions: the things that should have been present in my empty header files and nonexistent C source files. This told me what I needed to think about implementing. I did so in the same way as before. For the most part, I implemented the functions as stubs that didn't do anything except print an error message. I also started adding real headers, mostly by copying the x86 headers into my include directory and removing the things that had no chance of compiling.

After defining many of these useless procedures, I got the UML build to "succeed." It succeeded in the sense that it produced a program I could run. However, running it caused immediate failures due to the large number of procedures I defined that didn't do what they were supposed to; they did nothing at all except print errors. The utility of these errors is that they told me in what order I had to implement these things for real.

So, for the most part, I plodded along, implementing whatever function printed its name first, making small increments of progress through the boot process with each addition. In some cases, I needed to implement a subsystem, resulting in a related set of functions.

Implementation continued in this vein for a few months, interrupted by about a month of real, paying work. In early June, I got UML to boot a small filesystem up to a login prompt, at which point I could log in and run commands. This may sound impressive, but UML was still bug-ridden and full of design mistakes. These would be rooted out later, but at the time, UML was not much more than a proof of concept.

Because of design decisions made earlier, such fundamental things as shared libraries and the ability to log in on the main console didn't work. I worked around the first problem by compiling a minimal set of tools statically, so they didn't need shared libraries. This minimal set of tools was what I populated my first UML filesystem with. At the time of my announcement, I made this filesystem available for download since it was the only way anyone else was going to get UML to boot.

Because of another design decision, UML, in effect, put itself in the background, making it impossible for it to accept input from the terminal. This became a problem when you tried to log in. I worked around this by writing what amounted to a serial line driver, allowing me to attach to a virtual serial line on which I could log in.

These are two of the most glaring examples of what didn't work at that point. The full list was much longer and included other things such as signal delivery and process preemption. They didn't prevent UML from working convincingly, even though they were fairly fundamental problems, and they would get fixed later.

At the time, Linus was just starting the 2.3 development kernel series. My first "UML-ized" kernel was 2.0.32, which, even at the time, was fairly old. So, I bit the bullet and downloaded a "modern" kernel, which was 2.3.5 or so. This started the process, which continues to this day, of keeping in close touch with the current development kernels (and as of 2.4.0, the stable ones as well).

Development continued, with bugs being fixed, design mistakes rectified (and large pieces of code rewritten from scratch), and drivers and filesystems added. UML spent a longer than usual amount of time being developed out of tree, that is, not integrated into Linus' mainline kernel tree. In part, this was due to laziness. I was comfortable with the development methodology I had fallen into and didn't see much point in changing it.

However, pressure mounted from various sources to get UML into the main kernel tree. Many people wanted to be able to build UML from the kernel tree they downloaded from http://www.kernel.org or got with their distribution. Others, wanting the best for the UML project, saw inclusion in Linus' kernel as being a way of getting some public recognition or as a stamp of approval from Linus, thus attracting more users to UML. More pragmatically, some people, who were largely developers, noted that inclusion in the official kernel would cause updates and bug fixes to happen in UML "automatically." This would happen as someone made a pass over the kernel sources, for example, to change an interface or fix a family of bugs, and would cover UML as part of that pass. This would save me the effort of looking through the patch representing a new kernel release, finding those changes, figuring out the equivalent changes needed in UML, and making them. This had become my habit over the roughly four years of UML development before it was merged by Linus. It had become a routine part of UML development, so I didn't begrudge the time it took, but there is no denying that it did take time that would have been better spent on other things.

So, roughly in the spring of 2002, I started sending updated UML patches to Linus, requesting that they be merged. These were ignored for some months, and I was starting to feel a bit discouraged, when out of the blue, he merged my 2.5.34 patch on September 12, 2002. I had sent the patch earlier to Linus as well as the kernel mailing list and one of my own UML lists, as usual, and had not thought about it further. That day, I was idling on an Internet Relay Chat (IRC) channel where a good number of the kernel developers hang around and talk. Suddenly, Arnaldo Carvalho de Melo (a kernel contributor from Brazil and the CTO of Conectiva, the largest Linux distribution in South America) noticed that Linus had merged my patch into his tree.

The response to this from the other kernel hackers, and a little later, from the UML community and wider Linux community, was gratifyingly positive. A surprisingly (to me) large number of people were genuinely happy that UML had been merged and that, in being merged, it had gotten the recognition they thought it deserved.

At this writing, it is three years later, and UML is still under very active development. There have been ups and downs. Some months after UML was merged, I started finding it hard to get Linus to accept updated patches. After a number of ignored patches, I started maintaining UML out of tree again, with the effect that the in-tree version of UML started to bit-rot. It stopped compiling because no one was keeping it up to date with changes to internal kernel interfaces, and of course bugs stopped being fixed because my fixes weren't being merged by Linus.

Late in 2004, an energetic young Italian hacker named Paolo Giarrusso got Andrew Morton, Linus' second-in-command, to include UML in his tree. The so-called "-mm" tree is a sort of purgatory for kernel patches. Andrew merges patches that may or may not be suitable for Linus' kernel in order to give them some wider exposure and see if they are suitable. Andrew took patches representing the current UML at the time from Paolo, and I followed that up with some more patches. Presently, Andrew forwarded those patches, along with many others, to Linus, who included them in his tree. All of a sudden, UML was up to date in the official kernel tree, and I had a reliable conduit for UML updates.

I fed a steady stream of patches through this conduit, and by the time of the 2.6.9 release, you could build a working UML from the official tree, and it was reasonably up to date.

Throughout this period, I had been working on UML on a volunteer basis. I took enough contracting work to keep the bills paid and the cats fed. Primarily, this was spending a day a week at the Institute for Security Technology Studies at Dartmouth College, in northern New Hampshire, about an hour from my house. This changed around May and June of 2004, when, nearly simultaneously, I got job offers from Red Hat and Intel. Both were very generous, offering to have me spend my time on UML, with no requirements to move. I ultimately accepted Intel's offer and have been an Intel employee in the Linux OS group since.

Coincidentally, the job offers came on the fifth anniversary of UML's first public announcement. So, in five years, UML went from nothing to a fully supported part of the official Linux kernel.

What Is UML Used For?

During the five years since UML began, I have seen steady growth in the UML user base and in the number and variety of applications and uses for UML. My users have been nothing if not inventive, and I have seen uses for UML that I would never have thought of.

Server Consolidation

Naturally, the most common applications of UML are the obvious ones. Virtualization has become a hot area of the computer industry, and UML is being used for the same things as other virtualization technologies. Server consolidation is a major one, both internally within organizations and externally between them. Internal consolidation usually takes the form of moving several physical servers into the same number of virtual machines running on a single physical host. External consolidation is usually an ISP or hosting company offering to rent UML instances to the public just as they rent physical servers. Here, multiple organizations end up sharing physical hardware with each other.

The main attraction is cost savings. Computer hardware has become so powerful and so cheap that the old model of one service, or maybe two, per machine now results in hardware that is almost totally idle. There is no technical reason that many services, and their data and configurations, couldn't be copied onto a single server. However, it is easier in many cases to copy each entire server into a virtual machine and run them all unchanged on a single host. It is less risky since the configuration of each is the same as on the physical server, so moving it poses no chance of upsetting an already-debugged environment.

In other cases, virtual servers may offer organizational or political benefits. Different services may be run by different organizations, and putting them on a single physical server would require giving the root password to each organization. The owner of the hardware would naturally tend to feel queasy about this, as would any given organization with respect to the others. A virtual server neatly solves this by giving each service its own virtual machine with its own root password. Having root privileges in a virtual machine in no way requires root privileges on the host. Thus, the services are isolated from the physical host, as well as from each other. If one of them gets messed up, it won't affect the host or the other services.

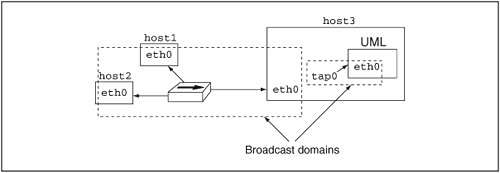

Moving from production to development, UML virtual machines are commonly used to set up and test environments before they go live in production. Any type of environment from a single service running on a single machine to a network running many services can be tested on a single physical host. In the latter case, you would set up a virtual network of UMLs on the host, run the appropriate services on the virtual hosts, and test the network to see that it behaves properly.

In a complex situation like this, UML shines because of the ease of setting up and shutting down a virtual network. This is simply a matter of running a set of commands, which can be scripted. Doing this without using virtual machines would require setting up a network of physical machines, which is vastly more expensive in terms of time, effort, space, and hardware. You would have to find the hardware, from systems to network cables, find some space to put it in, hook it all together, install and configure software, and test it all. In addition to the extra time and other resources this takes, compared to a virtual test environment, none of this can be automated.

In contrast, with a UML testbed, this can be completely automated. It is possible, and fairly easy, to fully automate the configuration and booting of a virtual network and the testing of services running on that network. With some work, this can be reduced to a single script that can be run with one command. In addition, you can make changes to the network configuration by changing the scripts that set it up, rather than rewiring and rearranging hardware. Different people can also work independently on different areas of the environment by booting virtual networks on their own workstations. Doing this in a physical environment would require separate physical testbeds for each person working on the project.

Implementing this sort of testbed using UML systems instead of physical ones results in the near-elimination of hardware requirements, much greater parallelism of development and testing, and greatly reduced turnaround time on configuration changes. This can reduce the time needed for testing and improve the quality of the subsequent deployment by increasing the amount and variety of testing that's possible in a virtual environment.

A number of open source projects, and certainly a much larger number of private projects, use UML in this way. Here are a couple that I am aware of.

Openswan (http://www.openswan.org), the open source IPSec project, uses a UML network for nightly regression testing and its kernel development. BusyBox (http://www.busybox.net), a small-footprint set of Linux utilities, uses UML for its testing.

Education

Consider moving the sort of UML setup I just described from a corporate environment to an educational one. Instead of having a temporary virtual staging environment, you would have a permanent virtual environment in which students will wreak havoc and, in doing so, hopefully learn something.

Now, the point of setting up a complicated network with interrelated services running on it is simply to get it working in the virtual environment, rather than to replicate it onto a physical network once it's debugged. Students will be assigned to make things work, and once they do (or don't), the whole thing will be torn down and replaced with the next assignment.

The educational uses of UML are legion, including courses that involve any sort of system administration and many that involve programming. System administration requires the students to have root privileges on the machines they are learning on. Doing this with physical machines on a physical network is problematic, to say the least.

As root, a student can completely destroy the system software (and possibly damage the hardware). With the system on a physical network, a student with privileges can make the network unusable by, wittingly or unwittingly, spoofing IP addresses, setting up rogue DNS or DHCP servers, or poisoning ARP (Address Resolution Protocol) caches on other machines on the network.

These problems all have solutions in a physical environment. Machines can be completely reimaged between boots to undo whatever damage was done to the system software. The physical network can be isolated from any other networks on which people are trying to do real work. However, all this takes planning, setup, time, and resources that just aren't needed when using a UML environment.

The boot disk of a UML instance is simply a file in the host's filesystem. Instead of reimaging the disk of a physical machine between boots, the old UML root filesystem file can be deleted and replaced with a copy of the original. As will be described in later chapters, UML has a technology called COW (Copy-On-Write) files, which allow changes to a filesystem to be stored in a host file separate from the filesystem itself. Using this, undoing changes to a filesystem is simply a matter of deleting the file that contains the changes. Thus, reimaging a UML system takes a fraction of a second, rather than the minutes that reimaging a disk can take.
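The copy-on-write idea can be illustrated with a toy model. This is only a sketch of the concept, assuming a fixed block size and an in-memory table of changed blocks; it is not UML's actual COW file format, and the class and method names are invented for illustration.

```python
BLOCK_SIZE = 512  # illustrative block size, not UML's on-disk layout


class CowOverlay:
    """Toy copy-on-write view over a read-only backing image."""

    def __init__(self, backing: bytes):
        self.backing = backing  # pristine filesystem image, never modified
        self.changed = {}       # block number -> modified block contents

    def read_block(self, n: int) -> bytes:
        # Prefer the private copy if this block was ever written.
        if n in self.changed:
            return self.changed[n]
        start = n * BLOCK_SIZE
        return self.backing[start:start + BLOCK_SIZE]

    def write_block(self, n: int, data: bytes) -> None:
        if len(data) != BLOCK_SIZE:
            raise ValueError("data must be exactly one block")
        # Writes go only to the overlay; the backing image stays untouched.
        self.changed[n] = data

    def discard_changes(self) -> None:
        # The analogue of deleting the file that holds the changes.
        self.changed.clear()


backing = bytes(BLOCK_SIZE * 4)           # a blank four-block "filesystem"
disk = CowOverlay(backing)
disk.write_block(2, b"\xff" * BLOCK_SIZE)
modified = disk.read_block(2)             # sees the written data
disk.discard_changes()
restored = disk.read_block(2)             # back to the original contents
```

Discarding the overlay is cheap because nothing has to be copied back: the original image was never changed in the first place.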

Looking at the network, a virtual network of UMLs is by default isolated from everything else. It takes effort, and privileges on the host, to allow a virtual network to communicate with a physical one. In addition, an isolated physical network is likely to have a group of students on it, so that one sufficiently malign or incompetent student could prevent any of the others from getting anything done. With a UML instance, it is feasible (and the simplest option) to give each student a private network. Then, an incompetent student can't mess up anyone else's network.

UML is also commonly used for learning kernel-level programming. For novice to intermediate kernel programming students, UML is a perfect environment in which to learn. It provides an authentic kernel to modify, with the development and debugging tools that should already be familiar. In addition, the hardware underneath this kernel is virtualized and thus better behaved than physical hardware. Failures will be caused by buggy software, not by misbehaving devices. So, students can concentrate on debugging the code rather than diagnosing broken or flaky hardware.

Obviously, dealing with broken, flaky, slightly out-of-spec, not-quite-standards-compliant devices is an essential part of an expert kernel developer's repertoire. To reach that exalted status, it is necessary to do development on physical machines. But learning within a UML environment can take you most of the way there.

Over the years, I have heard of educational institutions teaching many sorts of Linux administration courses using UML. Some commercial companies even offer system administration courses over the Internet using UML. Each student is assigned a personal UML, which is accessible over the Internet, and uses it to complete the coursework.

Development

Moving from system administration to development, I've seen a number of programming courses that use UML instances. Kernel-level programming is the most obvious place for UMLs. A system-level programming course is similar to a system administration course in that each student should have a dedicated machine. Anyone learning kernel programming is probably going to crash the machine, so you can't really teach such a course on a shared machine.

UML instances have all the advantages already described, plus a couple of bonuses. The biggest extra is that, as a normal process running on the host, a UML instance can be debugged with all the tools that someone learning system development is presumably already familiar with. It can be run under the control of gdb, where the student can set breakpoints, step through code, examine data, and do everything else you can do with gdb. The rest of the Linux development environment works as well with UML as with anything else. This includes gprof and gcov for profiling and test coverage and strace and ltrace for system call and library tracing.

Another bonus is that, for tracking down tricky timing bugs, the debugging tool of last resort, the print statement, can be used to dump data out to the host without affecting the timing of events within the UML kernel. With a physical machine, this ranges from extremely hard to impossible. Anything you do to store information for later retrieval can, and probably will, change the timing enough to obscure the bug you are chasing. With a UML instance, time is virtual, and it stops whenever the virtual machine isn't in the host's userspace, as it is when it enters the host kernel to log data to a file.

A popular use for UML is development for hardware that does not yet exist. Usually, this is for a piece of embedded hardware: an appliance of some sort that runs Linux but doesn't expose it. Developing the software inside UML allows the software and hardware development to run in parallel. Until the actual devices are available, the software can be developed in a UML instance that is emulating the hardware.

Examples of this are hard to come by because embedded developers are notoriously close-lipped, but I know of a major networking equipment manufacturer that is doing development with UML. The device will consist of several systems hooked together with an internal network. This is being simulated by a script that runs a set of UML instances (one per system in the device) with a virtual network running between them and a virtual network to the outside. The software is controlling the instances in exactly the same way that it will control the systems within the final device.

Going outside the embedded device market, UML is used to simulate large systems. A UML instance can have a very large amount of memory, lots of processors, and lots of devices. It can have more of all these things than the host can, making it an ideal way to simulate a larger system than you can buy. In addition to simulating large systems, UML can also simulate clusters. A couple of open source clustering systems and a larger number of cluster components, such as filesystems and heartbeats, have been developed using UML and are distributed in a form that will run within a set of UMLs.

Disaster Recovery Practice

A fourth area of UML use, which is sort of a combination of the previous two, is disaster recovery practice. It's a combination in the sense that this would normally be done in a corporate environment, but the UML virtual machines are used for training.

The idea is that you make a virtual copy of a service or set of services, mess it up somehow, and figure out how to fix it. There will likely be requirements beyond simply fixing what is broken. You may require that the still-working parts of the service not be shut down or that the recovery be done in the least amount of time or with the smallest number of operations.

The benefits of this are similar to those mentioned earlier. Virtual environments are far more convenient to set up, so these sorts of exercises become far easier when virtual machines are available. In many cases, they simply become possible since hardware can't be dedicated to disaster recovery practice. The system administration staff can practice separately at their desks, and, given a well-chosen set of exercises, they can be well prepared when disaster strikes.

The Future

This chapter provided a summary of the present state of UML and its user community. This book will also describe what I have planned for the future of UML and what those plans mean for its users.

Among the plans is a project to port UML into the host kernel so that it runs inside the kernel rather than in a process. With some restructuring of UML, breaking it up into independent subsystems that directly use the resources provided by the host kernel, this in-kernel UML can be used for a variety of resource limitation applications such as resource control and jailing.

This will provide highly customizable jailing, where a jail is constructed by combining the appropriate subsystems into a single package. Processes in such a jail will be confined with respect to the resources controlled by the jail, and otherwise unconfined. This structure of layering subsystems on top of each other has some other advantages as well. It allows them to be nested, so that a user confined within a jail could construct a subjail and put processes inside it. It also allows the nested subsystems to use different algorithms than the host subsystems. So, a workload with unusual scheduling or memory needs could be run inside a jail with algorithms suitable for it.

However, the project I'm most excited about is using UML as a library, allowing other applications to link against it and thereby gain a captive virtual machine. This would have a great number of uses:

Managing an application or service from the inside, by logging in to the embedded UML

Running scripts inside the embedded UML to control, monitor, and extend the application

Using clustering technology to link multiple embedded UMLs into a cluster and use scripts running on this cluster to integrate the applications in ways that are currently not possible

Chapter 2. A Brief Overview of UML

The main focus here will be on the interaction between UML and the host.

For beginners, it is always a puzzle where the host ends and the virtual machine begins.

A virtual machine is usually considered part of the host, since it cannot exist without it.

You can be root inside UML and have no privileges on the host.

When UML is running, it uses host resources as its own.

The root user inside UML has absolute power, but only inside UML.

UML is both a kernel and a process.

A kernel and a process are two different things, so we will look at UML both from the outside and from the inside.

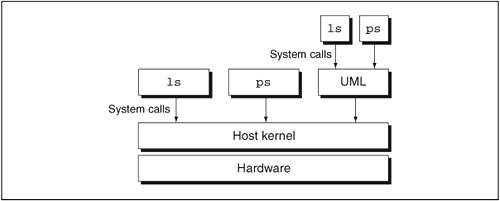

Figure 2.1 shows the relationship between UML and the host.

To the host, UML is an ordinary process.

To the processes running inside UML, UML itself is the kernel.

Processes interact with the kernel through system calls.

Like any other process on the host, UML makes system calls.

Unlike other processes, UML also has its own system call interface.

Let's look at the UML binary, which is usually called linux:

host% ls -l linux

-rwxrw-rw- 2 jdike jdike 23346513 Jan 27 12:16 linux

It is in the standard Linux ELF format:

host% file linux

linux: ELF 32-bit LSB executable, Intel 80386, version 1 (SYSV), \

for GNU/Linux 2.2.5, statically linked, not stripped
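The first part of what file reports can be read directly from the start of the binary. The sketch below checks the ELF magic bytes and decodes the class and byte-order fields of the identification header; the function names are invented for illustration, and it covers only these fields, not the many other things file inspects.

```python
def describe_elf_ident(header: bytes) -> str:
    """Decode the magic, class, and byte-order bytes of an ELF header."""
    if len(header) < 6 or header[:4] != b"\x7fELF":
        return "not ELF"
    # Byte 4 is the class (word size), byte 5 is the data encoding.
    word_size = {1: "32-bit", 2: "64-bit"}.get(header[4], "unknown class")
    byte_order = {1: "LSB", 2: "MSB"}.get(header[5], "unknown byte order")
    return f"ELF {word_size} {byte_order}"


def describe_file(path: str) -> str:
    """Apply the same check to the first bytes of a file on disk."""
    with open(path, "rb") as f:
        return describe_elf_ident(f.read(16))


# A hand-built 16-byte identification block for a 32-bit little-endian file.
sample = b"\x7fELF\x01\x01\x01" + bytes(9)
print(describe_elf_ident(sample))
```

Running describe_file("linux") on a UML binary like the one above would be expected to report a 32-bit LSB ELF file, matching the file output.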

For comparison, here is the kernel currently installed on the host:

host% ls -l /boot/vmlinuz-2.4.26

-rw-r--r-- 1 root root 945800 Sep 18 17:12 /boot/vmlinuz-2.4.26

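The UML binary is not directly comparable to the host kernel image: as the file output shows, it is not stripped, so it still carries its full symbol table.

Now let's look at it again (the much smaller size suggests the symbol table has been stripped):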

host% ls -l linux

-rwxrw-rw- 2 jdike jdike 2236936 Jan 27 15:01 linux

It is still larger than the host kernel.

The configured options are built directly into the UML binary.

Let's check the modules loaded on the host:

host% lsmod

Module Size Used by

usblp 17473 0

parport_pc 31749 1

lp 16713 0

parport 39561 2 parport_pc,lp

autofs4 23493 2

sunrpc 145541 1

...

host% lsmod | awk '{n += $2} END {print n}'

1147092
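The awk one-liner simply adds up the second column of the lsmod output. The same arithmetic can be sketched in Python; the function name is invented, and the sample contains only the six modules visible in the listing above, so its total is smaller than the full figure reported for the host.

```python
def total_module_size(lsmod_output: str) -> int:
    """Sum the Size column of lsmod output, skipping the header line."""
    total = 0
    for line in lsmod_output.splitlines()[1:]:
        fields = line.split()
        # Ignore blank or malformed lines rather than failing on them.
        if len(fields) >= 2 and fields[1].isdigit():
            total += int(fields[1])
    return total


sample = """Module Size Used by
usblp 17473 0
parport_pc 31749 1
lp 16713 0
parport 39561 2 parport_pc,lp
autofs4 23493 2
sunrpc 145541 1
"""

partial_total = total_module_size(sample)
print(partial_total)  # 274530 for the six modules shown
```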

UML's larger size is explained by the fact that what the host loads as modules is built directly into the UML binary.

Thus, UML can be run as an ordinary process, just like bash or ls.

Booting UML for the First Time

Let's run UML. The result is shown below in Figure 2.2.

Figure 2.2. Output from the first boot of UML

Checking for /proc/mm...not found

Checking for the skas3 patch in the host...not found

Checking PROT_EXEC mmap in /tmp...OK

Linux version 2.6.11-rc1-mm1 (jdike@tp.user-mode-linux.org) (gcc version 3.3.2

20031022 (Red Hat Linux 3.3.2-1)) #83 Thu Jan 27 12:16:00 EST 2005

Built 1 zonelists

Kernel command line: root=98:0

PID hash table entries: 256 (order: 8, 4096 bytes)

Dentry cache hash table entries: 8192 (order: 3, 32768 bytes)

Inode-cache hash table entries: 4096 (order: 2, 16384 bytes)

Memory: 29368k available

Mount-cache hash table entries: 512 (order: 0, 4096 bytes)

Checking for host processor cmov support...Yes

Checking for host processor xmm support...No

Checking that ptrace can change system call numbers...OK

Checking syscall emulation patch for ptrace...missing

Checking that host ptys support output SIGIO...Yes

Checking that host ptys support SIGIO on close...No, enabling workaround

Checking for /dev/anon on the host...Not available (open failed with errno 2)

NET: Registered protocol family 16

mconsole (version 2) initialized on /home/jdike/.uml/3m3vDd/mconsole

VFS: Disk quotas dquot_6.5.1

Dquot-cache hash table entries: 1024 (order 0, 4096 bytes)

io scheduler noop registered

io scheduler anticipatory registered

io scheduler deadline registered

io scheduler cfq registered

NET: Registered protocol family 2

IP: routing cache hash table of 512 buckets, 4Kbytes

TCP established hash table entries: 2048 (order: 2, 16384 bytes)

TCP bind hash table entries: 2048 (order: 1, 8192 bytes)

TCP: Hash tables configured (established 2048 bind 2048)

NET: Registered protocol family 1

NET: Registered protocol family 17

Initialized stdio console driver

Console initialized on /dev/tty0

Initializing software serial port version 1

VFS: Waiting 19sec for root device...

VFS: Waiting 18sec for root device...

VFS: Waiting 17sec for root device...

VFS: Waiting 16sec for root device...

VFS: Waiting 15sec for root device...

VFS: Waiting 14sec for root device...

VFS: Waiting 13sec for root device...

VFS: Waiting 12sec for root device...

VFS: Waiting 11sec for root device...

VFS: Waiting 10sec for root device...

VFS: Waiting 9sec for root device...

VFS: Waiting 8sec for root device...

VFS: Waiting 7sec for root device...

VFS: Waiting 6sec for root device...

VFS: Waiting 5sec for root device...

VFS: Waiting 4sec for root device...

VFS: Waiting 3sec for root device...

VFS: Waiting 2sec for root device...

VFS: Waiting 1sec for root device...

VFS: Cannot open root device "98:0" or unknown-block(98,0)

Please append a correct "root=" boot option

Kernel panic - not syncing: VFS: Unable to mount root fs on unknown-block(98,0)

EIP: 0023:[<a015a751>] CPU: 0 Not tainted ESP: 002b:40001fa0 EFLAGS: 00000206

Not tainted

EAX: 00000000 EBX: 00002146 ECX: 00000013 EDX: 00002146

ESI: 00002145 EDI: 00000000 EBP: 40001fbc DS: 002b ES: 002b

Call Trace:

a0863af0: [<a0030446>] printk+0x12/0x14

a0863b00: [<a003ff32>] notifier_call_chain+0x22/0x40

a0863b30: [<a002f9f2>] panic+0x56/0x108

a0863b40: [<a003c0f6>] msleep+0x42/0x4c

a0863b50: [<a0002d96>] mount_block_root+0xd6/0x188

a0863bb0: [<a0002e9c>] mount_root+0x54/0x5c

a0863bc0: [<a0002f07>] prepare_namespace+0x63/0xa8

a0863bd0: [<a0002ebb>] prepare_namespace+0x17/0xa8

a0863bd4: [<a000e190>] init+0x0/0x108

a0863be4: [<a000e190>] init+0x0/0x108

a0863bf0: [<a000e291>] init+0x101/0x108

a0863c00: [<a0027131>] run_kernel_thread+0x39/0x40

a0863c18: [<a000e190>] init+0x0/0x108

a0863c28: [<a0027117>] run_kernel_thread+0x1f/0x40

a0863c50: [<a0013211>] unblock_signals+0xd/0x10

a0863c70: [<a002c51c>] finish_task_switch+0x24/0xa4

a0863c84: [<a000e190>] init+0x0/0x108

a0863c90: [<a002c5ad>] schedule_tail+0x11/0x124

a0863cc4: [<a000e190>] init+0x0/0x108

a0863cd0: [<a001ad58>] new_thread_handler+0xb0/0x104

a0863cd4: [<a000e190>] init+0x0/0x108

a0863d20: [<a015a508>] __restore+0x0/0x8

a0863d60: [<a015a751>] kill+0x11/0x20


The output resembles what you see when Linux boots, and what dmesg usually prints afterward.

In this case, the boot did not complete successfully.

UML does not need the same full set of drivers as the host, so the details of the output differ.

UML is also a different architecture from the kernel running on the host.

Let's take a closer look.

Checking for /proc/mm...not found

Checking for the skas3 patch in the host...not found

Checking PROT_EXEC mmap in /tmp...OK

Here UML is probing what the host provides.

Checking for host processor cmov support...Yes

Checking for host processor xmm support...No

Checking that ptrace can change system call numbers...OK

First the processor is checked, and then whether UML can run on this host at all.

mconsole (version 2) initialized on /home/jdike/.uml/3m3vDd/mconsole

...

Initialized stdio console driver

...

Initializing software serial port version 1

Here UML initializes its drivers. UML uses host resources for its devices, and there are several types of such resources. For example, a block device inside UML can be backed by a host file, so UML needs only one block driver, while the host has several: for IDE disks, SCSI disks, SATA disks, and so on.

The mconsole driver allows the UML instance to be controlled from the host. There are also console and serial line drivers, which can use host pseudo-terminals.

VFS: Waiting 1sec for root device...

VFS: Cannot open root device "98:0" or unknown-block(98,0)

Please append a correct "root=" boot option

Kernel panic - not syncing: VFS: Unable to mount root fs on \

unknown-block(98,0)

At this point the kernel panics, and UML exits. The problem is that UML was not given a root device, so it has no root filesystem to mount.

UML does not need a bootloader such as LILO or GRUB. It is started from the command line, so the host is effectively its bootloader. As a result, we simply end up back at the shell prompt, and no harm is done.

Booting UML Again

We need to point UML at a root device.

As an example filesystem, consider this one:

host% ls -l ~/roots/debian_22

-rw-rw-r-- 1 jdike jdike 1074790400 Jan 27 18:31 \

/home/jdike/roots/debian_22

Run the file command on it:

host% file ~/roots/debian_22

/home/jdike/roots/debian_22: Linux rev 1.0 ext2 filesystem data

Now loopback-mount it:

host# mount ~/roots/debian_22 ~/mnt -o loop

host% ls ~/mnt

bfs boot dev floppy initrd lib mnt root tmp var

bin cdrom etc home kernel lost+found proc sbin usr

When starting UML, we now need to add this option:

ubda=/home/jdike/roots/debian_22

This tells UML that its first block device is ~/roots/debian_22.

We'll add one more command-line parameter:

mem=128M

This makes UML create a 128MB file on the host's filesystem, which it uses as its "physical" memory.

The memory the host actually allocates for UML will be less than 128MB.

The memory UML uses can be swapped out by the host.

In addition, UML can have its own swap.
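
As a rough illustration of how name=value options like these break down, here is a minimal Python sketch. The helper names parse_size and parse_cmdline are hypothetical, and the real parsing happens inside the UML kernel; reading root=98:0 as a major:minor pair is an assumption based on the unknown-block(98,0) message in the failed boot.

```python
def parse_size(text):
    """Convert a size such as '128M' or '512K' to bytes (binary units assumed)."""
    units = {"K": 1024, "M": 1024 ** 2, "G": 1024 ** 3}
    suffix = text[-1].upper()
    if suffix in units:
        return int(text[:-1]) * units[suffix]
    return int(text)


def parse_cmdline(cmdline):
    """Split a command line into a dict of name=value options."""
    options = {}
    for word in cmdline.split():
        name, sep, value = word.partition("=")
        if sep:
            options[name] = value
    return options


opts = parse_cmdline("mem=128M ubda=/home/jdike/roots/debian_22 root=98:0")
mem_bytes = parse_size(opts["mem"])
# Assumption: the root value is a major:minor device number pair.
major, minor = (int(part) for part in opts["root"].split(":"))

print(mem_bytes)       # 134217728
print(opts["ubda"])    # /home/jdike/roots/debian_22
print(major, minor)    # 98 0
```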

So, let's run UML again:

~/linux mem=128M ubda=/home/jdike/roots/debian_22

Figure 2.3 shows the results.

Figure 2.3. Output from the first successful boot of UML

~/linux/2.6/2.6.10 22849: ./linux mem=128M ubda=/home/jdike/roots/debian_22

Checking for /proc/mm...not found

Checking for the skas3 patch in the host...not found

Checking PROT_EXEC mmap in /tmp...OK

Linux version 2.6.11-rc1-mm1 (jdike@tp.user-mode-linux.org) (gcc version 3.3.2

20031022 (Red Hat Linux 3.3.2-1)) #83 Thu Jan 27 12:16:00 EST 2005

Built 1 zonelists

Kernel command line: mem=128M ubda=/home/jdike/roots/debian_22 root=98:0

PID hash table entries: 1024 (order: 10, 16384 bytes)

Dentry cache hash table entries: 32768 (order: 5, 131072 bytes)

Inode-cache hash table entries: 16384 (order: 4, 65536 bytes)

Memory: 126720k available

Mount-cache hash table entries: 512 (order: 0, 4096 bytes)

Checking for host processor cmov support...Yes

Checking for host processor xmm support...No

Checking that ptrace can change system call numbers...OK

Checking syscall emulation patch for ptrace...missing

Checking that host ptys support output SIGIO...Yes

Checking that host ptys support SIGIO on close...No, enabling workaround

Checking for /dev/anon on the host...Not available (open failed with errno 2)

NET: Registered protocol family 16

mconsole (version 2) initialized on /home/jdike/.uml/igpn9r/mconsole

VFS: Disk quotas dquot_6.5.1

Dquot-cache hash table entries: 1024 (order 0, 4096 bytes)

io scheduler noop registered

io scheduler anticipatory registered

io scheduler deadline registered

io scheduler cfq registered

NET: Registered protocol family 2

IP: routing cache hash table of 512 buckets, 4Kbytes

TCP established hash table entries: 8192 (order: 4, 65536 bytes)

TCP bind hash table entries: 8192 (order: 3, 32768 bytes)

TCP: Hash tables configured (established 8192 bind 8192)

NET: Registered protocol family 1

NET: Registered protocol family 17

Initialized stdio console driver

Console initialized on /dev/tty0

Initializing software serial port version 1

ubda: unknown partition table

VFS: Mounted root (ext2 filesystem) readonly.

line_ioctl: tty0: ioctl KDSIGACCEPT called

INIT: version 2.78 booting

Activating swap...

Checking root file system...

Parallelizing fsck version 1.18 (11-Nov-1999)

/dev/ubd0: clean, 9591/131328 files, 64611/262144 blocks

Calculating module dependencies... depmod: get_kernel_syms: Function not

implemented

done.

Loading modules: cat: /etc/modules: No such file or directory

modprobe: Can't open dependencies file /lib/modules/2.6.11-rc1-mm1/modules.dep

(No such file or directory)

Checking all file systems...

Parallelizing fsck version 1.18 (11-Nov-1999)

Setting kernel variables.

Mounting local filesystems...

mount: devpts already mounted on /dev/pts

none on /tmp type tmpfs (rw)

Setting up IP spoofing protection: rp_filter.

Configuring network interfaces: done.

Setting the System Clock using the Hardware Clock as reference...

line_ioctl: tty1: unknown ioctl: 0x4b50

hwclock is unable to get I/O port access: the iopl(3) call failed.

System Clock set. Local time: Thu Jan 27 18:51:28 EST 2005

Cleaning: /tmp /var/lock /var/run.

Initializing random number generator... done.

Recovering nvi editor sessions... done.

INIT: Entering runlevel: 2

Starting system log daemon: syslogd syslogd: /dev/xconsole: No such file or

directory

klogd.

Starting portmap daemon: portmap.

Starting NFS common utilities: statd lockdlockdsvc: Function not implemented

.

Starting internet superserver: inetd.

Starting MySQL database server: mysqld.

Not starting NFS kernel daemon: No exports.

Starting OpenBSD Secure Shell server: sshd.

Starting web server: apache.

/usr/sbin/apachectl start: httpd started

Debian GNU/Linux 2.2 usermode tty0

usermode login:

This time we see the filesystem booting.

Here the boot scripts try to synchronize the internal kernel clock with the system hardware clock:

Setting the System Clock using the Hardware Clock as reference...

line_ioctl: tty1: unknown ioctl: 0x4b50

hwclock is unable to get I/O port access: the iopl(3) call \

failed.

Instead, the time is obtained through the gettimeofday system call.

Next, services such as NFS, MySQL, and Apache are started. Each of them starts just as it would on a physical machine.

The whole boot took the author about 5 seconds, which shows how quickly UML can boot.

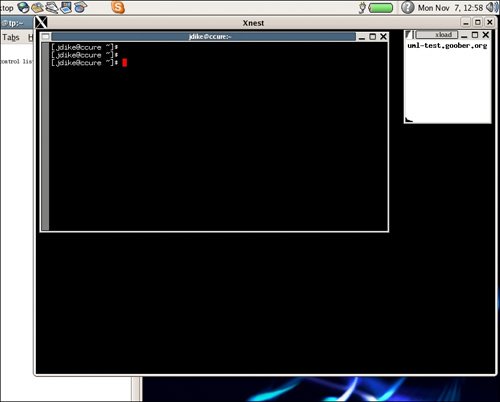

Looking at a UML from the Inside and Outside

Finally, we'll see a login prompt. Actually, I see three on my screen. One is in the xterm window in which I ran UML. The other two are in xterm windows run by UML in order to hold the second console and the first serial line, which are configured to have gettys running on them. We'll log in as root (using the highly secure default root password of root that most of my UML filesystems have) and get a shell:

usermode login: root

Password:

Last login: Thu Jan 27 18:51:35 2005 on tty0

Linux usermode 2.6.11-rc1-mm1 #83 Thu Jan 27 12:16:00 EST 2005 \

i686 unknown

usermode:~#

Again, this is identical to what you'd see if you logged in to a physical machine booted on this filesystem.

Now it's time to start poking around inside this UML and see what it looks like. First, we'll look at what processes are running, as shown in Figure 2.4.

Figure 2.4. Output from ps uax inside UML

usermode:~# ps uax

USER PID %CPU %MEM VSZ RSS TTY STAT START TIME COMMAND

root 1 0.0 0.3 1100 464 ? S 19:17 0:00 init [2]

root 2 0.0 0.0 0 0 ? RWN 19:17 0:00 [ksoftirqd/0]

root 3 0.0 0.0 0 0 ? SW< 19:17 0:00 [events/0]

root 4 0.0 0.0 0 0 ? SW< 19:17 0:00 [khelper]

root 5 0.0 0.0 0 0 ? SW< 19:17 0:00 [kthread]

root 6 0.0 0.0 0 0 ? SW< 19:17 0:00 [kblockd/0]

root 7 0.0 0.0 0 0 ? SW 19:17 0:00 [pdflush]

root 8 0.0 0.0 0 0 ? SW 19:17 0:00 [pdflush]

root 10 0.0 0.0 0 0 ? SW< 19:17 0:00 [aio/0]

root 9 0.0 0.0 0 0 ? SW 19:17 0:00 [kswapd0]

root 96 0.0 0.4 1420 624 ? S 19:17 0:00 /sbin/syslogd

root 98 0.0 0.3 1084 408 ? S 19:17 0:00 /sbin/klogd

daemon 102 0.0 0.3 1200 420 ? S 19:17 0:00 /sbin/portmap

root 105 0.0 0.4 1128 548 ? S 19:17 0:00 /sbin/rpc.statd

root 111 0.0 0.4 1376 540 ? S 19:17 0:00 /usr/sbin/inetd

root 120 0.0 0.6 1820 828 ? S 19:17 0:00 /bin/sh /usr/bin/

mysql 133 0.1 1.2 19244 1540 ? S 19:17 0:00 /usr/sbin/mysqld

mysql 135 0.0 1.2 19244 1540 ? S 19:17 0:00 /usr/sbin/mysqld

mysql 136 0.0 1.2 19244 1540 ? S 19:17 0:00 /usr/sbin/mysqld

root 144 0.9 0.9 2616 1224 ? S 19:17 0:00 /usr/sbin/sshd

root 149 0.0 1.0 2588 1288 ? S 19:17 0:00 /usr/sbin/apache

root 152 0.0 0.9 2084 1220 tty0 S 19:17 0:00 -bash

root 153 0.0 0.3 1084 444 tty1 S 19:17 0:00 /sbin/getty 38400

root 154 0.0 0.3 1084 444 tty2 S 19:17 0:00 /sbin/getty 38400

root 155 0.0 0.3 1084 444 ttyS0 S 19:17 0:00 /sbin/getty 38400

www-data 156 0.0 1.0 2600 1284 ? S 19:17 0:00 /usr/sbin/apache

www-data 157 0.0 1.0 2600 1284 ? S 19:17 0:00 /usr/sbin/apache

www-data 158 0.0 1.0 2600 1284 ? S 19:17 0:00 /usr/sbin/apache

www-data 159 0.0 1.0 2600 1284 ? S 19:17 0:00 /usr/sbin/apache

www-data 160 0.0 1.0 2600 1284 ? S 19:17 0:00 /usr/sbin/apache

root 162 2.0 0.5 2384 736 tty0 R 19:17 0:00 ps uax

usermode:~#

There's not much to comment on except the total normality of this output. What's interesting here is to look at the host. Figure 2.5 shows the corresponding processes on the host.

Figure 2.5. Partial output from ps uax on the host

USER PID %CPU %MEM VSZ RSS TTY STAT START TIME COMMAND

jdike 9938 0.1 3.1 131112 16264 pts/3 R 19:17 0:03 ./linux [ps]

jdike 9942 0.0 3.1 131112 16264 pts/3 S 19:17 0:00 ./linux [ps]

jdike 9943 0.0 3.1 131112 16264 pts/3 S 19:17 0:00 ./linux [ps]

jdike 9944 0.0 0.0 472 132 pts/3 T 19:17 0:00

jdike 10036 0.0 0.5 8640 2960 pts/3 S 19:17 0:00 xterm -T Virtual

jdike 10038 0.0 0.0 1368 232 ? S 19:17 0:00 /usr/lib/uml/port

jdike 10039 0.0 1.5 131092 8076 pts/6 S 19:17 0:00 ./linux [hwclock]

jdike 10095 0.0 0.1 632 604 pts/3 T 19:17 0:00

jdike 10099 0.0 0.0 416 352 pts/3 T 19:17 0:00

jdike 10107 0.0 0.0 428 332 pts/3 T 19:17 0:00

jdike 10113 0.0 0.1 556 516 pts/3 T 19:17 0:00

jdike 10126 0.0 0.0 548 508 pts/3 T 19:17 0:00

jdike 10143 0.0 0.0 840 160 pts/3 T 19:17 0:00

jdike 10173 0.0 0.2 1548 1140 pts/3 T 19:17 0:00

jdike 10188 0.0 0.1 1232 780 pts/3 T 19:17 0:00

jdike 10197 0.0 0.1 1296 712 pts/3 T 19:17 0:00

jdike 10205 0.0 0.0 452 452 pts/3 T 19:17 0:00

jdike 10207 0.0 0.0 452 452 pts/3 T 19:17 0:00

jdike 10209 0.0 0.0 452 452 pts/3 T 19:17 0:00

jdike 10210 0.0 0.5 8640 2960 pts/3 S 19:17 0:00 xterm -T Virtual

jdike 10212 0.0 0.0 1368 232 ? S 19:17 0:00 /usr/lib/uml/port

jdike 10213 0.0 2.9 131092 15092 pts/7 S 19:17 0:00 ./linux [/sbin/ge

jdike 10214 0.0 0.1 1292 688 pts/3 T 19:17 0:00

jdike 10215 0.0 0.1 1292 676 pts/3 T 19:17 0:00

jdike 10216 0.0 0.1 1292 676 pts/3 T 19:17 0:00

jdike 10217 0.0 0.1 1292 676 pts/3 T 19:17 0:00

jdike 10218 0.0 0.1 1292 676 pts/3 T 19:17 0:00

jdike 10220 0.0 0.1 1228 552 pts/3 T 19:17 0:00

Each of the nameless host processes corresponds to an address space inside this UML instance. Except for application and kernel threads, there's a one-to-one correspondence between UML processes and these host processes.

Notice that the properties of the UML processes and the corresponding host processes don't have much in common. All of the host processes are owned by me, whereas the UML processes have various owners, including root. The process IDs are totally different, as are the virtual and resident memory sizes.

This is because the host processes are simply containers for UML address spaces. All of the properties visible inside UML are maintained by UML totally separate from the host. For example, the owner of the host processes will be whoever ran UML. However, many UML processes will be owned by root. These processes have root privileges inside UML, but they have no special privileges on the host. This important fact means that root can do anything inside UML without being able to do anything on the host. A user logged in to a UML as root has no special abilities on the host and, in fact, may not have any abilities at all on the host.

Now, let's look at the memory usage information in /proc/meminfo, shown in Figure 2.6.

Figure 2.6. The UML /proc/meminfo

usermode:~# cat /proc/meminfo

MemTotal: 126796 kB

MemFree: 112952 kB

Buffers: 512 kB

Cached: 7388 kB

SwapCached: 0 kB

Active: 6596 kB

Inactive: 3844 kB

HighTotal: 0 kB

HighFree: 0 kB

LowTotal: 126796 kB

LowFree: 112952 kB

SwapTotal: 0 kB

SwapFree: 0 kB

Dirty: 0 kB

Writeback: 0 kB

Mapped: 5424 kB

Slab: 2660 kB

CommitLimit: 63396 kB

Committed_AS: 23100 kB

PageTables: 248 kB

VmallocTotal: 383984 kB

VmallocUsed: 24 kB

VmallocChunk: 383960 kB

The total amount of memory shown, 126796K, is close to the 128MB we specified on the command line. It's not exactly 128MB because some memory allocated during early boot isn't counted in the total. Going back to the host ps output in Figure 2.5, notice that the linux processes have a virtual size (the VSZ column) of almost exactly 128MB, which is 131072K. The difference, 40K for the process with a VSZ of 131112K, is due to a small amount of memory in the UML binary, which isn't counted as part of its physical memory.
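
The figures quoted here can be checked with a few lines of arithmetic. This is only a sketch; the numbers are copied from Figures 2.5 and 2.6, and the variable names are illustrative.

```python
KIB_PER_MIB = 1024

requested_kib = 128 * KIB_PER_MIB   # mem=128M on the command line
mem_total_kib = 126796              # MemTotal from Figure 2.6
host_vsz_kib = 131112               # VSZ of "./linux [ps]" in Figure 2.5

# Memory that is part of the 128MB but not counted in MemTotal.
uncounted_kib = requested_kib - mem_total_kib

# Virtual size of the host process beyond UML's physical memory.
extra_vsz_kib = host_vsz_kib - requested_kib

print(requested_kib)   # 131072
print(uncounted_kib)   # 4276
print(extra_vsz_kib)   # 40
```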

Now, let's go back to the host ps output and pick one of the UML processes:

jdike 9938 0.1 3.1 131112 16264 pts/3 R 19:17 0:03 \

./linux [ps]

We can look at its open files by looking at the /proc/9938/fd directory, which shows an entry like this:

lrwx------ 1 jdike jdike 64 Jan 28 12:48 3 -> \

/tmp/vm_file-AwBs1z (deleted)

This host file holds the UML "physical" memory and is the same size as that memory (128MB in our case). It is created in /tmp and then deleted. The deletion prevents something else on the host from opening it and corrupting it. However, this has the somewhat undesirable side effect that /tmp can become filled with invisible files, which can confuse people who don't know about this aspect of UML's behavior.
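
The create-then-delete idiom can be demonstrated in a few lines of Python. This is a sketch of the general technique, not UML's own code: the function name and the vm_file- prefix are chosen for illustration, and the file goes in the default temporary directory rather than specifically in /tmp.

```python
import mmap
import os
import tempfile


def make_unlinked_memory_file(size, directory=None):
    """Create a file of `size` bytes, delete its name, and return the open fd."""
    fd, path = tempfile.mkstemp(prefix="vm_file-", dir=directory)
    os.unlink(path)          # the name disappears; the open fd keeps the data alive
    os.ftruncate(fd, size)   # give the file its full size
    return fd, path


fd, path = make_unlinked_memory_file(1024 * 1024)
name_still_exists = os.path.exists(path)
file_size = os.fstat(fd).st_size

# Map the file and use it as ordinary memory.
with mmap.mmap(fd, file_size) as memory:
    memory[:5] = b"hello"
    first_bytes = bytes(memory[:5])

os.close(fd)                 # the space is released once the last fd is closed
print(name_still_exists, file_size, first_bytes)   # False 1048576 b'hello'
```

Because the file has no name, nothing shows up in a directory listing even though it still occupies space, which is the source of the "invisible files" effect.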

To make matters worse, it is recommended for performance reasons to use tmpfs on /tmp. UML performs noticeably better when its memory file is on tmpfs rather than on a disk-based filesystem such as ext3. However, a tmpfs mount is smaller than the disk-based filesystem /tmp would normally be on and thus more likely to run out of space when running multiple UML instances. This can be handled by making the tmpfs mount large enough to hold the maximum physical memories of all the UML instances on the host or by creating a tmpfs mount for each UML instance that is large enough to hold its physical memory.

Take a look at the root directory:

UML# ls /

bfs boot dev floppy initrd lib mnt root tmp var

bin cdrom etc home kernel lost+found proc sbin usr

This looks strikingly similar to the listing of the loopback mount earlier and somewhat different from the host. Here UML has done the equivalent of a loopback mount of the ~/roots/debian_22 file on the host.

Note that making the loopback mount on the host required root privileges, while I ran UML as my normal, nonroot self and accomplished the same thing. You might think this demonstrates that either the requirement of root privileges on the host is unnecessary or that UML is some sort of security hole for not requiring root privileges to do the same thing. Actually, neither is true because the two operations, the loopback mount on the host and UML mounting its root filesystem, aren't quite the same thing. The loopback mount added a mount point to the host's filesystem, while the mount of / within UML doesn't. The UML mount is completely separate from the host's filesystem, so the ability to do this has no security implications.

However, from a different point of view, some security implications do arise. There is no access from the UML filesystem to the host filesystem. The root user inside the UML can do anything on the UML filesystem, and thus, to the host file that contains it, but can't do anything outside it. So, inside UML, even root is jailed and can't break out.

This is a general property of UML: a UML is a full-blown Linux machine with its own resources. With respect to those resources, the root user within UML can do anything. But it can do nothing at all to anything on the host that's not explicitly provided to the UML. We've just seen this with disk space and files, and it's also true for networking, memory, and every other type of host resource that can be made accessible within UML.

Next, we can see some of UML's hardware support by looking at the mount table:

UML# mount

/dev/ubd0 on / type ext2 (rw)

proc on /proc type proc (rw)

devpts on /dev/pts type devpts (rw,gid=5,mode=620)

none on /tmp type tmpfs (rw)

Here we see the ubd device we configured on the command line now mounted as the root filesystem. The other mounts are normal virtual filesystems, procfs and devpts, and a tmpfs mount on /tmp. df will show us how much space is available on the virtual disk:

UML# df

Filesystem 1k-blocks Used Available Use% Mounted on

/dev/ubd0 1032056 242108 737468 25% /

none 63396 0 63396 0% /tmp

Compare the total size of /dev/ubd0 (1032056K) to that of the host file:

-rw-rw-r-- 1 jdike jdike 1074790400 Jan 27 18:31 \

/home/jdike/roots/debian_22

They are nearly the same, with the difference probably being the ext2 filesystem overhead. The entire UML filesystem exists in and is confined to that host file. This is another way in which users inside the UML are confined or jailed. A UML user has no way to consume more disk space than is in that host file.
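
To put a number on "nearly the same", here is a short calculation using the sizes shown above. It is a sketch only; attributing the whole gap to filesystem overhead is the text's own guess, not something the code verifies.

```python
host_file_bytes = 1074790400   # size of ~/roots/debian_22 from ls -l
df_total_kib = 1032056         # 1k-blocks reported by df for /dev/ubd0

host_file_kib = host_file_bytes // 1024
gap_kib = host_file_kib - df_total_kib
gap_percent = 100 * gap_kib / host_file_kib

print(host_file_kib)           # 1049600
print(gap_kib)                 # 17544
print(round(gap_percent, 2))   # 1.67
```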

However, on the host, it is possible to extend the filesystem file, and the extra space becomes available to UML. In Chapter 6 we will see exactly how this is done, but for now, it's just important to note that this is a good example of how much more flexible virtual hardware is in comparison to physical hardware. Try adding extra space to a physical disk or a physical disk partition. You can repartition the disk in order to extend a partition, but that's a nontrivial, angst-ridden operation that potentially puts all of the data on the disk at risk if you make a mistake. You can also add a new volume to the volume group you wish to increase, but this requires that the volume group be set up beforehand and that you have a spare partition to add to it. In comparison, extending a file using dd is a trivial operation that can be done as a normal user, doesn't put any data at risk except that in the file, and doesn't require any prior setup.
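
As a small illustration of how little is involved in growing a file, the following Python sketch extends a throwaway file as an ordinary user. It uses os.truncate rather than dd, the helper name is hypothetical, and it only grows the file itself; making a filesystem inside the file use the new space is a separate step that the text defers to Chapter 6.

```python
import os
import tempfile


def extend_file(path, extra_bytes):
    """Grow the file at `path` by `extra_bytes`, leaving existing data untouched."""
    new_size = os.path.getsize(path) + extra_bytes
    os.truncate(path, new_size)   # the added region reads back as zeros
    return new_size


# Demonstrate on a throwaway file rather than a real filesystem image.
with tempfile.NamedTemporaryFile(delete=False) as image:
    image.write(b"existing data")
    image_path = image.name

new_size = extend_file(image_path, 1024 * 1024)
with open(image_path, "rb") as image:
    head = image.read(13)

os.remove(image_path)
print(new_size, head)   # 1048589 b'existing data'
```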

We can poke around /proc some more to compare and contrast this virtual machine with the physical host it's running on. For some similarities, let's look at /proc/filesystems:

UML# more /proc/filesystems

nodev sysfs

nodev rootfs

nodev bdev

nodev proc

nodev sockfs

nodev pipefs

nodev futexfs

nodev tmpfs

nodev eventpollfs

nodev devpts

reiserfs

ext3

ext2

nodev ramfs

nodev mqueue

There's no sign of any UML oddities here at all. The reason is that the filesystems are not hardware dependent. Anything that doesn't depend on hardware will be exactly the same in UML as on the host. This includes things such as virtual devices (e.g., pseudo-terminals, loop devices, and TUN/TAP network interfaces) and network protocols, as well as the filesystems.
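
One way to compare this listing with the host's is to split it into filesystems marked nodev, which do not need a block device, and the rest. The sketch below does that for the output shown above; the function name is made up for the example.

```python
PROC_FILESYSTEMS = """\
nodev sysfs
nodev rootfs
nodev bdev
nodev proc
nodev sockfs
nodev pipefs
nodev futexfs
nodev tmpfs
nodev eventpollfs
nodev devpts
reiserfs
ext3
ext2
nodev ramfs
nodev mqueue
"""


def split_filesystems(text):
    """Return (nodev filesystems, block-backed filesystems) from the listing."""
    nodev, block_backed = [], []
    for line in text.splitlines():
        fields = line.split()
        if not fields:
            continue
        if fields[0] == "nodev":
            nodev.append(fields[1])
        else:
            block_backed.append(fields[0])
    return nodev, block_backed


nodev, block_backed = split_filesystems(PROC_FILESYSTEMS)
print(len(nodev))      # 12
print(block_backed)    # ['reiserfs', 'ext3', 'ext2']
```

Running the same function on the contents of the host's /proc/filesystems would let you compare the two lists directly.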

So, in order to see something different from the host, we have to look at hardware-specific stuff. For example, /proc/interrupts contains information about all interrupt sources on the system. On the host, it contains information about devices such as the timer, keyboard, and disks. In UML, it looks like this:

UML# more /proc/interrupts

CPU0

0: 211586 SIGVTALRM timer

2: 87 SIGIO console, console, console

3: 0 SIGIO console-write, console-write, \

console-write

4: 2061 SIGIO ubd

6: 0 SIGIO ssl

7: 0 SIGIO ssl-write

9: 0 SIGIO mconsole

10: 0 SIGIO winch, winch, winch

11: 56 SIGIO write sigio

The timer, keyboard, and disks are here (entries 0, 2 and 6, and 4, respectively), as are a bunch of mysterious-looking entries. The -write entries stem from a weakness in the host Linux SIGIO support. SIGIO is a signal generated when input is available, or output is possible, on a file descriptor. A process wishing to do interrupt-driven I/O would set up SIGIO support on the file descriptors it's using. An interrupt when input is available on a file descriptor is obviously useful. However, an interrupt when output is possible is also sometimes needed.

If a process is writing to a descriptor, such as one belonging to a pipe or a network socket, faster than the process on the other side is reading it, then the kernel will buffer the extra data. However, only a limited amount of buffering is available. When that limit is reached, further writes will fail, returning EAGAIN. It is necessary to know when some of the data has been read by the other side and writes may be attempted again. Here, a SIGIO signal would be very handy. The trouble is that support of SIGIO when output is possible is not universal. Some IPC mechanisms support SIGIO when input is available, but not when output is possible.

In these cases, UML emulates this support with a separate thread that calls poll to wait for output to become possible on these descriptors, interrupting the UML kernel when this happens. The interrupt this generates is represented by one of the -write interrupts.
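
The behavior being worked around can be reproduced with an ordinary pipe. The Python sketch below is not UML's implementation (which uses a separate thread inside UML); it only shows a nonblocking write failing with EAGAIN once the buffer is full, and poll reporting that output is possible again after the reader drains the pipe. The helper names are illustrative.

```python
import os
import select


def fill_pipe(write_fd, chunk=b"x" * 4096):
    """Write to a nonblocking pipe until the kernel buffer is full (EAGAIN)."""
    written = 0
    while True:
        try:
            written += os.write(write_fd, chunk)
        except BlockingIOError:   # errno EAGAIN: no buffer space left
            return written


def is_writable(write_fd):
    """Ask poll, without blocking, whether output is currently possible."""
    poller = select.poll()
    poller.register(write_fd, select.POLLOUT)
    return any(event & select.POLLOUT for _, event in poller.poll(0))


read_fd, write_fd = os.pipe()
os.set_blocking(write_fd, False)

buffered = fill_pipe(write_fd)
writable_when_full = is_writable(write_fd)

# The reader drains the pipe, so output becomes possible again.
remaining = buffered
while remaining:
    remaining -= len(os.read(read_fd, remaining))
writable_after_read = is_writable(write_fd)

os.close(read_fd)
os.close(write_fd)
print(writable_when_full, writable_after_read)   # False True
```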

The other mysterious entry is the winch interrupt. This appears because UML wants to detect when one of its consoles changes size, as when you resize the xterm in which you ran UML. Obviously this is not a concern for the host, but it is for a virtual machine. Because of the interface for registering for SIGWINCH on a host device, a separate thread is created to receive SIGWINCH, and it interrupts UML itself whenever one comes in. Thus, SIGWINCH looks like a separate device from the point of view of /proc/interrupts.

/proc/cpuinfo is interesting:

UML# more /proc/cpuinfo

processor : 0

vendor_id : User Mode Linux

model name : UML

mode : skas

host : Linux tp.user-mode-linux.org 2.4.27 #6 \

Thu Jan 13 17:06:15 EST 2005 i686

bogomips : 1592.52

Much of the information in the host's /proc/cpuinfo makes no sense in UML. It contains information about the physical CPU, which UML doesn't have. So, I just put in some information about the host, plus some about the UML itself.

Conclusion

At this point, we've seen a UML from both the inside and the outside. We've seen how a UML can use host resources for its hardware and how it's confined to whatever has been provided to it.

A UML is both very similar to and very different from a physical machine. It is similar as long as you don't look at its hardware. When you do, it becomes clear that you are looking at a virtual machine with virtual hardware. However, as long as you stay away from the hardware, it is very hard to tell that you are inside a virtual machine.

Both the similarities and the differences have advantages. Obviously, having a UML run applications in exactly the same way as on the host is critical for it to be useful. In this chapter we glimpsed some of the advantages of virtual hardware. Soon we will see that virtualized hardware can be plugged, unplugged, extended, and managed in ways that physical hardware can't. The next chapter begins to show you what this means.

Logging In as a Normal User

In this chapter we will explore a UML instance in more detail, looking at how it is similar to and how it differs from a physical Linux machine. While doing a set of fairly simple, standard system administration chores in the instance, we will see some UML twists to them. For example, we will add swap space and mount filesystems. The twist is that we will do these things by plugging the required devices into the UML at runtime, from the host, without rebooting the UML.

First, let's log in to the UML instance, as we did in the previous chapter. When the UML boots, we see a login prompt in the window in which we started it. Some xterm windows pop up on the screen, which we ignore. They also contain login prompts. We could log in as root, but let's log in as a normal user, username user, with the very secure password user:

Debian GNU/Linux 2.2 usermode tty1

usermode login: user

Password:

Last login: Sun Dec 22 21:50:44 2002 from uml on pts/0

Linux usermode 2.6.11-rc3-mm1 #2 Tue Feb 8 15:41:40 EST 2005 \

i686 unknown

UML% pwd

/home/user

This is basically the same as a physical system. In this window, we are a normal, unprivileged user, in a normal home directory. We can test our lack of privileges by trying to do something nasty:

UML% rm -f /bin/ls

rm: cannot unlink `/bin/ls': Permission denied

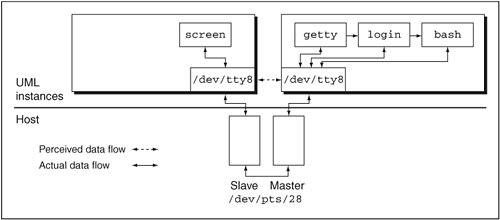

Consoles and Serial Lines

In addition to the xterm consoles that made themselves visible, some others have attached themselves less visibly to other host resources. You can attach UML consoles to almost any host device that can be used for that purpose. For example, they can be (and some, by default, are) attached to host pseudo-terminals. They announce themselves in the kernel log, which we can see by running dmesg:

UML% dmesg | grep "Serial line"

Serial line 0 assigned device '/dev/pts/13'

This tells us that one UML serial line has been configured in /etc/inittab to have a login prompt on it. The serial line has been configured at the "hardware" level to be attached to a host pseudo-terminal, and it has allocated the host's /dev/pts/13.
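
If you want to find the allocated device from a script instead of reading the log by eye, a regular expression over the dmesg output is enough. This is a sketch that assumes the log line has exactly the format shown above; the function name is hypothetical.

```python
import re

SERIAL_LINE_RE = re.compile(r"Serial line (\d+) assigned device '([^']+)'")


def find_serial_lines(kernel_log):
    """Return {serial line number: host device} for every matching log line."""
    return {int(number): device
            for number, device in SERIAL_LINE_RE.findall(kernel_log)}


log = "Serial line 0 assigned device '/dev/pts/13'\n"
print(find_serial_lines(log))   # {0: '/dev/pts/13'}
```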

Now we can run a terminal program, such as screen or minicom, on the host, attach it to /dev/pts/13, and log in to UML on its one serial line. After running

host% screen /dev/pts/13

we see a blank screen session. Hitting return gives us another UML login prompt, as advertised:

Debian GNU/Linux 2.2 usermode ttyS0

usermode login:

Notice the ttyS0 in the banner, in comparison to the tty0 we saw while logging in as root in the previous chapter and the tty1 we just saw while logging in as user. The tty0 and tty1 devices are UML consoles, while ttyS0 is the first serial line. On a physical machine, the consoles are devices that are displayed on the screen, and the serial lines are ports coming out of the back of the box. There's a clear difference between them.

In contrast, there is almost no difference between the consoles and serial lines in UML. They plug themselves into the console and serial line infrastructures, respectively, in the UML kernel. This is the cause of the different device names. However, in all other ways, they are identical in UML. They share essentially all their code, they can be configured to attach to exactly the same host devices, and they behave in the same ways.

In fact, the serial line driver in UML owes its existence to a historical quirk. Because of a limitation in the first implementation of UML, it was impossible to log in on a console in the window in which you ran it. To allow logging in to UML at all, I implemented the serial line driver to connect itself to a host device, and you would attach to this using something like screen.

As time went on and limitations disappeared, I implemented a real console driver. After a while, it dawned on me that there was no real difference between it and the serial line driver, so I started merging the two drivers, making them share more and more code. Now almost the only difference between them is that they plug themselves into different parts of the kernel.

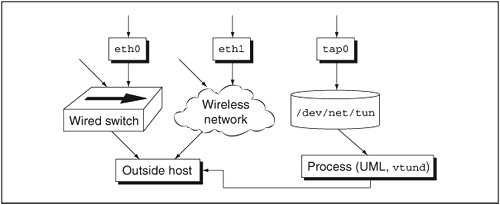

UML consoles and serial lines can be attached to the same devices on the host, and we've seen a console attached to stdin and stdout of the linux process, consoles appearing in xterms, and a serial line attached to a host pseudo-terminal. They can also be attached to host ports, allowing you to telnet to the specified port on the host and log in to the UML from there. This is a convenient way to make a UML accessible from the network without enabling the network within UML.

Finally, UML consoles and serial lines can be attached to host terminals, which can be host consoles, such as /dev/tty*, or the slave side of pseudo-terminals. Attaching a UML console to a host virtual console has the interesting effect of putting the UML login prompt on the host console, making it appear (to someone not paying sufficient attention) to be the host login.

Let's look at some examples. First, let's attach a console to a host port. We need to find an unused console to work with, so let's use the UML management console tool to query the UML configuration:

host% uml_mconsole debian config con0

OK fd:0,fd:1

host% uml_mconsole debian config con1

OK none

host% uml_mconsole debian config con2

OK pts:/dev/pts/10

host% uml_mconsole debian config con3

OK pts

We will cover the full capabilities of uml_mconsole in Chapter 8, but this gives us an initial look at it. The first argument, debian, specifies which UML we wish to talk to. A UML can be named and given a unique machine ID, or umid. When I ran this UML, I added umid=debian to the command line, giving this instance the name debian. uml_mconsole knows how to use this name to communicate with the debian UML.

If you didn't specify the umid on the command line, UML gives itself a random umid. There are a couple of ways to tell what it chose. First, look through the boot output or output from dmesg for a line that looks like this:

mconsole (version 2) initialized on /home/jdike/.uml/3m3vDd/mconsole

In this case, the umid is 3m3vDd. You can communicate with this instance by using that umid on the uml_mconsole command line.

Second, UML puts a directory with the same name as the umid in a special parent directory, by default, ~/.uml. So, you could also look at the subdirectory of your ~/.uml directory for the umid to use.
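
Both ways of finding the umid are easy to script. The following Python sketch assumes the mconsole log line has the format shown above and that each instance has its own subdirectory under ~/.uml; the function names are made up for the example.

```python
import os
import re

MCONSOLE_RE = re.compile(r"mconsole \(version \d+\) initialized on (\S+)")


def umid_from_log(kernel_log):
    """Extract the umid from the mconsole line, or return None if it is absent."""
    match = MCONSOLE_RE.search(kernel_log)
    if match is None:
        return None
    mconsole_path = match.group(1)   # .../.uml/<umid>/mconsole
    return os.path.basename(os.path.dirname(mconsole_path))


def umids_in_directory(uml_dir):
    """List the subdirectories of a ~/.uml style directory, one per instance."""
    if not os.path.isdir(uml_dir):
        return []
    return sorted(entry for entry in os.listdir(uml_dir)
                  if os.path.isdir(os.path.join(uml_dir, entry)))


line = "mconsole (version 2) initialized on /home/jdike/.uml/3m3vDd/mconsole"
print(umid_from_log(line))   # 3m3vDd
```

On a host with running instances, umids_in_directory(os.path.expanduser("~/.uml")) would list the candidates.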

The rest of the uml_mconsole command line is the command to send to the specified UML. In this case, we are asking for the configurations of the first few consoles. Console names start with con; serial line names begin with ssl.

I will describe as much of the output format as needed here; Figure 3.1 contains a more complete and careful description.

Figure 3.1. Detailed description of UML console and serial line configuration

A UML console or serial line configuration can consist of separate input and output configurations, or a single configuration for both. If both are present, they are separated by a comma. For example, fd:0,fd:1 specifies that console input comes from UML's file descriptor 0 and that output goes to file descriptor 1. In contrast, fd:3 specifies that both input and output are attached to file descriptor 3, which should have been set up on the UML command line with something like 3<>filename.

A single device configuration consists of a device type (fd in the examples above) and device-specific information separated by a colon. The possible device types and additional information are as follows.

fd

A host file descriptor belonging to the UML process; specify the file descriptor number after the colon.

pty

A BSD pseudo-terminal; specify the /dev/ptyxx name of the pseudo-terminal you wish to attach the console to. To access it, you will attach a terminal program, such as screen or minicom, to the corresponding /dev/ttyxx file.

pts

A devpts pseudo-terminal; there is no pts-specific data you need to add. In order to connect to it, you will need to find which pts device it allocated by reading the UML kernel log through dmesg or by using uml_mconsole to query the configuration.

port

A host port; specify the port number. You access the port by telnetting to it. If you're on the host, you will telnet to localhost:

host% telnet localhost port-number

You can also telnet to that port from another machine on the network:

host% telnet uml-host port-number

xterm

No extra information needed. This will display an xterm on your screen with the console in it. UML needs a valid DISPLAY environment variable and xterm installed on the host, so this won't work on headless servers. This is the default for consoles other than console 0, so for headless servers, you will need to change this.

null

No extra information needed. This makes the console available inside UML, but output is ignored and there is never any input. This would be very similar to attaching the console to the host's /dev/null.

none

No extra information needed. This removes the device from UML, so that attempts to access it will fail with "No such device."

When you request configuration information for a pts console through uml_mconsole, it reports the actual device that it allocated after the colon, as follows:

host% uml_mconsole debian config con2

OK pts:/dev/pts/10

The syntax for specifying console and serial line configurations is the same on the UML and uml_mconsole command lines, except that the UML command line allows giving all devices the same configuration. A specific console or serial line is specified as con or ssl followed by the device number, as in con3.

On the UML command line, all consoles or serial lines may be given the same configuration with just con=configuration or ssl=configuration.

Any specific device configurations that overlap this will override it. So

con=pts con0=fd:0,fd:1

attaches all consoles to pts devices, except for the first one, which is attached to stdin and stdout.
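The override rule can be sketched in a few lines of shell. This only illustrates the precedence described above and is not how UML parses its command line; effective_config is a hypothetical helper, and UML's built-in defaults are not modeled.

```shell
# Hypothetical helper: print the configuration that applies to one device,
# given a list of command-line style arguments.
# $1 = device class (con or ssl), $2 = device number, rest = arguments
effective_config() {
    class=$1
    number=$2
    shift 2
    general=""
    specific=""
    have_specific=no
    for arg in "$@"; do
        case $arg in
            "$class$number="*)      # e.g. con0=fd:0,fd:1
                specific=${arg#*=}
                have_specific=yes
                ;;
            "$class="*)             # e.g. con=pts, applies to every device
                general=${arg#*=}
                ;;
        esac
    done
    # A device-specific configuration wins over the general one.
    if [ "$have_specific" = yes ]; then
        echo "$specific"
    else
        echo "$general"
    fi
}

effective_config con 0 con=pts con0=fd:0,fd:1
effective_config con 2 con=pts con0=fd:0,fd:1
```

The first call prints the fd configuration for console 0, and the second prints pts, since console 2 has no configuration of its own.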

Console input and output can be specified separately. They are completely independent; the host device types don't even need to match. For example, giving the second serial line the configuration

pts,xterm

will attach its input to a host pts device and its output to an xterm. The effect of this is that when you attach screen or another terminal program to the host pts device, that's the input to the serial line. No output will appear in screen; that will all be directed to the xterm. Most input will also appear in the xterm because that is echoed in the shell.

This can have unexpected effects. Repeating a configuration for both the input and output will, in some cases, attach them to distinct host devices of the same type. For example, a console configuration of

xterm,xterm

will create two xterms: one will accept console input, and the other will display the console's output. The same is true for pts.

Looking at the output about the UML configuration, we see an OK on each response, which means that the command succeeded in communicating with the UML and getting a response. The con0 response says that console 0 is attached to stdin and stdout. This bears some explaining, so let's pull apart that response. There are two pieces to it, fd:0 and fd:1, separated by a comma. In a comma-separated configuration like this, the first part refers to input to the console (or serial line), and the second part refers to output from it.

The fd:0 part also has two pieces, fd and 0, separated by a colon. fd says that the console input is to be attached to a file descriptor of the linux process, and 0 says that file descriptor will be stdin, file descriptor zero. Similarly, the output is specified to be file descriptor one, stdout.

When the console input and output go to the same device, as we can see with con2 being attached to pts:/dev/pts/10, input and output are not specified separately. There is only a single colon-separated device description. As you might have guessed, pts refers to a devpts pseudo-terminal, and /dev/pts/10 tells you specifically which pseudo-terminal the console is attached to.
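The way these responses pull apart can be sketched as a small shell function. This is only an illustration of the comma and colon rules just described; describe_config and describe_half are hypothetical helpers and not part of UML or uml_mconsole.

```shell
# Hypothetical helper: split one device description such as "fd:0" or "pts"
# into its device type and optional device-specific data.
# $1 = label to print, $2 = device description
describe_half() {
    half_type=${2%%:*}              # text before the first colon
    half_data=""
    case $2 in
        *:*) half_data=${2#*:} ;;   # text after the first colon, if any
    esac
    echo "$1 type=$half_type data=$half_data"
}

# Hypothetical helper: split a full configuration into input and output.
describe_config() {
    case $1 in
        *,*)    # separate input and output descriptions
            describe_half input "${1%%,*}"
            describe_half output "${1#*,}"
            ;;
        *)      # one description shared by input and output
            describe_half both "$1"
            ;;
    esac
}

describe_config fd:0,fd:1
describe_config pts:/dev/pts/10
describe_config none
```

For fd:0,fd:1 this prints one line for the input half and one for the output half; for pts:/dev/pts/10 it prints a single line with the device type and the allocated device.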

The con1 configuration is one we haven't seen before. It simply says that the console doesn't exist; there is no such device.

The configuration for con3 is the one we are looking for. pts says that this is a pts console, and there's no specific pts device listed, so it has not yet been activated by having a UML getty running on it. We will reconfigure this one to be attached to a host port:

host% uml_mconsole debian config con3=port:9000

OK

port:9000 says that the console should be attached to the host's port 9000, which we will access by telnetting to that port.

We can double-check that the change actually happened:

host% uml_mconsole debian config con3

OK port:9000

So far, so good. Let's try telnetting there now:

host% telnet localhost 9000

Trying 127.0.0.1...

telnet: connect to address 127.0.0.1: Connection refused

This failed because UML hasn't run a getty on its console 3. We can fix this by editing its /etc/inittab. Looking there on my machine, I see:

#3:2345:respawn:/sbin/getty 38400 tty3

I had enabled this one in the past but since disabled it. You may not have a tty3 entry at all. You want to end up with a line that looks like this:

3:2345:respawn:/sbin/getty 38400 tty3
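If you would rather script the change than edit by hand, something like the following sketch would do it. It assumes the disabled entry is simply the wanted line with a leading #; enable_inittab_entry is a hypothetical helper that reads inittab text on standard input and writes the result to standard output, so it never touches the real file by itself.

```shell
# Hypothetical helper: uncomment the inittab entry whose id field is $1.
# Reads inittab text on stdin, writes the modified text to stdout.
enable_inittab_entry() {
    sed "s/^#[[:space:]]*\($1:\)/\1/"
}

# Demonstration on the line shown above rather than on /etc/inittab itself.
printf '%s\n' '#3:2345:respawn:/sbin/getty 38400 tty3' | enable_inittab_entry 3
```

To apply it for real, you would run it with /etc/inittab as input, write the output to a temporary file, check the result, and then move that file into place as root.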

I'll just uncomment mine; you may have to add the line in its entirety, so fire up your favorite editor on /etc/inittab and fix it. Now, tell init it needs to reread the inittab file:

UML# kill -HUP 1

Let's go back to the host and try the telnet again:

host% telnet localhost 9000

Trying 127.0.0.1...

Connected to localhost.

Escape character is '^]'.

Fedora Core release 1 (Yarrow)

Kernel 2.4.27 on an i686

Debian GNU/Linux 2.2 usermode tty3

usermode login:

Here we have the UML's console, as advertised. Notice the discrepancy between the telnet banner and the login banner. Telnet is telling us that we are attaching to a Fedora Core 1 (FC1) system running a 2.4.27 kernel, while login is saying that we are attaching to a Debian system. This is because the host is the FC1 system, and telnetd running on the host and attaching us to the host's port 9000 is telling us about the host. There is some abuse of telnetd's capabilities going on in order to allow the redirection of traffic between the host port and UML, and this is responsible for the confusion.

Now, let's stick a UML console on a host console. First, we need to make sure there's no host getty or login running on the chosen console. Looking at my host's /etc/inittab, I see:

6:2345:respawn:/sbin/mingetty tty6

for the last console, and hitting Ctrl-Alt-F6 to switch to that virtual console confirms that a getty is running on it. I'll comment it out, so it looks like this:

#6:2345:respawn:/sbin/mingetty tty6

I tell init to reread inittab:

host# kill -HUP 1

and switch back to that console to make sure it is not being used by the host any more. I now need to make sure that UML can open it:

host% ls -l /dev/tty6

crw------- 1 root root 4, 6 Feb 17 16:26 /dev/tty6

Only root can read or write the device, so UML can't open it. I'll change the permissions so that UML has both read and write access to it:

host# chmod 666 /dev/tty6
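A quick way to confirm the result is to test the device from the account that runs UML. The sketch below uses only the shell's built-in file tests; can_open_rw is a hypothetical helper, and the answer reflects the user who runs it, so running it as root proves nothing about an unprivileged UML.

```shell
# Hypothetical helper: succeed only if the current user can both read and
# write the given path.
can_open_rw() {
    [ -r "$1" ] && [ -w "$1" ]
}

if can_open_rw /dev/tty6; then
    echo "/dev/tty6 is readable and writable"
else
    echo "/dev/tty6 cannot be opened for both reading and writing"
fi
```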

After you make any similar changes needed on your own machine, we can tell UML to take over the console. We used the UML tty3 for the host port console, so let's look at tty4:

host% uml_mconsole debian config con4

OK pts

So, let's assign con4 to the host's /dev/tty6 in the usual way:

host% uml_mconsole debian config con4=tty:/dev/tty6

OK

After enabling tty4 in the UML /etc/inittab and telling init to reread the file, we should be able to switch to the host's virtual console 6 and see the UML login prompt. Taken to extremes, this can be somewhat mind bending. Applying this technique to the other virtual consoles results in them all displaying UML, not host, login prompts.

For the security conscious, this sort of redirection and fakery can be valuable. It allows potential attacks on the host to be redirected to a jail, where they can be contained, logged, and analyzed. For the rest of us, it serves as an example of the flexibility of the UML consoles.

Now that we've seen all the ways to access our UML console, it's time to stay logged in on the console and see what we can do inside the UML.

Adding Swap Space

UML is currently running everything in the memory that it has been assigned since it has no swap space. Normal Linux machines have some swap, so let's fix that now.

We need some sort of disk to swap onto, and since UML disks are generally host files, we need to make a file on the host to be the swap device:

host% dd if=/dev/zero of=swap bs=1024 seek=$(( 1024 * 1024 )) count=1

1+0 records in

1+0 records out

host% ls -l swap

-rw-rw-rw- 1 jdike jdike 1073742848 Feb 18 12:31 swap

This technique uses dd to create a 1GB sparse file on the host by seeking 1 million 1K blocks and then writing a 1K block of zeros there. The use of sparse files is pretty standard with UML since it allows host disk space to be allocated only when it is needed. So, this swap device file consumes only 1K of disk space, even though it is technically 1GB in length.
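The same technique can be tried at a smaller scale to see the difference between a file's length and the space it occupies. This is a sketch only: make_sparse, apparent_bytes, and allocated_kib are hypothetical helper names, and the allocated figure depends on the host filesystem, so no particular value is promised for it.

```shell
# Hypothetical helper: create a sparse file by seeking past $2 blocks of 1K
# and writing a single 1K block of zeros there.
make_sparse() {
    dd if=/dev/zero of="$1" bs=1024 seek="$2" count=1 2>/dev/null
}

# Length of the file in bytes, as ls -l would report it.
apparent_bytes() {
    wc -c < "$1" | tr -d ' '
}

# Space actually allocated on disk, in 1K units, as reported by du.
allocated_kib() {
    du -k "$1" | cut -f1
}

demo=$(mktemp)
make_sparse "$demo" 1024        # a 1MB hole followed by one 1K block
echo "apparent: $(apparent_bytes "$demo") bytes"
echo "allocated: $(allocated_kib "$demo") KiB"
rm -f "$demo"
```

On a filesystem that supports sparse files, the allocated figure should be far smaller than the apparent length.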

We can see the true size, that is, the actual disk space consumption, of the file by adding -s to the ls command line:

host% ls -ls swap

12 -rw-rw-r-- 1 jdike jdike 1073742848 Oct 27 17:27 swap

The 12 in the first column is the number of disk blocks actually occupied by the file. A disk block is 512 bytes, so this file that looks like it's 1GB in length is taking only 6K of disk space.
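The conversion is simple enough to check with shell arithmetic. The sketch below takes the 512-byte block size from the text as given; the unit that ls -s reports can differ between ls implementations and environments, so treat the block size as an assumption. blocks_to_kib is a hypothetical helper.

```shell
# Hypothetical helper: convert a count of 512-byte disk blocks to kilobytes.
blocks_to_kib() {
    echo $(( $1 * 512 / 1024 ))
}

blocks_to_kib 12    # prints 6
```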

Now, we need to plug this new file into the UML as an additional block device, which we will do with the management console:

host% uml_mconsole debian config ubdb=swap

OK

We can check this by asking for the configuration of ubdb in the same way we asked about consoles earlier:

host% uml_mconsole debian config ubdb

OK /home/jdike/swap

Now, back in the UML, we have a brand-new second block device, so let's set it up for swapping, then swap on it, and look at /proc/meminfo to check our work:

UML# mkswap /dev/ubdb

Setting up swapspace version 1, size = 1073737728 bytes

UML# swapon /dev/ubdb

UML# grep Swap /proc/meminfo

SwapCached: 0 kB

SwapTotal: 1048568 kB

SwapFree: 1048568 kB
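Since SwapTotal and SwapFree are both there, the amount of swap in use is just their difference. A small awk sketch can compute it; swap_used_kb is a hypothetical helper, and it is fed sample text here so that it runs anywhere, although inside the UML you would feed it /proc/meminfo.

```shell
# Hypothetical helper: read /proc/meminfo-style text on stdin and print the
# amount of swap in use, in kB (SwapTotal minus SwapFree).
swap_used_kb() {
    awk '$1 == "SwapTotal:" { total = $2 }
         $1 == "SwapFree:"  { free = $2 }
         END { print total - free }'
}

# Sample input copied from the output above; nothing is swapped out yet.
printf '%s\n' 'SwapCached: 0 kB' 'SwapTotal: 1048568 kB' 'SwapFree: 1048568 kB' | swap_used_kb

# On a live system:  swap_used_kb < /proc/meminfo
```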

Let's further check our work by forcing the new swap device to be used. The following command creates a large amount of data by repeatedly converting the contents of /dev/mem (the UML's memory) into readable hex and feeds that into a little perl script that turns it into a very large string. We will use this string to fill up the system's memory and force it into swap.

UML# while true; do od -x /dev/mem ; done | perl -e 'my $s ; while(<STDIN>){ $s .= $_; } print length($s);'

At the same time, let's log in on a second console and watch the free memory disappear:

UML# while true; do free; sleep 10; done

You'll see the system start with almost all of its memory free:

total used free shared buffers cached

Mem: 126696 21624 105072 0 536 7808

-/+ buffers/cache: 13280 113416

Swap: 1048568 0 1048568

The free memory will start disappearing, until we see a nonzero entry under used for the Swap row:

total used free shared buffers cached

Mem: 126696 124548 2148 0 76 7244

-/+ buffers/cache: 121823 9468

Swap: 1048568 6524 1042044

Here UML is behaving exactly as any physical system would: it is swapping when it is out of memory. Note that the host may have plenty of free memory, but the UML instance is confined to the memory we gave it.

Partitioned Disks

You may have noticed another difference between the way we're using disks in UML and the way they are normally used on a physical machine. We haven't been partitioning them and putting filesystems and swap space on the partitions. This is a consequence of the ease of creating and adding new virtual disks to a virtual machine. With a physical machine, it's much less convenient, and sometimes impossible, to add more disks to the system. Therefore, you want to make the best of what you have, and that means being able to slice a physical disk into partitions that can be treated separately.

When UML was first released, there was no partition support for exactly this reason. I figured there was no need for partitions, given that if you want more disk space in your UML, you just create a new host file for it, and away you go.

This was a mistake. I underestimated the desire of my users to treat their UMLs exactly like their physical machines. In part, this meant they wanted to be able to partition their virtual disks. So, partition support for UML block devices ultimately appeared, and everyone was happy.

However, my original mistake resulted in some naming conventions that can be extremely confusing to a UML newcomer. Initially, UML block devices were referred to by number, for example, ubd0, ubd1, and so on. At first, these numbers corresponded to their minor device numbers, so when you made a device node for ubd1, the command was: