How the Kernel Services Requests

These requests can be divided into two groups: ordinary and privileged.

The following policy is applied when they are handled:

- If the kernel is not busy, it services a privileged request first.
- If a privileged request arrives while the kernel is servicing an ordinary one, the kernel immediately switches to servicing the privileged request.
- If a privileged request arrives while the kernel is handling another privileged request, the kernel switches to the new request and then returns to the previous one.
- An ordinary request that arrives while a privileged request is being handled may be either ignored or queued.

Clearly, the CPU runs either in Kernel Mode or in User Mode. In the second case, the kernel is said to be idle.

Privileged requests are interrupts; ordinary requests are system calls or exceptions raised by processes in User Mode.

A system call is issued with the int $0x80 instruction or the sysenter instruction. These instructions force a switch from User Mode to Kernel Mode.

Kernel Preemption

It is surprisingly hard to give a good definition of kernel preemption. As a first try, we could say that a kernel is preemptive if a process switch may occur while the replaced process is executing a kernel function, that is, while it runs in Kernel Mode. Unfortunately, in Linux (as well as in any other real operating system) things are much more complicated:

- Both in preemptive and nonpreemptive kernels, a process running in Kernel Mode can voluntarily relinquish the CPU, for instance because it has to sleep waiting for some resource. We will call this kind of process switch a planned process switch. However, a preemptive kernel differs from a nonpreemptive kernel in the way a process running in Kernel Mode reacts to asynchronous events that could induce a process switch, for instance, an interrupt handler that awakes a higher priority process. We will call this kind of process switch a forced process switch.
- All process switches are performed by the switch_to macro. In both preemptive and nonpreemptive kernels, a process switch occurs when a process has finished some thread of kernel activity and the scheduler is invoked. However, in nonpreemptive kernels, the current process cannot be replaced unless it is about to switch to User Mode (see the section "Performing the Process Switch" in Chapter 3).

Therefore, the main characteristic of a preemptive kernel is that a process running in Kernel Mode can be replaced by another process while in the middle of a kernel function.

Let's give a couple of examples to illustrate the difference between preemptive and nonpreemptive kernels.

- While process A executes an exception handler (necessarily in Kernel Mode), a higher priority process B becomes runnable. This could happen, for instance, if an IRQ occurs and the corresponding handler awakens process B. If the kernel is preemptive, a forced process switch replaces process A with B. The exception handler is left unfinished and will be resumed only when the scheduler selects process A again for execution. Conversely, if the kernel is nonpreemptive, no process switch occurs until process A either finishes handling the exception or voluntarily relinquishes the CPU.
- For another example, consider a process that executes an exception handler and whose time quantum expires (see the section "The scheduler_tick( ) Function" in Chapter 7). If the kernel is preemptive, the process may be replaced immediately; however, if the kernel is nonpreemptive, the process continues to run until it finishes handling the exception or voluntarily relinquishes the CPU.

The main motivation for making a kernel preemptive is to reduce the dispatch latency of the User Mode processes, that is, the delay between the time they become runnable and the time they actually begin running. Processes performing timely scheduled tasks (such as external hardware controllers, environmental monitors, movie players, and so on) really benefit from kernel preemption, because it reduces the risk of being delayed by another process running in Kernel Mode.

Making the Linux 2.6 kernel preemptive did not require a drastic change in the kernel design with respect to the older nonpreemptive kernel versions. As described in the section "Returning from Interrupts and Exceptions" in Chapter 4, kernel preemption is disabled when the preempt_count field in the thread_info descriptor referenced by the current_thread_info( ) macro is greater than zero. The field encodes three different counters, as shown in Table 4-10 in Chapter 4, so it is greater than zero when any of the following cases occurs:

- The kernel is executing an interrupt service routine.
- The deferrable functions are disabled (always true when the kernel is executing a softirq or tasklet).
- The kernel preemption has been explicitly disabled by setting the preemption counter to a positive value.

The above rules tell us that the kernel can be preempted only when it is executing an exception handler (in particular a system call) and the kernel preemption has not been explicitly disabled. Furthermore, as described in the section "Returning from Interrupts and Exceptions" in Chapter 4, the local CPU must have local interrupts enabled, otherwise kernel preemption is not performed.

A few simple macros listed in Table 5-1 deal with the preemption counter in the preempt_count field.

Table 5-1. Macros dealing with the preemption counter subfield

| Macro | Description |
|---|---|
| preempt_count( ) | Selects the preempt_count field in the thread_info descriptor |
| preempt_disable( ) | Increases by one the value of the preemption counter |
| preempt_enable_no_resched( ) | Decreases by one the value of the preemption counter |
| preempt_enable( ) | Decreases by one the value of the preemption counter, and invokes preempt_schedule( ) if the TIF_NEED_RESCHED flag in the thread_info descriptor is set |
| get_cpu( ) | Similar to preempt_disable( ), but also returns the number of the local CPU |
| put_cpu( ) | Same as preempt_enable( ) |
| put_cpu_no_resched( ) | Same as preempt_enable_no_resched( ) |

The preempt_enable( ) macro decreases the preemption counter, then checks whether the TIF_NEED_RESCHED flag is set (see Table 4-15 in Chapter 4). In this case, a process switch request is pending, so the macro invokes the preempt_schedule( ) function, which essentially executes the following code:

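    /* Preempt only if the counter is zero and local interrupts are enabled */
    if (!current_thread_info()->preempt_count && !irqs_disabled()) {
        /* Mark the switch as a kernel preemption */
        current_thread_info()->preempt_count = PREEMPT_ACTIVE;
        schedule();
        /* Back on the CPU: clear the marker */
        current_thread_info()->preempt_count = 0;
    }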

The function checks whether local interrupts are enabled and the preempt_count field of current is zero; if both conditions are true, it invokes schedule( ) to select another process to run. Therefore, kernel preemption may happen either when a kernel control path (usually, an interrupt handler) is terminated, or when an exception handler reenables kernel preemption by means of preempt_enable( ). As we'll see in the section "Disabling and Enabling Deferrable Functions" later in this chapter, kernel preemption may also happen when deferrable functions are enabled.
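The interplay between the preemption counter and the TIF_NEED_RESCHED flag can be sketched in user space. What follows is a minimal model rather than kernel code: the thread_info_model structure, its need_resched and schedule_calls members, and the model_ function names are all hypothetical, and local interrupts are assumed to be enabled throughout.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical user-space model of the fields discussed above. */
struct thread_info_model {
    int  preempt_count;   /* greater than zero: preemption disabled */
    bool need_resched;    /* stands in for the TIF_NEED_RESCHED flag */
    int  schedule_calls;  /* counts how often the model "switched" */
};

/* Model of preempt_disable(): increase the preemption counter. */
void model_preempt_disable(struct thread_info_model *ti)
{
    ti->preempt_count++;
}

/* Model of preempt_enable_no_resched(): decrease the counter only. */
void model_preempt_enable_no_resched(struct thread_info_model *ti)
{
    assert(ti->preempt_count > 0);
    ti->preempt_count--;
}

/* Model of preempt_enable(): decrease the counter, then "reschedule"
 * if a switch is pending and the counter has dropped to zero.
 * Assumption: local interrupts are enabled. */
void model_preempt_enable(struct thread_info_model *ti)
{
    model_preempt_enable_no_resched(ti);
    if (ti->need_resched && ti->preempt_count == 0) {
        ti->need_resched = false;  /* the pending request is served */
        ti->schedule_calls++;      /* stands in for schedule() */
    }
}
```

In this model, nested calls to model_preempt_disable( ) postpone the pending switch until the outermost model_preempt_enable( ) brings the counter back to zero, which mirrors the nesting behavior described for the real counter.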

We'll conclude this section by noticing that kernel preemption introduces a nonnegligible overhead. For that reason, Linux 2.6 features a kernel configuration option that allows users to enable or disable kernel preemption when compiling the kernel.

5.1.2. When Synchronization Is Necessary

Chapter 1 introduced the concepts of race condition and critical region for processes. The same definitions apply to kernel control paths. In this chapter, a race condition can occur when the outcome of a computation depends on how two or more interleaved kernel control paths are nested. A critical region is a section of code that must be completely executed by the kernel control path that enters it before another kernel control path can enter it.

Interleaving kernel control paths complicates the life of kernel developers: they must apply special care in order to identify the critical regions in exception handlers, interrupt handlers, deferrable functions, and kernel threads. Once a critical region has been identified, it must be suitably protected to ensure that at any time at most one kernel control path is inside that region.

Suppose, for instance, that two different interrupt handlers need to access the same data structure that contains several related member variables, for instance, a buffer and an integer indicating its length. All statements affecting the data structure must be put into a single critical region. If the system includes a single CPU, the critical region can be implemented by disabling interrupts while accessing the shared data structure, because nesting of kernel control paths can only occur when interrupts are enabled.

On the other hand, if the same data structure is accessed only by the service routines of system calls, and if the system includes a single CPU, the critical region can be implemented quite simply by disabling kernel preemption while accessing the shared data structure.

As you would expect, things are more complicated in multiprocessor systems. Many CPUs may execute kernel code at the same time, so kernel developers cannot assume that a data structure can be safely accessed just because kernel preemption is disabled and the data structure is never addressed by an interrupt, exception, or softirq handler.

We'll see in the following sections that the kernel offers a wide range of different synchronization techniques. It is up to kernel designers to solve each synchronization problem by selecting the most efficient technique.

5.1.3. When Synchronization Is Not Necessary

Some design choices already discussed in the previous chapter simplify somewhat the synchronization of kernel control paths. Let us recall them briefly:

- All interrupt handlers acknowledge the interrupt on the PIC and also disable the IRQ line. Further occurrences of the same interrupt cannot occur until the handler terminates.
- Interrupt handlers, softirqs, and tasklets are both nonpreemptable and non-blocking, so they cannot be suspended for a long time interval. In the worst case, their execution will be slightly delayed, because other interrupts occur during their execution (nested execution of kernel control paths).
- A kernel control path performing interrupt handling cannot be interrupted by a kernel control path executing a deferrable function or a system call service routine.
- Softirqs and tasklets cannot be interleaved on a given CPU.
- The same tasklet cannot be executed simultaneously on several CPUs.

Each of the above design choices can be viewed as a constraint that can be exploited to code some kernel functions more easily. Here are a few examples of possible simplifications:

- Interrupt handlers and tasklets need not be coded as reentrant functions.
- Per-CPU variables accessed by softirqs and tasklets only do not require synchronization.
- A data structure accessed by only one kind of tasklet does not require synchronization.

The rest of this chapter describes what to do when synchronization is necessary, i.e., how to prevent data corruption due to unsafe accesses to shared data structures.

5.2. Synchronization Primitives

We now examine how kernel control paths can be interleaved while avoiding race conditions among shared data. Table 5-2 lists the synchronization techniques used by the Linux kernel. The "Scope" column indicates whether the synchronization technique applies to all CPUs in the system or to a single CPU. For instance, local interrupt disabling applies to just one CPU (other CPUs in the system are not affected); conversely, an atomic operation affects all CPUs in the system (atomic operations on several CPUs cannot interleave while accessing the same data structure).

Table 5-2. Various types of synchronization techniques used by the kernel

| Technique | Description | Scope |
|---|---|---|
| Per-CPU variables | Duplicate a data structure among the CPUs | All CPUs |
| Atomic operation | Atomic read-modify-write instruction to a counter | All CPUs |
| Memory barrier | Avoid instruction reordering | Local CPU or All CPUs |
| Spin lock | Lock with busy wait | All CPUs |
| Semaphore | Lock with blocking wait (sleep) | All CPUs |
| Seqlocks | Lock based on an access counter | All CPUs |
| Local interrupt disabling | Forbid interrupt handling on a single CPU | Local CPU |
| Local softirq disabling | Forbid deferrable function handling on a single CPU | Local CPU |
| Read-copy-update (RCU) | Lock-free access to shared data structures through pointers | All CPUs |

Let's now briefly discuss each synchronization technique. In the later section "Synchronizing Accesses to Kernel Data Structures," we show how these synchronization techniques can be combined to effectively protect kernel data structures.

5.2.1. Per-CPU Variables

The best synchronization technique consists in designing the kernel so as to avoid the need for synchronization in the first place. As we'll see, in fact, every explicit synchronization primitive has a significant performance cost.

The simplest and most efficient synchronization technique consists of declaring kernel variables as per-CPU variables. Basically, a per-CPU variable is an array of data structures, one element for each CPU in the system.

A CPU should not access the elements of the array corresponding to the other CPUs; on the other hand, it can freely read and modify its own element without fear of race conditions, because it is the only CPU entitled to do so. This also means, however, that the per-CPU variables can be used only in particular cases, basically, when it makes sense to logically split the data across the CPUs of the system.

The elements of the per-CPU array are aligned in main memory so that each data structure falls on a different line of the hardware cache (see the section "Hardware Cache" in Chapter 2). Therefore, concurrent accesses to the per-CPU array do not result in cache line snooping and invalidation, which are costly operations in terms of system performance.

While per-CPU variables provide protection against concurrent accesses from several CPUs, they do not provide protection against accesses from asynchronous functions (interrupt handlers and deferrable functions). In these cases, additional synchronization primitives are required.

Furthermore, per-CPU variables are prone to race conditions caused by kernel preemption, both in uniprocessor and multiprocessor systems. As a general rule, a kernel control path should access a per-CPU variable with kernel preemption disabled. Just consider, for instance, what would happen if a kernel control path gets the address of its local copy of a per-CPU variable, and then it is preempted and moved to another CPU: the address still refers to the element of the previous CPU.

Table 5-3 lists the main functions and macros offered by the kernel to use per-CPU variables.

Table 5-3. Functions and macros for the per-CPU variables

| Macro or function name | Description |
|---|---|
| DEFINE_PER_CPU(type, name) | Statically allocates a per-CPU array called name of type data structures |
| per_cpu(name, cpu) | Selects the element for CPU cpu of the per-CPU array name |
| __get_cpu_var(name) | Selects the local CPU's element of the per-CPU array name |
| get_cpu_var(name) | Disables kernel preemption, then selects the local CPU's element of the per-CPU array name |
| put_cpu_var(name) | Enables kernel preemption (name is not used) |
| alloc_percpu(type) | Dynamically allocates a per-CPU array of type data structures and returns its address |
| free_percpu(pointer) | Releases a dynamically allocated per-CPU array at address pointer |
| per_cpu_ptr(pointer, cpu) | Returns the address of the element for CPU cpu of the per-CPU array at address pointer |
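The layout behind a per-CPU variable can be illustrated with a small user-space sketch. It is only a model under stated assumptions: NR_CPUS_MODEL, CACHE_LINE_MODEL (64 bytes is assumed, not taken from the text), the hits array, and the per_cpu_model( ) and percpu_total( ) helpers are hypothetical names, and the CPU number is passed in explicitly instead of being read from the hardware.

```c
#include <assert.h>

#define NR_CPUS_MODEL    4    /* hypothetical number of CPUs */
#define CACHE_LINE_MODEL 64   /* assumed cache line size in bytes */

/* One element per CPU. The alignment pads each element to a full
 * cache line, so two CPUs never touch the same line. */
struct percpu_counter_model {
    _Alignas(CACHE_LINE_MODEL) unsigned long value;
};

struct percpu_counter_model hits[NR_CPUS_MODEL];

/* Rough counterpart of per_cpu(name, cpu): select one CPU's element. */
unsigned long *per_cpu_model(int cpu)
{
    assert(cpu >= 0 && cpu < NR_CPUS_MODEL);
    return &hits[cpu].value;
}

/* Sum all elements, e.g. to report a global statistic. */
unsigned long percpu_total(void)
{
    unsigned long sum = 0;
    for (int cpu = 0; cpu < NR_CPUS_MODEL; cpu++)
        sum += hits[cpu].value;
    return sum;
}
```

Each CPU would update only its own element, for example with `*per_cpu_model(cpu) += 1`, and a reader that needs a global figure adds up the elements. The sketch does not model the preemption issue discussed above; in the kernel, get_cpu_var( ) and put_cpu_var( ) cover that.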

5.2.2. Atomic Operations

Several assembly language instructions are of type "read-modify-write", that is, they access a memory location twice, the first time to read the old value and the second time to write a new value.

Suppose that two kernel control paths running on two CPUs try to "read-modify-write" the same memory location at the same time by executing nonatomic operations. At first, both CPUs try to read the same location, but the memory arbiter (a hardware circuit that serializes accesses to the RAM chips) steps in to grant access to one of them and delay the other. However, when the first read operation has completed, the delayed CPU reads exactly the same (old) value from the memory location. Both CPUs then try to write the same (new) value to the memory location; again, the bus memory access is serialized by the memory arbiter, and eventually both write operations succeed. However, the global result is incorrect because both CPUs write the same (new) value. Thus, the two interleaving "read-modify-write" operations act as a single one.
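The lost update described above can be reproduced deterministically by writing out the interleaving by hand. The sketch below is single-threaded on purpose: the two "CPUs" are just two local variables that play the role of registers, and the function names are hypothetical.

```c
#include <assert.h>

/* Both CPUs read before either of them writes: one increment is lost. */
int interleaved_increments(int initial)
{
    int mem = initial;       /* the shared memory location */
    int reg_cpu0 = mem;      /* CPU 0 reads the old value */
    int reg_cpu1 = mem;      /* CPU 1 reads the same old value */
    mem = reg_cpu0 + 1;      /* CPU 0 writes the new value */
    mem = reg_cpu1 + 1;      /* CPU 1 writes the same new value */
    assert(mem == initial + 1);   /* two increments acted as one */
    return mem;
}

/* Each read-modify-write completes before the next one starts. */
int serialized_increments(int initial)
{
    int mem = initial;
    int reg_cpu0 = mem;      /* CPU 0: read, modify, write */
    mem = reg_cpu0 + 1;
    int reg_cpu1 = mem;      /* CPU 1 now sees CPU 0's update */
    mem = reg_cpu1 + 1;
    return mem;
}
```

Starting from 0, the interleaved version ends at 1 while the serialized version ends at 2, which is the difference an atomic read-modify-write instruction is meant to guarantee.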

The easiest way to prevent race conditions due to "read-modify-write" instructions is by ensuring that such operations are atomic at the chip level. Every such operation must be executed in a single instruction without being interrupted in the middle and avoiding accesses to the same memory location by other CPUs. These very small atomic operations can be found at the base of other, more flexible mechanisms to create critical regions.

Let's review 80x86 instructions according to that classification:

- Assembly language instructions that make zero or one aligned memory access are atomic.
- Read-modify-write assembly language instructions (such as inc or dec) that read data from memory, update it, and write the updated value back to memory are atomic if no other processor has taken the memory bus after the read and before the write. Memory bus stealing never happens in a uniprocessor system.
- Read-modify-write assembly language instructions whose opcode is prefixed by the lock byte (0xf0) are atomic even on a multiprocessor system. When the control unit detects the prefix, it "locks" the memory bus until the instruction is finished. Therefore, other processors cannot access the memory location while the locked instruction is being executed.
- Assembly language instructions whose opcode is prefixed by a rep byte (0xf2, 0xf3, which forces the control unit to repeat the same instruction several times) are not atomic. The control unit checks for pending interrupts before executing a new iteration.

When you write C code, you cannot guarantee that the compiler will use an atomic instruction for an operation like a=a+1 or even for a++. Thus, the Linux kernel provides a special atomic_t type (an atomically accessible counter) and some special functions and macros (see Table 5-4) that act on atomic_t variables and are implemented as single, atomic assembly language instructions. On multiprocessor systems, each such instruction is prefixed by a lock byte.

Table 5-4. Atomic operations in Linux

| Function | Description |
|---|---|
| atomic_read(v) | Return *v |
| atomic_set(v,i) | Set *v to i |
| atomic_add(i,v) | Add i to *v |
| atomic_sub(i,v) | Subtract i from *v |
| atomic_sub_and_test(i, v) | Subtract i from *v and return 1 if the result is zero; 0 otherwise |
| atomic_inc(v) | Add 1 to *v |
| atomic_dec(v) | Subtract 1 from *v |
| atomic_dec_and_test(v) | Subtract 1 from *v and return 1 if the result is zero; 0 otherwise |
| atomic_inc_and_test(v) | Add 1 to *v and return 1 if the result is zero; 0 otherwise |
| atomic_add_negative(i, v) | Add i to *v and return 1 if the result is negative; 0 otherwise |
| atomic_inc_return(v) | Add 1 to *v and return the new value of *v |
| atomic_dec_return(v) | Subtract 1 from *v and return the new value of *v |
| atomic_add_return(i, v) | Add i to *v and return the new value of *v |
| atomic_sub_return(i, v) | Subtract i from *v and return the new value of *v |
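The kernel's atomic_t functions cannot be called from an ordinary program, but their behavior can be approximated with the C11 <stdatomic.h> facilities. The sketch below is such an approximation, not the kernel implementation: atomic_model_t and every model_ function are hypothetical names, and model_ref_put( ) is an invented reference-counting use of the dec-and-test idea.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdbool.h>

/* Hypothetical stand-in for the kernel's atomic_t counter. */
typedef struct { atomic_int counter; } atomic_model_t;

int model_atomic_read(atomic_model_t *v)
{
    return atomic_load(&v->counter);
}

void model_atomic_set(atomic_model_t *v, int i)
{
    atomic_store(&v->counter, i);
}

void model_atomic_inc(atomic_model_t *v)
{
    atomic_fetch_add(&v->counter, 1);
}

/* Add i and return the new value (fetch_add returns the old one). */
int model_atomic_add_return(int i, atomic_model_t *v)
{
    return atomic_fetch_add(&v->counter, i) + i;
}

/* Subtract 1 and report whether the result is zero. */
bool model_atomic_dec_and_test(atomic_model_t *v)
{
    return atomic_fetch_sub(&v->counter, 1) == 1;
}

/* Hypothetical use: drop one reference to an object; the caller that
 * gets true held the last reference and may free the object. */
bool model_ref_put(atomic_model_t *refs)
{
    assert(model_atomic_read(refs) > 0);
    return model_atomic_dec_and_test(refs);
}
```

Because the decrement and the test happen in one atomic step, exactly one caller can observe the transition to zero, even if several callers release their references at the same time.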

Another class of atomic functions operates on bit masks (see Table 5-5).

Table 5-5. Atomic bit handling functions in Linux

| Function | Description |
|---|---|
| test_bit(nr, addr) | Return the value of the nrth bit of *addr |
| set_bit(nr, addr) | Set the nrth bit of *addr |
| clear_bit(nr, addr) | Clear the nrth bit of *addr |
| change_bit(nr, addr) | Invert the nrth bit of *addr |
| test_and_set_bit(nr, addr) | Set the nrth bit of *addr and return its old value |
| test_and_clear_bit(nr, addr) | Clear the nrth bit of *addr and return its old value |
| test_and_change_bit(nr, addr) | Invert the nrth bit of *addr and return its old value |
| atomic_clear_mask(mask, addr) | Clear all bits of *addr specified by mask |
| atomic_set_mask(mask, addr) | Set all bits of *addr specified by mask |
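The "test and modify" variants in Table 5-5 can be approximated in user space with the C11 atomic fetch-or and fetch-and operations. This is a sketch under that assumption; the model_ names are hypothetical, and the bit array is limited to a single unsigned long for brevity.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdbool.h>

/* Build the mask for bit nr of a single unsigned long word. */
unsigned long model_bit_mask(unsigned nr)
{
    assert(nr < 8 * sizeof(unsigned long));
    return 1UL << nr;
}

/* Return the current value of bit nr. */
bool model_test_bit(unsigned nr, atomic_ulong *addr)
{
    return (atomic_load(addr) & model_bit_mask(nr)) != 0;
}

/* Atomically set bit nr and return its old value. */
bool model_test_and_set_bit(unsigned nr, atomic_ulong *addr)
{
    unsigned long mask = model_bit_mask(nr);
    return (atomic_fetch_or(addr, mask) & mask) != 0;
}

/* Atomically clear bit nr and return its old value. */
bool model_test_and_clear_bit(unsigned nr, atomic_ulong *addr)
{
    unsigned long mask = model_bit_mask(nr);
    return (atomic_fetch_and(addr, ~mask) & mask) != 0;
}
```

A typical use is a "claim" flag: of several callers invoking model_test_and_set_bit( ) on the same bit, only the one that gets false back has actually claimed it.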

5.2.3. Optimization and Memory Barriers

When using optimizing compilers, you should never take for granted that instructions will be performed in the exact order in which they appear in the source code. For example, a compiler might reorder the assembly language instructions in such a way to optimize how registers are used. Moreover, modern CPUs usually execute several instructions in parallel and might reorder memory accesses. These kinds of reordering can greatly speed up the program.

When dealing with synchronization, however, reordering instructions must be avoided. Things would quickly become hairy if an instruction placed after a synchronization primitive is executed before the synchronization primitive itself. Therefore, all synchronization primitives act as optimization and memory barriers.

An optimization barrier primitive ensures that the assembly language instructions corresponding to C statements placed before the primitive are not mixed by the compiler with assembly language instructions corresponding to C statements placed after the primitive. In Linux the barrier( ) macro, which expands into asm volatile("":::"memory"), acts as an optimization barrier. The asm instruction tells the compiler to insert an assembly language fragment (empty, in this case). The volatile keyword forbids the compiler to reshuffle the asm instruction with the other instructions of the program. The memory keyword forces the compiler to assume that all memory locations in RAM have been changed by the assembly language instruction; therefore, the compiler cannot optimize the code by using the values of memory locations stored in CPU registers before the asm instruction. Notice that the optimization barrier does not ensure that the executions of the assembly language instructions are not mixed by the CPU; this is a job for a memory barrier.
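A polling loop is a common place where an optimization barrier matters. The sketch below assumes GCC or a compatible compiler (the extended asm syntax is compiler-specific); barrier_model( ), stop_flag, and wait_for_flag( ) are hypothetical names, and the example only constrains the compiler, not the CPU.

```c
#include <assert.h>

/* Same expansion as the barrier( ) macro described above. */
#define barrier_model() __asm__ __volatile__("" ::: "memory")

/* Hypothetical flag that some other context (an interrupt handler,
 * another thread) is expected to set. */
int stop_flag;

/* Poll stop_flag up to max_spins times; return 1 if it was seen set. */
int wait_for_flag(int max_spins)
{
    assert(max_spins >= 0);
    for (int i = 0; i < max_spins; i++) {
        if (stop_flag)
            return 1;
        /* Without this, the compiler may read stop_flag once and keep
         * it in a register; the "memory" clobber forces a fresh read
         * on the next iteration. */
        barrier_model();
    }
    return 0;
}
```

Without the barrier, an optimizing compiler is allowed to hoist the load of stop_flag out of the loop, which would turn the loop into a check of a stale value.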

A memory barrier primitive ensures that the operations placed before the primitive are finished before starting the operations placed after the primitive. Thus, a memory barrier is like a firewall that cannot be passed by an assembly language instruction.

In the 80x86 processors, the following kinds of assembly language instructions are said to be "serializing" because they act as memory barriers:

- All instructions that operate on I/O ports
- All instructions prefixed by the lock byte (see the section "Atomic Operations")
- All instructions that write into control registers, system registers, or debug registers (for instance, cli and sti, which change the status of the IF flag in the eflags register)
- The lfence, sfence, and mfence assembly language instructions, which have been introduced in the Pentium 4 microprocessor to efficiently implement read memory barriers, write memory barriers, and read-write memory barriers, respectively
- A few special assembly language instructions; among them, the iret instruction that terminates an interrupt or exception handler

Linux uses a few memory barrier primitives, which are shown in Table 5-6. These primitives act also as optimization barriers, because we must make sure the compiler does not move the assembly language instructions around the barrier. "Read memory barriers" act only on instructions that read from memory, while "write memory barriers" act only on instructions that write to memory. Memory barriers can be useful in both multiprocessor and uniprocessor systems. The smp_xxx( ) primitives are used whenever the memory barrier should prevent race conditions that might occur only in multiprocessor systems; in uniprocessor systems, they do nothing. The other memory barriers are used to prevent race conditions occurring both in uniprocessor and multiprocessor systems.

Table 5-6. Memory barriers in Linux

| Macro | Description |
|---|---|
| mb( ) | Memory barrier for MP and UP |
| rmb( ) | Read memory barrier for MP and UP |
| wmb( ) | Write memory barrier for MP and UP |
| smp_mb( ) | Memory barrier for MP only |
| smp_rmb( ) | Read memory barrier for MP only |
| smp_wmb( ) | Write memory barrier for MP only |

The implementations of the memory barrier primitives depend on the architecture of the system. On an 80x86 microprocessor, the rmb( ) macro usually expands into asm volatile("lfence") if the CPU supports the lfence assembly language instruction, or into asm volatile("lock;addl $0,0(%%esp)":::"memory") otherwise. The asm statement inserts an assembly language fragment in the code generated by the compiler and acts as an optimization barrier. The lock; addl $0,0(%%esp) assembly language instruction adds zero to the memory location on top of the stack; the instruction is useless by itself, but the lock prefix makes the instruction a memory barrier for the CPU.

The wmb( ) macro is actually simpler because it expands into barrier( ). This is because existing Intel microprocessors never reorder write memory accesses, so there is no need to insert a serializing assembly language instruction in the code. The macro, however, forbids the compiler from shuffling the instructions.

Notice that in multiprocessor systems, all atomic operations described in the earlier section "Atomic Operations" act as memory barriers because they use the lock byte.
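A typical pairing of a write barrier with a read barrier is the "publish data, then set a flag" pattern. The sketch below expresses it with C11 fences, which is an analogy to smp_wmb( ) and smp_rmb( ) rather than their kernel definitions; msg_data, msg_ready, msg_publish( ), and msg_consume( ) are hypothetical names.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdbool.h>

int msg_data;             /* plain data guarded by the flag */
atomic_bool msg_ready;    /* true once msg_data is valid */

/* Writer: store the data, then a write-side fence, then the flag. */
void msg_publish(int value)
{
    msg_data = value;
    /* Plays the role of a write memory barrier: the data store may
     * not be reordered after the flag store. */
    atomic_thread_fence(memory_order_release);
    atomic_store_explicit(&msg_ready, true, memory_order_relaxed);
}

/* Reader: load the flag, then a read-side fence, then the data.
 * Returns false if nothing has been published yet. */
bool msg_consume(int *out)
{
    assert(out);
    if (!atomic_load_explicit(&msg_ready, memory_order_relaxed))
        return false;
    /* Plays the role of a read memory barrier: the data load may not
     * be reordered before the flag load. */
    atomic_thread_fence(memory_order_acquire);
    *out = msg_data;
    return true;
}
```

The two fences only work as a pair: a reader that sees the flag set is then guaranteed to see the data written before the writer's fence.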

5.2.4. Spin Locks

A widely used synchronization technique is locking. When a kernel control path must access a shared data structure or enter a critical region, it needs to acquire a "lock" for it. A resource protected by a locking mechanism is quite similar to a resource confined in a room whose door is locked when someone is inside. If a kernel control path wishes to access the resource, it tries to "open the door" by acquiring the lock. It succeeds only if the resource is free. Then, as long as it wants to use the resource, the door remains locked. When the kernel control path releases the lock, the door is unlocked and another kernel control path may enter the room.

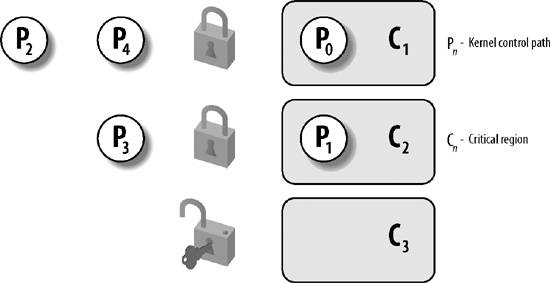

Figure 5-1 illustrates the use of locks. Five kernel control paths (P0, P1, P2, P3, and P4) are trying to access two critical regions (C1 and C2). Kernel control path P0 is inside C1, while P2 and P4 are waiting to enter it. At the same time, P1 is inside C2, while P3 is waiting to enter it. Notice that P0 and P1 could run concurrently. The lock for critical region C3 is open because no kernel control path needs to enter it.

Spin locks are a special kind of lock designed to work in a multiprocessor environment. If the kernel control path finds the spin lock "open," it acquires the lock and continues its execution. Conversely, if the kernel control path finds the lock "closed" by a kernel control path running on another CPU, it "spins" around, repeatedly executing a tight instruction loop, until the lock is released.

The instruction loop of spin locks represents a "busy wait." The waiting kernel control path keeps running on the CPU, even if it has nothing to do besides waste time. Nevertheless, spin locks are usually convenient, because many kernel resources are locked for a fraction of a millisecond only; therefore, it would be far more time-consuming to release the CPU and reacquire it later.

As a general rule, kernel preemption is disabled in every critical region protected by spin locks. In the case of a uniprocessor system, the locks themselves are useless, and the spin lock primitives just disable or enable the kernel preemption. Please notice that kernel preemption is still enabled during the busy wait phase, thus a process waiting for a spin lock to be released could be replaced by a higher priority process.

In Linux, each spin lock is represented by a spinlock_t structure consisting of two fields:

- slock: encodes the spin lock state. The value 1 corresponds to the unlocked state, while every negative value and 0 denote the locked state.
- break_lock: flag signaling that a process is busy waiting for the lock (present only if the kernel supports both SMP and kernel preemption).

Six macros shown in Table 5-7 are used to initialize, test, and set spin locks. All these macros are based on atomic operations; this ensures that the spin lock will be updated properly even when multiple processes running on different CPUs try to modify the lock at the same time.

Table 5-7. Spin lock macros

| Macro | Description |
|---|---|
| spin_lock_init( ) | Set the spin lock to 1 (unlocked) |
| spin_lock( ) | Cycle until spin lock becomes 1 (unlocked), then set it to 0 (locked) |
| spin_unlock( ) | Set the spin lock to 1 (unlocked) |
| spin_unlock_wait( ) | Wait until the spin lock becomes 1 (unlocked) |
| spin_is_locked( ) | Return 0 if the spin lock is set to 1 (unlocked); 1 otherwise |
| spin_trylock( ) | Set the spin lock to 0 (locked), and return 1 if the previous value of the lock was 1; 0 otherwise |

5.2.4.1. The spin_lock macro with kernel preemption

Let's discuss in detail the spin_lock macro, which is used to acquire a spin lock. The following description refers to a preemptive kernel for an SMP system. The macro takes the address slp of the spin lock as its parameter and executes the following actions:

1. Invokes preempt_disable( ) to disable kernel preemption.

2. Invokes the _raw_spin_trylock( ) function, which does an atomic test-and-set operation on the spin lock's slock field; this function executes first some instructions equivalent to the following assembly language fragment:

        movb $0, %al
        xchgb %al, slp->slock

   The xchg assembly language instruction exchanges atomically the content of the 8-bit %al register (storing zero) with the content of the memory location pointed to by slp->slock. The function then returns the value 1 if the old value stored in the spin lock (in %al after the xchg instruction) was positive, the value 0 otherwise.

3. If the old value of the spin lock was positive, the macro terminates: the kernel control path has acquired the spin lock.

4. Otherwise, the kernel control path failed in acquiring the spin lock, thus the macro must cycle until the spin lock is released by a kernel control path running on some other CPU. Invokes preempt_enable( ) to undo the increase of the preemption counter done in step 1. If kernel preemption was enabled before executing the spin_lock macro, another process can now replace this process while it waits for the spin lock.

5. If the break_lock field is equal to zero, sets it to one. By checking this field, the process owning the lock and running on another CPU can learn whether there are other processes waiting for the lock. If a process holds a spin lock for a long time, it may decide to release it prematurely to allow another process waiting for the same spin lock to progress.

6. Executes the wait cycle:

        while (spin_is_locked(slp) && slp->break_lock)
            cpu_relax();

   The cpu_relax( ) macro reduces to a pause assembly language instruction. This instruction has been introduced in the Pentium 4 model to optimize the execution of spin lock loops. By introducing a short delay, it speeds up the execution of code following the lock and reduces power consumption. The pause instruction is backward compatible with earlier models of 80x86 microprocessors because it corresponds to the instruction rep;nop, that is, to a no-operation.

7. Jumps back to step 1 to try once more to get the spin lock.
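The core of the steps above, the atomic exchange on slock followed by a wait cycle, can be sketched in user space with C11 atomics. This is a simplified model, not the kernel macro: spinlock_model_t and the model_ functions are hypothetical names, and the sketch leaves out preempt_disable( ), preempt_enable( ), the break_lock field, and the pause instruction.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdbool.h>

/* Hypothetical model of the slock field: 1 = unlocked, <= 0 = locked. */
typedef struct { atomic_schar slock; } spinlock_model_t;

void model_spin_lock_init(spinlock_model_t *slp)
{
    atomic_init(&slp->slock, 1);
}

bool model_spin_is_locked(spinlock_model_t *slp)
{
    return atomic_load(&slp->slock) <= 0;
}

/* Counterpart of the xchgb step: atomically store 0 and look at the
 * old value; a positive old value means the lock was free. */
bool model_spin_trylock(spinlock_model_t *slp)
{
    return atomic_exchange(&slp->slock, 0) > 0;
}

/* Retry the exchange until it succeeds, waiting in between. */
void model_spin_lock(spinlock_model_t *slp)
{
    while (!model_spin_trylock(slp)) {
        while (model_spin_is_locked(slp))
            ;  /* busy wait; the kernel executes cpu_relax( ) here */
    }
}

void model_spin_unlock(spinlock_model_t *slp)
{
    assert(model_spin_is_locked(slp));
    atomic_store(&slp->slock, 1);
}
```

Note that a failed exchange leaves slock at 0, so the lock stays closed for its owner; only the owner's unlock writes 1 back.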

5.2.4.2. The spin_lock macro without kernel preemption

If the kernel preemption option has not been selected when the kernel was compiled, the spin_lock macro is quite different from the one described above. In this case, the macro yields an assembly language fragment that is essentially equivalent to the following tight busy wait:

1: lock; decb slp->slock

jns 3f

2: pause

cmpb $0,slp->slock

jle 2b

jmp 1b

3:

The decb assembly language instruction decreases the spin lock value; the instruction is atomic because it is prefixed by the lock byte. A test is then performed on the sign flag. If it is clear, it means that the spin lock was set to 1 (unlocked), so normal execution continues at label 3 (the f suffix denotes the fact that the label is a "forward" one; it appears in a later line of the program). Otherwise, the tight loop at label 2 (the b suffix denotes a "backward" label) is executed until the spin lock assumes a positive value. Then execution restarts from label 1, since it is unsafe to proceed without checking whether another processor has grabbed the lock.

5.2.4.3. The spin_unlock macro

The spin_unlock macro releases a previously acquired spin lock; it essentially executes the assembly language instruction:

movb $1, slp->slock

and then invokes preempt_enable( ) (if kernel preemption is not supported, preempt_enable( ) does nothing). Notice that the lock byte is not used because write-only accesses in memory are always atomically executed by the current 80x86 microprocessors.
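For readers more comfortable with C than with assembly, the decrement-based lock and the store-based unlock can be modeled with C11 atomics. The sketch below is an assumption-laden user-space analogue (the names toy_spinlock_t, toy_spin_lock, and toy_spin_unlock are hypothetical), not the code the macros actually expand to.

```c
#include <assert.h>
#include <stdatomic.h>

/* Hypothetical model of the lock byte: 1 = unlocked, 0 or negative = locked. */
typedef struct { atomic_schar slock; } toy_spinlock_t;

static void toy_spin_lock(toy_spinlock_t *l)
{
    for (;;) {
        /* Role of "lock; decb": fetch_sub returns the old value, so the
         * new value is old - 1. A nonnegative result means we own the lock. */
        if (atomic_fetch_sub(&l->slock, 1) - 1 >= 0)
            return;
        /* Label 2: spin with plain reads until the value becomes positive. */
        while (atomic_load(&l->slock) <= 0)
            ;   /* the kernel executes pause here */
        /* Back to label 1: retry, because another CPU may have won the race. */
    }
}

static void toy_spin_unlock(toy_spinlock_t *l)
{
    assert(atomic_load(&l->slock) <= 0);   /* must currently be held */
    atomic_store(&l->slock, 1);            /* role of "movb $1, slp->slock" */
}
```

Note that the unlock path overwrites the byte with 1 rather than incrementing it, which also discards any extra decrements left behind by CPUs that tried and failed to take the lock.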

5.2.5. Read/Write Spin Locks

Read/write spin locks have been introduced to increase the amount of concurrency inside the kernel. They allow several kernel control paths to simultaneously read the same data structure, as long as no kernel control path modifies it. If a kernel control path wishes to write to the structure, it must acquire the write version of the read/write lock, which grants exclusive access to the resource. Of course, allowing concurrent reads on data structures improves system performance.

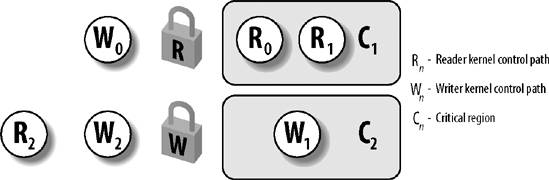

Figure 5-2 illustrates two critical regions (C1 and C2) protected by read/write locks. Kernel control paths R0 and R1 are reading the data structures in C1 at the same time, while W0 is waiting to acquire the lock for writing. Kernel control path W1 is writing the data structures in C2, while both R2 and W2 are waiting to acquire the lock for reading and writing, respectively.

Each read/write spin lock is a rwlock_t structure; its lock field is a 32-bit field that encodes two distinct pieces of information:

A 24-bit counter denoting the number of kernel control paths currently reading the protected data structure. The two's complement value of this counter is stored in bits 0-23 of the field.

An unlock flag that is set when no kernel control path is reading or writing, and clear otherwise. This unlock flag is stored in bit 24 of the field.

Notice that the lock field stores the number 0x01000000 if the spin lock is idle (unlock flag set and no readers), the number 0x00000000 if it has been acquired for writing (unlock flag clear and no readers), and any number in the sequence 0x00ffffff, 0x00fffffe, and so on, if it has been acquired for reading by one, two, or more processes (unlock flag clear and the two's complement on 24 bits of the number of readers). Like the spinlock_t structure, the rwlock_t structure also includes a break_lock field.

The rwlock_init macro initializes the lock field of a read/write spin lock to 0x01000000 (unlocked) and the break_lock field to zero.
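The encoding of the lock field is easier to check with a few helper functions. The following sketch decodes a 32-bit value according to the layout described above; the function and constant names are hypothetical and exist only for illustration.

```c
#include <assert.h>
#include <stdint.h>

#define TOY_RW_BIAS 0x01000000u   /* bit 24: the unlock flag */
#define TOY_RW_MASK 0x00ffffffu   /* bits 0-23: the reader counter */

/* Number of readers, stored as a 24-bit two's complement value. */
static uint32_t toy_rw_readers(uint32_t lock)
{
    return (TOY_RW_BIAS - (lock & TOY_RW_MASK)) & TOY_RW_MASK;
}

/* Nonzero if the unlock flag is set (nobody reads or writes). */
static int toy_rw_is_idle(uint32_t lock)
{
    return (lock & TOY_RW_BIAS) != 0;
}

/* Value of the lock field while n readers (n >= 1) hold the lock. */
static uint32_t toy_rw_encode_readers(uint32_t n)
{
    assert(n >= 1 && n <= TOY_RW_MASK);
    return TOY_RW_BIAS - n;
}
```

With these helpers, 0x00ffffff decodes to one reader and 0x00fffffe to two, while 0x00000000 decodes to zero readers with the unlock flag clear, which is the state of a lock held by a writer.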

5.2.5.1. Getting and releasing a lock for reading

The read_lock macro, applied to the address rwlp of a read/write spin lock, is similar to the spin_lock macro described in the previous section. If the kernel preemption option has been selected when the kernel was compiled, the macro performs the very same actions as those of spin_lock( ), with just one exception: to effectively acquire the read/write spin lock in step 2, the macro executes the _raw_read_trylock( ) function:

int _raw_read_trylock(rwlock_t *lock)

{

atomic_t *count = (atomic_t *)&lock->lock;

atomic_dec(count);

if (atomic_read(count) >= 0)

return 1;

atomic_inc(count);

return 0;

}

The lock field (the read/write lock counter) is accessed by means of atomic operations. Notice, however, that the whole function does not act atomically on the counter: for instance, the counter might change after having tested its value with the if statement and before returning 1. Nevertheless, the function works properly: in fact, the function returns 1 only if the counter was not zero or negative before the decrement, because the counter is equal to 0x01000000 for no owner, 0x00ffffff for one reader, and 0x00000000 for one writer.

If the kernel preemption option has not been selected when the kernel was compiled, the read_lock macro yields the following assembly language code:

movl $rwlp->lock,%eax

lock; subl $1,(%eax)

jns 1f

call __read_lock_failed

1:

where __read_lock_failed( ) is the following assembly language function:

__read_lock_failed:

lock; incl (%eax)

1: pause

cmpl $1,(%eax)

js 1b

lock; decl (%eax)

js __read_lock_failed

ret

The read_lock macro atomically decreases the spin lock value by 1, thus increasing the number of readers. The spin lock is acquired if the decrement operation yields a nonnegative value; otherwise, the __read_lock_failed( ) function is invoked. The function atomically increases the lock field to undo the decrement operation performed by the read_lock macro, and then loops until the field becomes positive (greater than or equal to 1). Next, __read_lock_failed( ) tries to get the spin lock again (another kernel control path could acquire the spin lock for writing right after the cmpl instruction).

Releasing the read lock is quite simple, because the read_unlock macro must simply increase the counter in the lock field with the assembly language instruction:

lock; incl rwlp->lock

to decrease the number of readers, and then invoke preempt_enable( ) to reenable kernel preemption.
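The read-side operations of this section can be tried out in user space. The sketch below mirrors the decrement, test, and undo sequence with C11 atomics; toy_rwlock_t, toy_read_trylock, and toy_read_unlock are hypothetical names, and preemption handling is not modeled.

```c
#include <assert.h>
#include <stdatomic.h>

/* Hypothetical model: lock holds 0x01000000 when idle. */
typedef struct { atomic_int lock; } toy_rwlock_t;

static int toy_read_trylock(toy_rwlock_t *rw)
{
    atomic_fetch_sub(&rw->lock, 1);        /* optimistically add one reader */
    if (atomic_load(&rw->lock) >= 0)
        return 1;                          /* no writer held the lock */
    atomic_fetch_add(&rw->lock, 1);        /* a writer holds it: undo */
    return 0;
}

static void toy_read_unlock(toy_rwlock_t *rw)
{
    assert(atomic_load(&rw->lock) < 0x01000000);  /* somebody holds it */
    atomic_fetch_add(&rw->lock, 1);        /* one reader fewer */
}
```

Starting from 0x01000000, two successful calls leave the field at 0x00fffffe, and two unlocks bring it back to the idle value.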

5.2.5.2. Getting and releasing a lock for writing

The write_lock macro is implemented in the same way as spin_lock( ) and read_lock( ). For instance, if kernel preemption is supported, the function disables kernel preemption and tries to grab the lock right away by invoking _raw_write_trylock( ). If this function returns 0, the lock was already taken, thus the macro reenables kernel preemption and starts a busy wait loop, as explained in the description of spin_lock( ) in the previous section.

The _raw_write_trylock( ) function is shown below:

int _raw_write_trylock(rwlock_t *lock)

{

atomic_t *count = (atomic_t *)&lock->lock;

if (atomic_sub_and_test(0x01000000, count))

return 1;

atomic_add(0x01000000, count);

return 0;

}

The _raw_write_trylock( ) function subtracts 0x01000000 from the read/write spin lock value, thus clearing the unlock flag (bit 24). If the subtraction operation yields zero (no readers), the lock is acquired and the function returns 1; otherwise, the function atomically adds 0x01000000 to the spin lock value to undo the subtraction operation.

Once again, releasing the write lock is much simpler because the write_unlock macro must simply set the unlock flag in the lock field with the assembly language instruction:

lock; addl $0x01000000,rwlp->lock

and then invoke preempt_enable().
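The write-side logic can be modeled the same way. In this user-space sketch (hypothetical names, no preemption handling), atomic_fetch_sub returns the value held before the subtraction, so comparing it with the bias is equivalent to testing whether the result is zero.

```c
#include <assert.h>
#include <stdatomic.h>

#define TOY_RW_BIAS 0x01000000

/* Hypothetical model: lock holds TOY_RW_BIAS when idle. */
typedef struct { atomic_int lock; } toy_rwlock_t;

static int toy_write_trylock(toy_rwlock_t *rw)
{
    /* The result is zero only if the old value was exactly the bias,
     * that is, no reader and no writer held the lock. */
    if (atomic_fetch_sub(&rw->lock, TOY_RW_BIAS) == TOY_RW_BIAS)
        return 1;
    atomic_fetch_add(&rw->lock, TOY_RW_BIAS);   /* undo the subtraction */
    return 0;
}

static void toy_write_unlock(toy_rwlock_t *rw)
{
    assert(atomic_load(&rw->lock) <= 0);        /* held for writing */
    atomic_fetch_add(&rw->lock, TOY_RW_BIAS);   /* set the unlock flag again */
}
```

A failed attempt leaves the field exactly as it found it, so a single active reader (0x00ffffff) is still counted correctly after a writer has tried and backed off.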

5.2.6. Seqlocks

When using read/write spin locks, requests issued by kernel control paths to perform a read_lock or a write_lock operation have the same priority: readers must wait until the writer has finished and, similarly, a writer must wait until all readers have finished.

Seqlocks, introduced in Linux 2.6, are similar to read/write spin locks, except that they give a much higher priority to writers: in fact, a writer is allowed to proceed even when readers are active. The good part of this strategy is that a writer never waits (unless another writer is active); the bad part is that a reader may sometimes be forced to read the same data several times until it gets a valid copy.

Each seqlock is a seqlock_t structure consisting of two fields: a lock field of type spinlock_t and an integer sequence field. This second field plays the role of a sequence counter. Each reader must read this sequence counter twice, before and after reading the data, and check whether the two values coincide. If they differ, a new writer has become active and has increased the sequence counter, thus implicitly telling the reader that the data just read is not valid.

A seqlock_t variable is initialized to "unlocked" either by assigning to it the value SEQLOCK_UNLOCKED, or by executing the seqlock_init macro. Writers acquire and release a seqlock by invoking write_seqlock( ) and write_sequnlock( ). The first function acquires the spin lock in the seqlock_t data structure, then increases the sequence counter by one; the second function increases the sequence counter once more, then releases the spin lock. This ensures that when the writer is in the middle of writing, the counter is odd, and that when no writer is altering data, the counter is even. Readers implement a critical region as follows:

unsigned int seq;

do {

seq = read_seqbegin(&seqlock);

/* ... CRITICAL REGION ... */

} while (read_seqretry(&seqlock, seq));

read_seqbegin() returns the current sequence number of the seqlock; read_seqretry() returns 1 if either the value of the seq local variable is odd (a writer was updating the data structure when the read_seqbegin( ) function has been invoked), or if the value of seq does not match the current value of the seqlock's sequence counter (a writer started working while the reader was still executing the code in the critical region).

Notice that when a reader enters a critical region, it does not need to disable kernel preemption; on the other hand, the writer automatically disables kernel preemption when entering the critical region, because it acquires the spin lock.
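The sequence counter protocol can be modeled in a few lines of C11. This is a hedged sketch rather than the kernel's implementation: the toy_ names are hypothetical, an atomic_flag stands in for the spinlock_t field, and memory ordering subtleties of a production seqlock are not addressed.

```c
#include <assert.h>
#include <stdatomic.h>

typedef struct {
    atomic_flag lock;        /* stands in for the spinlock_t field */
    atomic_uint sequence;    /* even: no writer active; odd: writer active */
} toy_seqlock_t;

#define TOY_SEQLOCK_UNLOCKED { ATOMIC_FLAG_INIT, 0 }

static void toy_write_seqlock(toy_seqlock_t *sl)
{
    while (atomic_flag_test_and_set(&sl->lock))
        ;                                    /* one writer at a time */
    atomic_fetch_add(&sl->sequence, 1);      /* counter becomes odd */
}

static void toy_write_sequnlock(toy_seqlock_t *sl)
{
    assert(atomic_load(&sl->sequence) & 1);  /* a write must be in progress */
    atomic_fetch_add(&sl->sequence, 1);      /* counter becomes even again */
    atomic_flag_clear(&sl->lock);
}

static unsigned toy_read_seqbegin(toy_seqlock_t *sl)
{
    return atomic_load(&sl->sequence);
}

/* Nonzero if the data read since toy_read_seqbegin( ) must be discarded. */
static int toy_read_seqretry(toy_seqlock_t *sl, unsigned seq)
{
    return (seq & 1) || atomic_load(&sl->sequence) != seq;
}
```

A reader wraps its critical region in the same do/while loop shown earlier, calling toy_read_seqbegin( ) at the top and toy_read_seqretry( ) in the loop condition.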

Not every kind of data structure can be protected by a seqlock. As a general rule, the following conditions must hold:

The data structure to be protected does not include pointers that are modified by the writers and dereferenced by the readers (otherwise, a writer could change the pointer under the nose of the readers).

The code in the critical regions of the readers does not have side effects (otherwise, multiple reads would have different effects from a single read).

Furthermore, the critical regions of the readers should be short and writers should seldom acquire the seqlock, otherwise repeated read accesses would cause a severe overhead. A typical usage of seqlocks in Linux 2.6 consists of protecting some data structures related to the system time handling (see Chapter 6).

5.2.7. Read-Copy Update (RCU)

Read-copy update (RCU) is yet another synchronization technique designed to protect data structures that are mostly accessed for reading by several CPUs. RCU allows many readers and many writers to proceed concurrently (an improvement over seqlocks, which allow only one writer to proceed). Moreover, RCU is lock-free, that is, it uses no lock or counter shared by all CPUs; this is a great advantage over read/write spin locks and seqlocks, which have a high overhead due to cache line-snooping and invalidation.

How does RCU obtain the surprising result of synchronizing several CPUs without shared data structures? The key idea consists of limiting the scope of RCU as follows:

1. Only data structures that are dynamically allocated and referenced by means of pointers can be protected by RCU.

2. No kernel control path can sleep inside a critical region protected by RCU.

When a kernel control path wants to read an RCU-protected data structure, it executes the rcu_read_lock( ) macro, which is equivalent to preempt_disable( ). Next, the reader dereferences the pointer to the data structure and starts reading it. As stated above, the reader cannot sleep until it finishes reading the data structure; the end of the critical region is marked by the rcu_read_unlock( ) macro, which is equivalent to preempt_enable( ).

Because the reader does very little to prevent race conditions, we could expect that the writer has to work a bit more. In fact, when a writer wants to update the data structure, it dereferences the pointer and makes a copy of the whole data structure. Next, the writer modifies the copy. Once finished, the writer changes the pointer to the data structure so as to make it point to the updated copy. Because changing the value of the pointer is an atomic operation, each reader or writer sees either the old copy or the new one: no corruption in the data structure may occur. However, a memory barrier is required to ensure that the updated pointer is seen by the other CPUs only after the data structure has been modified. Such a memory barrier is implicitly introduced if a spin lock is coupled with RCU to forbid the concurrent execution of writers.
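The copy, modify, and publish sequence performed by the writer can be sketched with a C11 atomic pointer. Everything named here (struct config, current_cfg, reader_sum, writer_set_a) is hypothetical, and release/acquire ordering is used as a stand-in for the memory barrier mentioned above; reclamation of the old copy is deliberately left to the caller.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdlib.h>

struct config { int a, b; };                  /* hypothetical protected data */

static _Atomic(struct config *) current_cfg;  /* the protected pointer */

/* Reader: load the pointer once and use only that snapshot. */
static int reader_sum(void)
{
    struct config *c = atomic_load_explicit(&current_cfg, memory_order_acquire);
    return c->a + c->b;
}

/* Writer: copy the structure, modify the copy, then publish it.
 * Returns the old copy, which must not be freed while readers may
 * still be using it. Assumes writers are serialized by the caller. */
static struct config *writer_set_a(int a)
{
    struct config *old = atomic_load(&current_cfg);
    struct config *copy = malloc(sizeof *copy);

    assert(old != NULL && copy != NULL);
    *copy = *old;
    copy->a = a;
    /* The release store makes the filled-in copy visible before the pointer. */
    atomic_store_explicit(&current_cfg, copy, memory_order_release);
    return old;
}
```

A reader that loaded the pointer before the store keeps seeing a consistent old copy, while a reader that loads it afterward sees a fully initialized new one.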

The real problem with the RCU technique, however, is that the old copy of the data structure cannot be freed right away when the writer updates the pointer. In fact, the readers that were accessing the data structure when the writer started its update could still be reading the old copy. The old copy can be freed only after all (potential) readers on the CPUs have executed the rcu_read_unlock( ) macro. The kernel requires every potential reader to execute that macro before:

The CPU performs a process switch (see restriction 2 earlier).

The CPU starts executing in User Mode.

The CPU executes the idle loop (see the section "Kernel Threads" in Chapter 3).

In each of these cases, we say that the CPU has gone through a quiescent state.

The call_rcu( ) function is invoked by the writer to get rid of the old copy of the data structure. It receives as its parameters the address of an rcu_head descriptor (usually embedded inside the data structure to be freed) and the address of a callback function to be invoked when all CPUs have gone through a quiescent state. Once executed, the callback function usually frees the old copy of the data structure.

The call_rcu( ) function stores in the rcu_head descriptor the address of the callback and its parameter, then inserts the descriptor in a per-CPU list of callbacks. Periodically, once every tick (see the section "Updating Local CPU Statistics" in Chapter 6), the kernel checks whether the local CPU has gone through a quiescent state. When all CPUs have gone through a quiescent state, a local tasklet, whose descriptor is stored in the rcu_tasklet per-CPU variable, executes all callbacks in the list.
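The deferred-callback idea can be illustrated with a single-threaded toy model. It is a strong simplification made for illustration only: it keeps one global callback list instead of per-CPU lists, uses no tasklet, and all names (toy_rcu_head, toy_call_rcu, toy_quiescent_state, toy_count_cb) are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

#define TOY_NR_CPUS 4

struct toy_rcu_head {
    struct toy_rcu_head *next;
    void (*func)(struct toy_rcu_head *head);
};

static struct toy_rcu_head *pending;  /* callbacks waiting to be run */
static unsigned quiescent_mask;       /* bit n set: CPU n was quiescent */
static int toy_callbacks_run;         /* demo counter */

/* Demo callback: a real one would free the old copy of the data. */
static void toy_count_cb(struct toy_rcu_head *head)
{
    (void)head;
    toy_callbacks_run++;
}

static void toy_call_rcu(struct toy_rcu_head *head,
                         void (*func)(struct toy_rcu_head *head))
{
    head->func = func;
    head->next = pending;
    pending = head;
    quiescent_mask = 0;               /* every CPU must be observed again */
}

/* Called when a CPU switches process, enters User Mode, or idles. */
static void toy_quiescent_state(int cpu)
{
    assert(cpu >= 0 && cpu < TOY_NR_CPUS);
    quiescent_mask |= 1u << cpu;
    if (quiescent_mask != (1u << TOY_NR_CPUS) - 1)
        return;                       /* some CPU may still hold a reference */
    while (pending) {                 /* all CPUs done: run the callbacks */
        struct toy_rcu_head *head = pending;
        pending = head->next;
        head->func(head);
    }
}
```

Resetting the mask on every registration is more conservative than necessary, but it guarantees that no callback runs before each CPU has passed a quiescent state after the callback was queued.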

RCU is a new addition in Linux 2.6; it is used in the networking layer and in the Virtual Filesystem.

5.2.8. Semaphores

We have already introduced semaphores in the section "Synchronization and Critical Regions" in Chapter 1. Essentially, they implement a locking primitive that allows waiters to sleep until the desired resource becomes free.

Actually, Linux offers two kinds of semaphores:

Kernel semaphores, which are used by kernel control paths

System V IPC semaphores, which are used by User Mode processes

In this section, we focus on kernel semaphores, while IPC semaphores are described in Chapter 19.

A kernel semaphore is similar to a spin lock, in that it doesn't allow a kernel control path to proceed unless the lock is open. However, whenever a kernel control path tries to acquire a busy resource protected by a kernel semaphore, the corresponding process is suspended. It becomes runnable again when the resource is released. Therefore, kernel semaphores can be acquired only by functions that are allowed to sleep; interrupt handlers and deferrable functions cannot use them.

A kernel semaphore is an object of type struct semaphore, containing the fields shown in the following list.

count Stores an atomic_t value. If it is greater than 0, the resource is free, that is, it is currently available. If count is equal to 0, the semaphore is busy but no other process is waiting for the protected resource. Finally, if count is negative, the resource is unavailable and at least one process is waiting for it.

wait Stores the address of a wait queue list that includes all sleeping processes that are currently waiting for the resource. Of course, if count is greater than or equal to 0, the wait queue is empty.

sleepers Stores a flag that indicates whether some processes are sleeping on the semaphore. We'll see this field in operation soon.

The init_MUTEX( ) and init_MUTEX_LOCKED( ) functions may be used to initialize a semaphore for exclusive access: they set the count field to 1 (free resource with exclusive access) and 0 (busy resource with exclusive access currently granted to the process that initializes the semaphore), respectively. The DECLARE_MUTEX and DECLARE_MUTEX_LOCKED macros do the same, but they also statically allocate the struct semaphore variable. Note that a semaphore could also be initialized with an arbitrary positive value n for count. In this case, at most n processes are allowed to concurrently access the resource.

5.2.8.1. Getting and releasing semaphores

Let's start by discussing how to release a semaphore, which is much simpler than getting one. When a process wishes to release a kernel semaphore lock, it invokes the up( ) function. This function is essentially equivalent to the following assembly language fragment:

movl $sem->count,%ecx

lock; incl (%ecx)

jg 1f

lea (%ecx),%eax

pushl %edx

pushl %ecx

call __up

popl %ecx

popl %edx

1:

where __up( ) is the following C function:

__attribute__((regparm(3))) void __up(struct semaphore *sem)

{

wake_up(&sem->wait);

}

The up( ) function increases the count field of the *sem semaphore, and then it checks whether its value is greater than 0. The increment of count and the setting of the flag tested by the following jump instruction must be atomically executed, or else another kernel control path could concurrently access the field value, with disastrous results. If count is greater than 0, there was no process sleeping in the wait queue, so nothing has to be done. Otherwise, the __up( ) function is invoked so that one sleeping process is woken up. Notice that __up( ) receives its parameter from the eax register (see the description of the __switch_to( ) function in the section "Performing the Process Switch" in Chapter 3).

Conversely, when a process wishes to acquire a kernel semaphore lock, it invokes the down( ) function. The implementation of down( ) is quite involved, but it is essentially equivalent to the following:

down:

movl $sem->count,%ecx

lock; decl (%ecx);

jns 1f

lea (%ecx), %eax

pushl %edx

pushl %ecx

call __down

popl %ecx

popl %edx

1:

where __down( ) is the following C function:

__attribute__((regparm(3))) void __down(struct semaphore * sem)

{

DECLARE_WAITQUEUE(wait, current);

unsigned long flags;

current->state = TASK_UNINTERRUPTIBLE;

spin_lock_irqsave(&sem->wait.lock, flags);

add_wait_queue_exclusive_locked(&sem->wait, &wait);

sem->sleepers++;

for (;;) {

if (!atomic_add_negative(sem->sleepers-1, &sem->count)) {

sem->sleepers = 0;

break;

}

sem->sleepers = 1;

spin_unlock_irqrestore(&sem->wait.lock, flags);

schedule( );

spin_lock_irqsave(&sem->wait.lock, flags);

current->state = TASK_UNINTERRUPTIBLE;

}

remove_wait_queue_locked(&sem->wait, &wait);

wake_up_locked(&sem->wait);

spin_unlock_irqrestore(&sem->wait.lock, flags);

current->state = TASK_RUNNING;

}

The down( ) function decreases the count field of the *sem semaphore, and then checks whether its value is negative. Again, the decrement and the test must be atomically executed. If count is greater than or equal to 0, the current process acquires the resource and the execution continues normally. Otherwise, count is negative, and the current process must be suspended. The contents of some registers are saved on the stack, and then __down( ) is invoked.

Essentially, the __down( ) function changes the state of the current process from TASK_RUNNING to TASK_UNINTERRUPTIBLE, and it puts the process in the semaphore wait queue. Before accessing the fields of the semaphore structure, the function also gets the sem->wait.lock spin lock that protects the semaphore wait queue (see "How Processes Are Organized" in Chapter 3) and disables local interrupts. Usually, wait queue functions get and release the wait queue spin lock as necessary when inserting and deleting an element. The __down( ) function, however, uses the wait queue spin lock also to protect the other fields of the semaphore data structure, so that no process running on another CPU is able to read or modify them. To that end, __down( ) uses the "_locked" versions of the wait queue functions, which assume that the spin lock has been already acquired before their invocations.

The main task of the __down( ) function is to suspend the current process until the semaphore is released. However, the way in which this is done is quite involved. To easily understand the code, keep in mind that the sleepers field of the semaphore is usually set to 0 if no process is sleeping in the wait queue of the semaphore, and it is set to 1 otherwise. Let's try to explain the code by considering a few typical cases.

MUTEX semaphore open (count equal to 1, sleepers equal to 0)

The down macro just sets the count field to 0 and jumps to the next instruction of the main program; therefore, the __down( ) function is not executed at all.

MUTEX semaphore closed, no sleeping processes (count equal to 0, sleepers equal to 0)

The down macro decreases count and invokes the __down( ) function with the count field set to -1 and the sleepers field set to 0. In each iteration of the loop, the function checks whether the count field is negative. (Observe that the count field is not changed by atomic_add_negative( ): sleepers is equal to 0 when __down( ) is invoked and is then incremented to 1, so the value added, sleepers-1, is 0.)

If the count field is negative, the function invokes schedule( ) to suspend the current process. The count field is still set to -1, and the sleepers field to 1. When the process later resumes, it continues inside this loop and repeats the test. If the count field is not negative, the function sets sleepers to 0 and exits from the loop. It tries to wake up another process in the semaphore wait queue (but in our scenario, the queue is now empty) and terminates holding the semaphore. On exit, both the count field and the sleepers field are set to 0, as required when the semaphore is closed but no process is waiting for it.

MUTEX semaphore closed, other sleeping processes (count equal to -1, sleepers equal to 1)

The down macro decreases count and invokes the __down( ) function with count set to -2 and sleepers set to 1. The function temporarily sets sleepers to 2, and then undoes the decrement performed by the down macro by adding the value sleepers-1 to count. At the same time, the function checks whether count is still negative (the semaphore could have been released by the holding process right before __down( ) entered the critical region).

If the count field is negative, the function resets sleepers to 1 and invokes schedule( ) to suspend the current process. The count field is still set to -1, and the sleepers field to 1. If the count field is not negative, the function sets sleepers to 0, tries to wake up another process in the semaphore wait queue, and exits holding the semaphore. On exit, the count field is set to 0 and the sleepers field to 0. The values of both fields look wrong, because there are other sleeping processes. However, consider that another process in the wait queue has been woken up. This process does another iteration of the loop; the atomic_add_negative( ) function subtracts 1 from count, restoring it to -1; moreover, before returning to sleep, the woken-up process resets sleepers to 1.

So, the code properly works in all cases. Consider that the wake_up( ) function in __down( ) wakes up at most one process, because the sleeping processes in the wait queue are exclusive (see the section "How Processes Are Organized" in Chapter 3).
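The bookkeeping of count and sleepers in the cases above can be replayed with a single-threaded model. The sketch below is an illustration under simplifying assumptions: there is no wait queue, no spin lock, and no scheduler, and the toy_ functions are hypothetical names for the individual steps of down( ), __down( ), and up( ).

```c
#include <assert.h>

struct toy_sem { int count; int sleepers; };  /* hypothetical model */

/* Fast path of down( ): returns 1 if acquired, 0 if __down( ) must run. */
static int toy_down_fast(struct toy_sem *s)
{
    return --s->count >= 0;
}

/* Entry of __down( ): the new sleeper announces itself. */
static void toy_down_enter(struct toy_sem *s)
{
    s->sleepers++;
}

/* One pass through the loop body of __down( ): returns 1 if the caller
 * now holds the semaphore, 0 if it would call schedule( ) and sleep. */
static int toy_down_loop(struct toy_sem *s)
{
    assert(s->sleepers >= 0);
    s->count += s->sleepers - 1;   /* role of atomic_add_negative( ) */
    if (s->count >= 0) {
        s->sleepers = 0;
        return 1;
    }
    s->sleepers = 1;
    return 0;
}

/* up( ): returns 1 if a sleeping process has to be woken up. */
static int toy_up(struct toy_sem *s)
{
    return ++s->count <= 0;
}
```

Replaying the third case with this model (a second process arriving while one is already asleep) leaves count at -1 and sleepers at 1 after each sleeper goes back to sleep, and count at 0 once a woken process takes the semaphore.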

Only exception handlers, and particularly system call service routines, can use the down( ) function. Interrupt handlers or deferrable functions must not invoke down( ), because this function suspends the process when the semaphore is busy. For this reason, Linux provides the down_trylock( ) function, which may be safely used by one of the previously mentioned asynchronous functions. It is identical to down( ) except when the resource is busy. In this case, the function returns immediately instead of putting the process to sleep.

A slightly different function called down_interruptible( ) is also defined. It is widely used by device drivers, because it allows processes that receive a signal while being blocked on a semaphore to give up the "down" operation. If the sleeping process is woken up by a signal before getting the needed resource, the function increases the count field of the semaphore and returns the value -EINTR. On the other hand, if down_interruptible( ) runs to normal completion and gets the resource, it returns 0. The device driver may thus abort the I/O operation when the return value is -EINTR.

Finally, because processes usually find semaphores in an open state, the semaphore functions are optimized for this case. In particular, the up( ) function does not execute jump instructions if the semaphore wait queue is empty; similarly, the down( ) function does not execute jump instructions if the semaphore is open. Much of the complexity of the semaphore implementation is precisely due to the effort of avoiding costly instructions in the main branch of the execution flow.

5.2.9. Read/Write Semaphores

Read/write semaphores are similar to the read/write spin locks described earlier in the section "Read/Write Spin Locks," except that waiting processes are suspended instead of spinning until the semaphore becomes open again.

Many kernel control paths may concurrently acquire a read/write semaphore for reading; however, every writer kernel control path must have exclusive access to the protected resource. Therefore, the semaphore can be acquired for writing only if no other kernel control path is holding it for either read or write access. Read/write semaphores improve the amount of concurrency inside the kernel and improve overall system performance.

The kernel handles all processes waiting for a read/write semaphore in strict FIFO order. Each reader or writer that finds the semaphore closed is inserted in the last position of a semaphore's wait queue list. When the semaphore is released, the process in the first position of the wait queue list is checked. The first process is always awoken. If it is a writer, the other processes in the wait queue continue to sleep. If it is a reader, all readers at the start of the queue, up to the first writer, are also woken up and get the lock. However, readers that have been queued after a writer continue to sleep.
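The wake-up policy just described is simple enough to capture in a small function. In this sketch the wait queue is represented, purely as an assumption for illustration, by a string of 'R' (reader) and 'W' (writer) characters in FIFO order; toy_rwsem_wake_count is a hypothetical name.

```c
#include <assert.h>
#include <stddef.h>

/* Return how many waiters at the head of the queue are woken up when
 * the semaphore is released. */
static size_t toy_rwsem_wake_count(const char *queue)
{
    size_t n = 0;

    assert(queue != NULL);
    if (queue[0] == '\0')
        return 0;              /* nobody is waiting */
    if (queue[0] == 'W')
        return 1;              /* a writer at the head is woken alone */
    while (queue[n] == 'R')
        n++;                   /* all readers up to the first writer */
    return n;
}
```

For the queue "RRWR" the function returns 2: the two leading readers are woken, while the writer and the reader queued behind it keep sleeping.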

Each read/write semaphore is described by a rw_semaphore structure that includes the following fields:

count Stores two 16-bit counters. The counter in the most significant word encodes in two's complement form the sum of the number of nonwaiting writers (either 0 or 1) and the number of waiting kernel control paths. The counter in the less significant word encodes the total number of nonwaiting readers and writers.

wait_list Points to a list of waiting processes. Each element in this list is a rwsem_waiter structure, including a pointer to the descriptor of the sleeping process and a flag indicating whether the process wants the semaphore for reading or for writing.

wait_lock A spin lock used to protect the wait queue list and the rw_semaphore structure itself.

The init_rwsem( ) function initializes an rw_semaphore structure by setting the count field to 0, the wait_lock spin lock to unlocked, and wait_list to the empty list.

The down_read( ) and down_write( ) functions acquire the read/write semaphore for reading and writing, respectively. Similarly, the up_read( ) and up_write( ) functions release a read/write semaphore previously acquired for reading and for writing. The down_read_trylock( ) and down_write_trylock( ) functions are similar to down_read( ) and down_write( ), respectively, but they do not block the process if the semaphore is busy. Finally, the downgrade_write( ) function atomically transforms a write lock into a read lock. The implementation of these functions is long, but easy to follow because it resembles the implementation of normal semaphores; therefore, we avoid describing them.

5.2.10. Completions

Linux 2.6 also makes use of another synchronization primitive similar to semaphores: completions. They have been introduced to solve a subtle race condition that occurs in multiprocessor systems when process A allocates a temporary semaphore variable, initializes it as closed MUTEX, passes its address to process B, and then invokes down( ) on it. Process A plans to destroy the semaphore as soon as it awakens. Later on, process B running on a different CPU invokes up( ) on the semaphore. However, in the current implementation up( ) and down( ) can execute concurrently on the same semaphore. Thus, process A can be woken up and destroy the temporary semaphore while process B is still executing the up( ) function. As a result, up( ) might attempt to access a data structure that no longer exists.

Of course, it is possible to change the implementation of down( ) and up( ) to forbid concurrent executions on the same semaphore. However, this change would require additional instructions, which is a bad thing to do for functions that are so heavily used.

The completion is a synchronization primitive that is specifically designed to solve this problem. The completion data structure includes a wait queue head and a flag:

struct completion {

unsigned int done;

wait_queue_head_t wait;

};

The function corresponding to up( ) is called complete( ). It receives as an argument the address of a completion data structure, invokes spin_lock_irqsave( ) on the spin lock of the completion's wait queue, increases the done field, wakes up the exclusive process sleeping in the wait wait queue, and finally invokes spin_unlock_irqrestore( ).

The function corresponding to down( ) is called wait_for_completion( ). It receives as an argument the address of a completion data structure and checks the value of the done flag. If it is greater than zero, wait_for_completion( ) terminates, because complete( ) has already been executed on another CPU. Otherwise, the function adds current to the tail of the wait queue as an exclusive process and puts current to sleep in the TASK_UNINTERRUPTIBLE state. Once woken up, the function checks the value of the done flag again: if it is still equal to zero, the current process is suspended again; otherwise, the function removes current from the wait queue and terminates. As in the case of the complete( ) function, wait_for_completion( ) makes use of the spin lock in the completion's wait queue.
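A user-space analogue of the two functions can be built on POSIX threads. This is a sketch under explicit assumptions, not the kernel code: a mutex stands in for the wait queue spin lock, a condition variable stands in for the wait queue, the toy_ names are hypothetical, and the waiter decrements done to consume the event, a detail the description above does not spell out.

```c
#include <assert.h>
#include <pthread.h>

struct toy_completion {
    unsigned int done;
    pthread_mutex_t lock;     /* role of the wait queue spin lock */
    pthread_cond_t wait;      /* role of the wait queue */
};

#define TOY_COMPLETION_INIT \
    { 0, PTHREAD_MUTEX_INITIALIZER, PTHREAD_COND_INITIALIZER }

static void toy_complete(struct toy_completion *c)
{
    pthread_mutex_lock(&c->lock);
    c->done++;
    pthread_cond_signal(&c->wait);       /* wake up one waiter */
    pthread_mutex_unlock(&c->lock);
}

static void toy_wait_for_completion(struct toy_completion *c)
{
    pthread_mutex_lock(&c->lock);
    while (c->done == 0)                 /* woken too early: sleep again */
        pthread_cond_wait(&c->wait, &c->lock);
    assert(c->done > 0);
    c->done--;                           /* consume one event (assumption) */
    pthread_mutex_unlock(&c->lock);
}
```

Because both functions run entirely under the same lock, the waiter cannot return, and thus cannot destroy the structure, while the signaling side is still touching it.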

The real difference between completions and semaphores is how the spin lock included in the wait queue is used. In completions, the spin lock is used to ensure that complete( ) and wait_for_completion( ) cannot execute concurrently. In semaphores, the spin lock is used to prevent concurrent invocations of down( ) from messing up the semaphore data structure.

5.2.11. Local Interrupt Disabling

Interrupt disabling is one of the key mechanisms used to ensure that a sequence of kernel statements is treated as a critical section. It allows a kernel control path to continue executing even when hardware devices issue IRQ signals, thus providing an effective way to protect data structures that are also accessed by interrupt handlers. By itself, however, local interrupt disabling does not protect against concurrent accesses to data structures by interrupt handlers running on other CPUs, so in multiprocessor systems, local interrupt disabling is often coupled with spin locks (see the later section "Synchronizing Accesses to Kernel Data Structures").

The local_irq_disable( ) macro, which makes use of the cli assembly language instruction, disables interrupts on the local CPU. The local_irq_enable( ) macro, which makes use of the sti assembly language instruction, enables them. As stated in the section "IRQs and Interrupts" in Chapter 4, the cli and sti assembly language instructions, respectively, clear and set the IF flag of the eflags control register. The irqs_disabled( ) macro yields the value one if the IF flag of the eflags register is clear, and the value zero if the flag is set.

When the kernel enters a critical section, it disables interrupts by clearing the IF flag of the eflags register. But at the end of the critical section, often the kernel can't simply set the flag again. Interrupts can execute in nested fashion, so the kernel does not necessarily know what the IF flag was before the current control path executed. In these cases, the control path must save the old setting of the flag and restore that setting at the end.

Saving and restoring the eflags content is achieved by means of the local_irq_save and local_irq_restore macros, respectively. The local_irq_save macro copies the content of the eflags register into a local variable; the IF flag is then cleared by a cli assembly language instruction. At the end of the critical region, the macro local_irq_restore restores the original content of eflags; therefore, interrupts are enabled only if they were enabled before this control path issued the cli assembly language instruction.
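The save-and-restore discipline can be illustrated with a small user-space model. This is a sketch, not kernel code: the sim_ names are hypothetical, the eflags register is simulated by an ordinary variable, and only the IF flag (bit 9, mask 0x200) is modeled.

```c
/* Simulated eflags register of one CPU; only the IF bit matters here. */
#define SIM_IF_FLAG 0x200UL               /* IF is bit 9 of eflags */

unsigned long sim_eflags = SIM_IF_FLAG;   /* interrupts start out enabled */

void sim_local_irq_disable(void) { sim_eflags &= ~SIM_IF_FLAG; }  /* models cli */
void sim_local_irq_enable(void)  { sim_eflags |= SIM_IF_FLAG; }   /* models sti */

/* Models irqs_disabled( ): one if IF is clear, zero if IF is set. */
int sim_irqs_disabled(void) { return (sim_eflags & SIM_IF_FLAG) == 0; }

/* Models local_irq_save: copy eflags into a local variable, then clear IF. */
#define sim_local_irq_save(flags) \
    do { (flags) = sim_eflags; sim_local_irq_disable(); } while (0)

/* Models local_irq_restore: write back the saved eflags content. */
#define sim_local_irq_restore(flags) \
    do { sim_eflags = (flags); } while (0)

/* A critical region that may be entered with interrupts enabled or disabled.
 * Returns whether interrupts were disabled while inside the region. */
int sim_critical_region(void)
{
    unsigned long flags;
    int disabled_inside;

    sim_local_irq_save(flags);
    disabled_inside = sim_irqs_disabled();
    sim_local_irq_restore(flags);
    return disabled_inside;
}
```

When sim_critical_region( ) is entered with the simulated IF flag already clear, the flag is still clear on return. A plain disable/enable pair would instead have enabled interrupts behind the caller's back.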

5.2.12. Disabling and Enabling Deferrable Functions

In the section "Softirqs" in Chapter 4, we explained that deferrable functions can be executed at unpredictable times (essentially, on termination of hardware interrupt handlers). Therefore, data structures accessed by deferrable functions must be protected against race conditions.

A trivial way to forbid the execution of deferrable functions on a CPU is to disable interrupts on that CPU. Because no interrupt handler can be activated, softirq actions cannot be started asynchronously.

As we'll see in the next section, however, the kernel sometimes needs to disable deferrable functions without disabling interrupts. Local deferrable functions can be enabled or disabled on the local CPU by acting on the softirq counter stored in the preempt_count field of the current's thread_info descriptor.

Recall that the do_softirq( ) function never executes the softirqs if the softirq counter is positive. Moreover, tasklets are implemented on top of softirqs, so setting this counter to a positive value disables the execution of all deferrable functions on a given CPU, not just softirqs.

The local_bh_disable macro adds one to the softirq counter of the local CPU, while the local_bh_enable( ) function subtracts one from it. The kernel can thus use several nested invocations of local_bh_disable; deferrable functions will be enabled again only by the local_bh_enable macro matching the first local_bh_disable invocation.

After having decreased the softirq counter, local_bh_enable( ) performs two important operations that help to ensure timely execution of long-waiting threads:

1. Checks the hardirq counter and the softirq counter in the preempt_count field of the local CPU; if both of them are zero and there are pending softirqs to be executed, invokes do_softirq( ) to activate them (see the section "Softirqs" in Chapter 4).

2. Checks whether the TIF_NEED_RESCHED flag of the local CPU is set; if so, a process switch request is pending, thus invokes the preempt_schedule( ) function (see the section "Kernel Preemption" earlier in this chapter).
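The nesting behavior of the softirq counter can be sketched with a user-space model. Everything named sim_ or SIM_ is hypothetical. The bit layout of the simulated preempt_count field (softirq counter in bits 8 to 15, hardirq counter in bits 16 to 27) is an assumption taken from the Linux 2.6 layout, and the TIF_NEED_RESCHED check is deliberately left out.

```c
/* Assumed layout of the simulated preempt_count field:
 * bits 0-7 preemption counter, bits 8-15 softirq counter,
 * bits 16-27 hardirq counter. */
#define SIM_SOFTIRQ_OFFSET 0x00000100U
#define SIM_SOFTIRQ_MASK   0x0000ff00U
#define SIM_HARDIRQ_MASK   0x0fff0000U

unsigned int sim_preempt_count;   /* per-thread in the real kernel */
unsigned int sim_pending;         /* nonzero if softirqs are pending */
unsigned int sim_softirq_runs;    /* how many times softirqs were activated */

/* Stand-in for do_softirq( ): just records that pending softirqs ran. */
void sim_do_softirq(void)
{
    sim_pending = 0;
    sim_softirq_runs++;
}

/* Models local_bh_disable: add one to the softirq counter. */
void sim_local_bh_disable(void)
{
    sim_preempt_count += SIM_SOFTIRQ_OFFSET;
}

/* Models local_bh_enable( ): subtract one from the softirq counter, then run
 * pending softirqs only if both the softirq and hardirq counters are zero. */
void sim_local_bh_enable(void)
{
    sim_preempt_count -= SIM_SOFTIRQ_OFFSET;
    if ((sim_preempt_count & (SIM_SOFTIRQ_MASK | SIM_HARDIRQ_MASK)) == 0
        && sim_pending)
        sim_do_softirq();
    /* The TIF_NEED_RESCHED check is omitted from this sketch. */
}
```

With two nested sim_local_bh_disable( ) calls, the first sim_local_bh_enable( ) leaves the counter positive and nothing runs; only the outermost enable activates the pending softirqs.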


5.3. Synchronizing Accesses to Kernel Data Structures

A shared data structure can be protected against race conditions by using some of the synchronization primitives shown in the previous section. Of course, system performance may vary considerably, depending on the kind of synchronization primitive selected. Usually, the following rule of thumb is adopted by kernel developers: always keep the concurrency level as high as possible in the system.

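In turn, the concurrency level in the system depends on two main factors: the number of I/O devices that operate concurrently, and the number of CPUs that do productive work.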

To maximize I/O throughput, interrupts should be disabled for very short periods of time. As described in the section "IRQs and Interrupts" in Chapter 4, when interrupts are disabled, IRQs issued by I/O devices are temporarily ignored by the PIC, and no new activity can start on such devices.

To use CPUs efficiently, synchronization primitives based on spin locks should be avoided whenever possible. When a CPU is executing a tight instruction loop waiting for the spin lock to open, it is wasting precious machine cycles. Even worse, as we have already said, spin locks have negative effects on the overall performance of the system because of their impact on the hardware caches.

Let's illustrate a couple of cases in which synchronization can be achieved while still maintaining a high concurrency level:

A shared data structure consisting of a single integer value can be updated by declaring it as an atomic_t type and by using atomic operations. An atomic operation is faster than spin locks and interrupt disabling, and it slows down only kernel control paths that concurrently access the data structure.

Inserting an element into a shared linked list is never atomic, because it consists of at least two pointer assignments. Nevertheless, the kernel can sometimes perform this insertion operation without using locks or disabling interrupts. As an example of why this works, we'll consider the case where a system call service routine (see "System Call Handler and Service Routines" in Chapter 10) inserts new elements in a singly linked list, while an interrupt handler or deferrable function asynchronously looks up the list. In the C language, insertion is implemented by means of the following pointer assignments:

new->next = list_element->next;

list_element->next = new;

In assembly language, insertion reduces to two consecutive atomic instructions. The first instruction sets up the next pointer of the new element, but it does not modify the list. Thus, if the interrupt handler sees the list between the execution of the first and second instructions, it sees the list without the new element. If the handler sees the list after the execution of the second instruction, it sees the list with the new element. The important point is that in either case, the list is consistent and in an uncorrupted state. However, this integrity is assured only if the interrupt handler does not modify the list. If it does, the next pointer that was just set within the new element might become invalid.

Moreover, developers must ensure that the order of the two assignment operations cannot be subverted by the compiler or the CPU's control unit; otherwise, if the system call service routine is interrupted by the interrupt handler between the two assignments, the handler finds a corrupted list. Therefore, a write memory barrier primitive is required:

new->next = list_element->next;

wmb( );

list_element->next = new;
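The same ordering requirement can be expressed in portable user-space C. The sketch below is an analogy, not kernel code: the sim_ names are hypothetical, and a C11 release fence is used as a stand-in for wmb( ). The two primitives are not identical, but both keep the initialization of the new element's next pointer ordered before the store that publishes the element.

```c
#include <stdatomic.h>
#include <stddef.h>

/* Hypothetical singly linked list node with an atomic next pointer. */
struct sim_node {
    int value;
    struct sim_node *_Atomic next;
};

/* Insert node right after pos. A reader sees the list either without the
 * new node or with a fully initialized new node, never a half-built one. */
void sim_list_insert_after(struct sim_node *pos, struct sim_node *node)
{
    struct sim_node *succ =
        atomic_load_explicit(&pos->next, memory_order_relaxed);

    /* First assignment: set up the new node; the list is not modified yet. */
    atomic_store_explicit(&node->next, succ, memory_order_relaxed);

    /* Stand-in for wmb( ): the store above may not be reordered after
     * the publishing store below. */
    atomic_thread_fence(memory_order_release);

    /* Second assignment: publish the new node in the list. */
    atomic_store_explicit(&pos->next, node, memory_order_relaxed);
}

/* Read-only lookup, as an asynchronous reader would perform it.
 * Returns 1 if value is found, 0 otherwise. */
int sim_list_contains(struct sim_node *head, int value)
{
    struct sim_node *n;

    for (n = head; n != NULL;
         n = atomic_load_explicit(&n->next, memory_order_acquire))
        if (n->value == value)
            return 1;
    return 0;
}
```

As in the text, this scheme is safe only while readers never modify the list; concurrent writers would still need a lock.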

5.3.1. Choosing Among Spin Locks, Semaphores, and Interrupt Disabling

Unfortunately, access patterns to most kernel data structures are a lot more complex than the simple examples just shown, and kernel developers are forced to use semaphores, spin locks, interrupt disabling, and softirq disabling. Generally speaking, choosing the synchronization primitives depends on what kinds of kernel control paths access the data structure, as shown in Table 5-8. Remember that whenever a kernel control path acquires a spin lock (as well as a read/write lock, a seqlock, or an RCU "read lock"), disables the local interrupts, or disables the local softirqs, kernel preemption is automatically disabled.

Table 5-8. Protection required by data structures accessed by kernel control paths

| Kernel control paths accessing the data structure | UP protection | MP further protection |
|---|---|---|
| Exceptions | Semaphore | None |
| Interrupts | Local interrupt disabling | Spin lock |
| Deferrable functions | None | None or spin lock (see Table 5-9) |
| Exceptions + Interrupts | Local interrupt disabling | Spin lock |
| Exceptions + Deferrable functions | Local softirq disabling | Spin lock |
| Interrupts + Deferrable functions | Local interrupt disabling | Spin lock |
| Exceptions + Interrupts + Deferrable functions | Local interrupt disabling | Spin lock |

5.3.1.1. Protecting a data structure accessed by exceptions

When a data structure is accessed only by exception handlers, race conditions are usually easy to understand and prevent. The most common exceptions that give rise to synchronization problems are the system call service routines (see the section "System Call Handler and Service Routines" in Chapter 10) in which the CPU operates in Kernel Mode to offer a service to a User Mode program. Thus, a data structure accessed only by an exception usually represents a resource that can be assigned to one or more processes.

Race conditions are avoided through semaphores, because these primitives allow the process to sleep until the resource becomes available. Notice that semaphores work equally well both in uniprocessor and multiprocessor systems.

Kernel preemption does not create problems either. If a process that owns a semaphore is preempted, a new process running on the same CPU could try to get the semaphore. When this occurs, the new process is put to sleep, and eventually the old process will release the semaphore. The only case in which kernel preemption must be explicitly disabled is when accessing per-CPU variables, as explained in the section "Per-CPU Variables" earlier in this chapter.

5.3.1.2. Protecting a data structure accessed by interrupts

Suppose that a data structure is accessed by only the "top half" of an interrupt handler. We learned in the section "Interrupt Handling" in Chapter 4 that each interrupt handler is serialized with respect to itself; that is, it cannot execute more than once concurrently. Thus, accessing the data structure does not require synchronization primitives.

Things are different, however, if the data structure is accessed by several interrupt handlers. A handler may interrupt another handler, and different interrupt handlers may run concurrently in multiprocessor systems. Without synchronization, the shared data structure might easily become corrupted.

In uniprocessor systems, race conditions must be avoided by disabling interrupts in all critical regions of the interrupt handler. Nothing less will do because no other synchronization primitives accomplish the job. A semaphore can block the process, so it cannot be used in an interrupt handler. A spin lock, on the other hand, can freeze the system: if the handler accessing the data structure is interrupted, it cannot release the lock; therefore, the new interrupt handler keeps waiting on the tight loop of the spin lock.

Multiprocessor systems, as usual, are even more demanding. Race conditions cannot be avoided by simply disabling local interrupts. In fact, even if interrupts are disabled on a CPU, interrupt handlers can still be executed on the other CPUs. The most convenient method to prevent the race conditions is to disable local interrupts (so that other interrupt handlers running on the same CPU won't interfere) and to acquire a spin lock or a read/write spin lock that protects the data structure. Notice that these additional spin locks cannot freeze the system because even if an interrupt handler finds the lock closed, eventually the interrupt handler on the other CPU that owns the lock will release it.

The Linux kernel uses several macros that couple the enabling and disabling of local interrupts with spin lock handling. Table 5-9 describes all of them. In uniprocessor systems, these macros just enable or disable local interrupts and kernel preemption.

Table 5-9. Interrupt-aware spin lock macros

| Macro | Description |
|---|---|
| spin_lock_irq(l) | local_irq_disable( ); spin_lock(l) |
| spin_unlock_irq(l) | spin_unlock(l); local_irq_enable( ) |
| spin_lock_bh(l) | local_bh_disable( ); spin_lock(l) |
| spin_unlock_bh(l) | spin_unlock(l); local_bh_enable( ) |
| spin_lock_irqsave(l,f) | local_irq_save(f); spin_lock(l) |
| spin_unlock_irqrestore(l,f) | spin_unlock(l); local_irq_restore(f) |
| read_lock_irq(l) | local_irq_disable( ); read_lock(l) |
| read_unlock_irq(l) | read_unlock(l); local_irq_enable( ) |
| read_lock_bh(l) | local_bh_disable( ); read_lock(l) |
| read_unlock_bh(l) | read_unlock(l); local_bh_enable( ) |
| write_lock_irq(l) | local_irq_disable( ); write_lock(l) |
| write_unlock_irq(l) | write_unlock(l); local_irq_enable( ) |
| write_lock_bh(l) | local_bh_disable( ); write_lock(l) |
| write_unlock_bh(l) | write_unlock(l); local_bh_enable( ) |
| read_lock_irqsave(l,f) | local_irq_save(f); read_lock(l) |
| read_unlock_irqrestore(l,f) | read_unlock(l); local_irq_restore(f) |
| write_lock_irqsave(l,f) | local_irq_save(f); write_lock(l) |
| write_unlock_irqrestore(l,f) | write_unlock(l); local_irq_restore(f) |
| read_seqbegin_irqsave(l,f) | local_irq_save(f); read_seqbegin(l) |
| read_seqretry_irqrestore(l,v,f) | read_seqretry(l,v); local_irq_restore(f) |
| write_seqlock_irqsave(l,f) | local_irq_save(f); write_seqlock(l) |
| write_sequnlock_irqrestore(l,f) | write_sequnlock(l); local_irq_restore(f) |
| write_seqlock_irq(l) | local_irq_disable( ); write_seqlock(l) |
| write_sequnlock_irq(l) | write_sequnlock(l); local_irq_enable( ) |
| write_seqlock_bh(l) | local_bh_disable( ); write_seqlock(l) |
| write_sequnlock_bh(l) | write_sequnlock(l); local_bh_enable( ) |

5.3.1.3. Protecting a data structure accessed by deferrable functions